David Payne:

Mike Cranfield:

Interesting!

The low end could be restored using PixelMath along the lines of: iif(original < x, original, starless). Where x is found by examining the histogram and finding the cut off point.

Do you know that, Mike, or are you just assuming? Were the pixels just clipped? If so, why don't BlurX or StarNet just restore them? It could be that BlurX assigned the value of these pixels to neighbouring pixels, which, if you added them back in, would amount to a relative over-brightening of the pixels their values were assigned to. Without knowing what happened to these pixel values it is hard to say (that darned superposition thing again). Once again, is BlurX doing deconvolution or not?

I was proposing this PixelMath for SXT rather than BXT. In SXT the primary changes to your image should relate to stars, i.e. brighter elements of the image, so pixel values that are likely to be greater than the threshold you would specify. I agree this approach may well not be so effective for BXT.
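Mike's PixelMath expression can be sketched outside PixInsight to show the logic. This minimal Python version treats each image as a flat list of normalized pixel values in matching order. Taking x as the minimum of the starless image (the point where its histogram is cut off) is an assumption standing in for reading the cut-off from the histogram by eye; the function names are hypothetical.

```python
def find_cutoff(starless):
    """Assumed estimate of x: the lowest value left in the processed image,
    i.e. where its histogram is truncated on the left."""
    return min(starless)


def restore_low_end(original, starless, x):
    """Pixel-by-pixel equivalent of PixelMath iif(original < x, original, starless)."""
    return [o if o < x else s for o, s in zip(original, starless)]


original = [0.0010, 0.0025, 0.0050, 0.3000]
starless = [0.0038, 0.0038, 0.0050, 0.0500]   # faint tail raised, star removed
x = find_cutoff(starless)
restored = restore_low_end(original, starless, x)
print(x, restored)
```

The star pixel (0.3) still takes the starless value because it sits well above the cut-off, which is why the trick suits star removal better than deconvolution, where brighter pixels change too.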


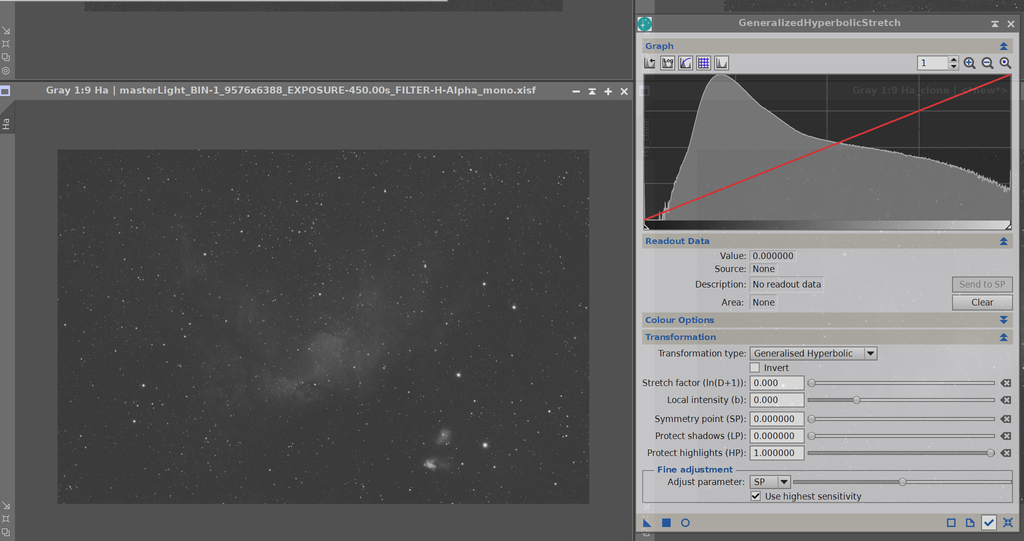

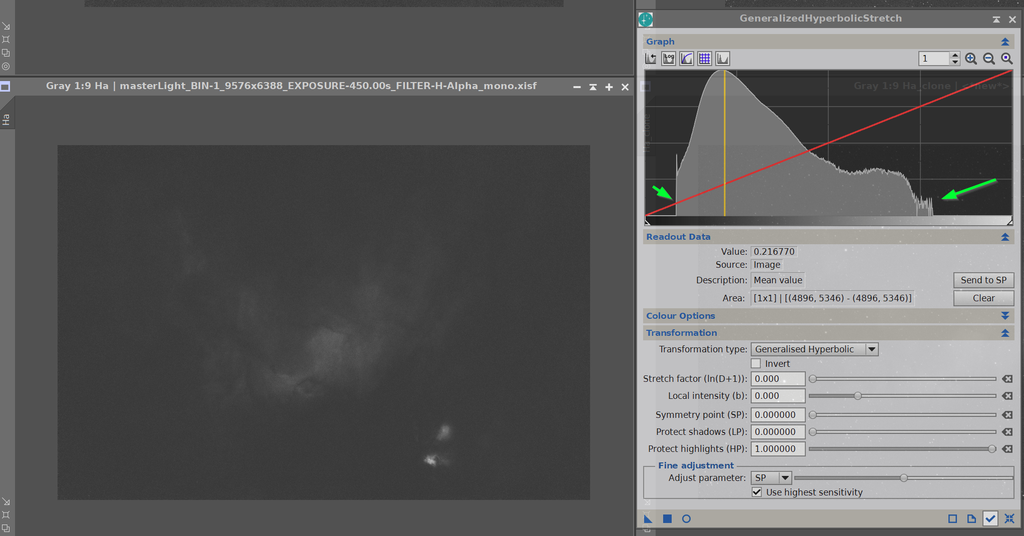

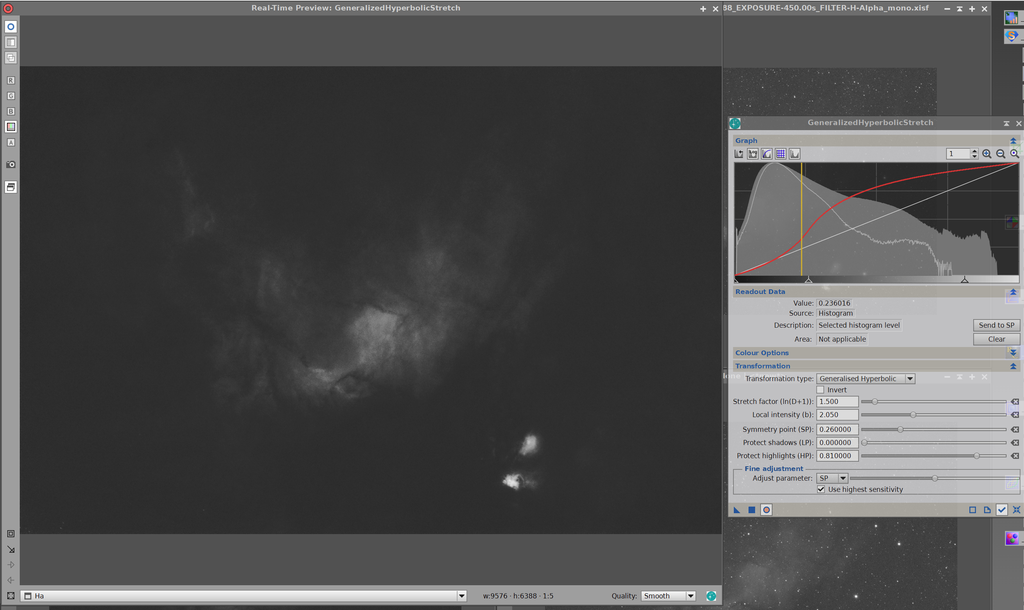

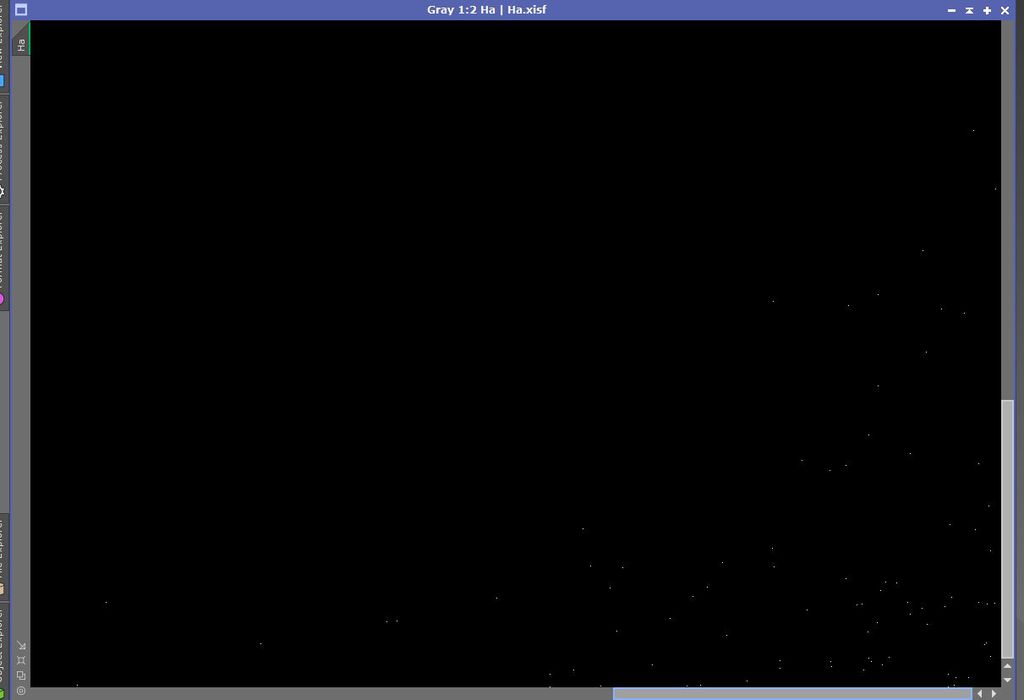

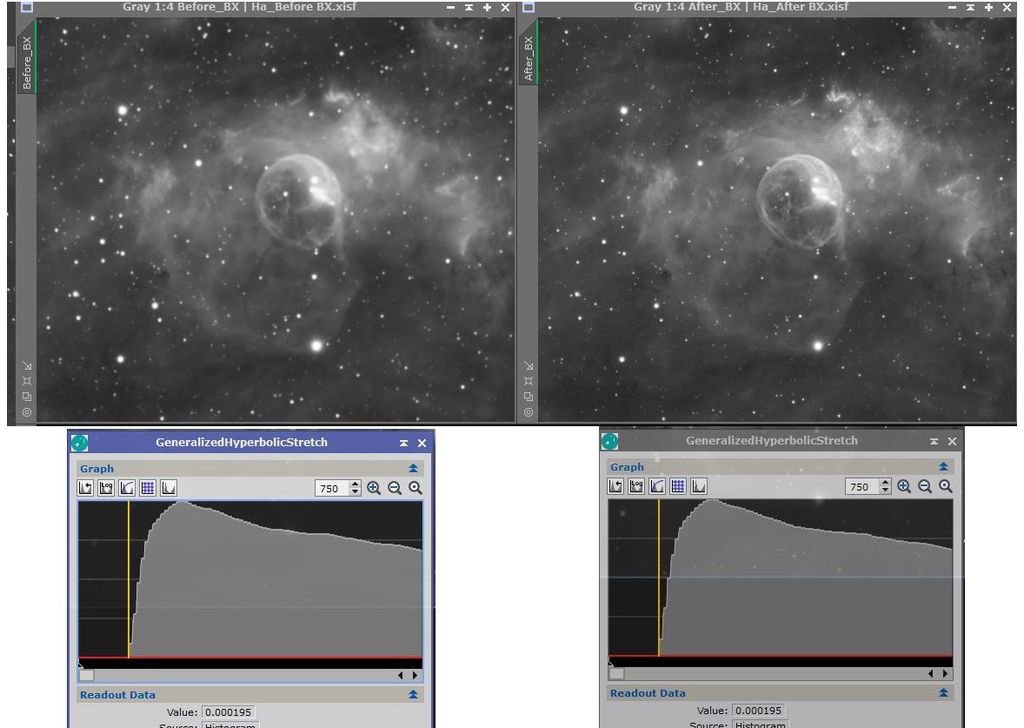

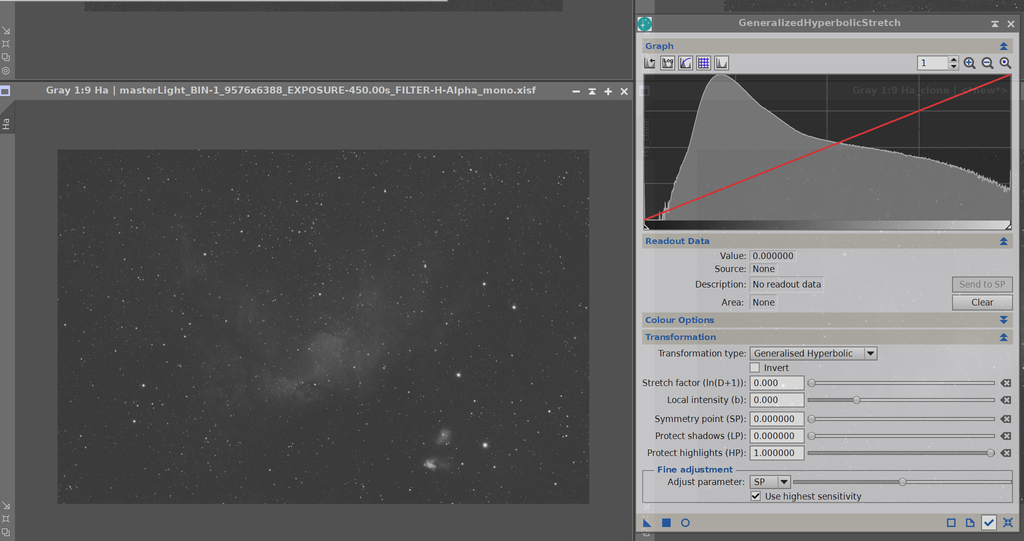

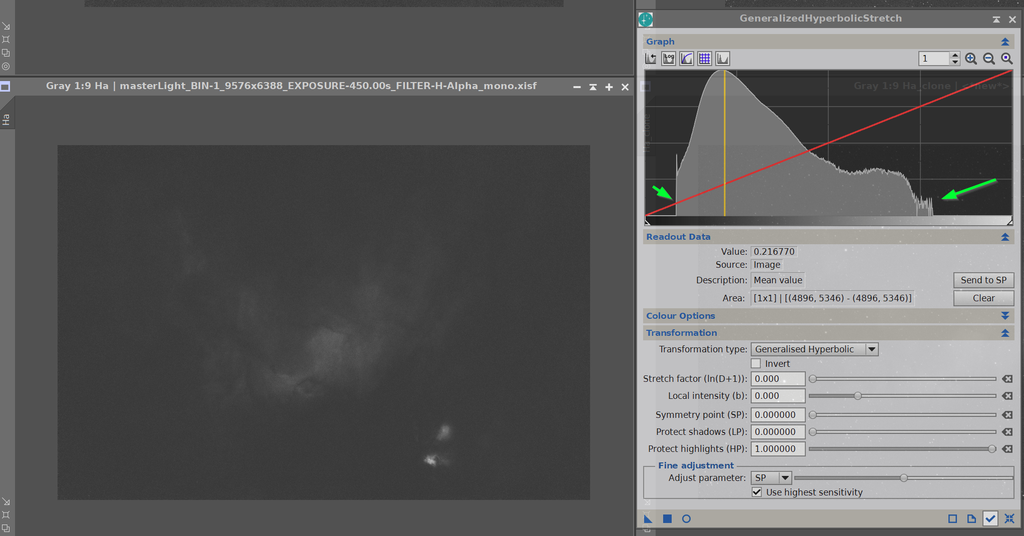

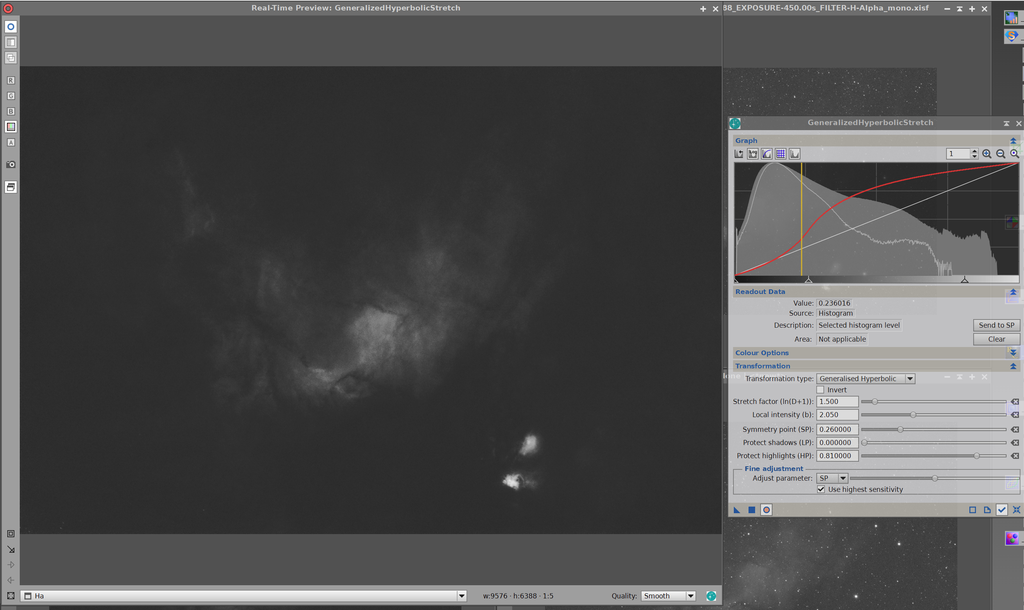

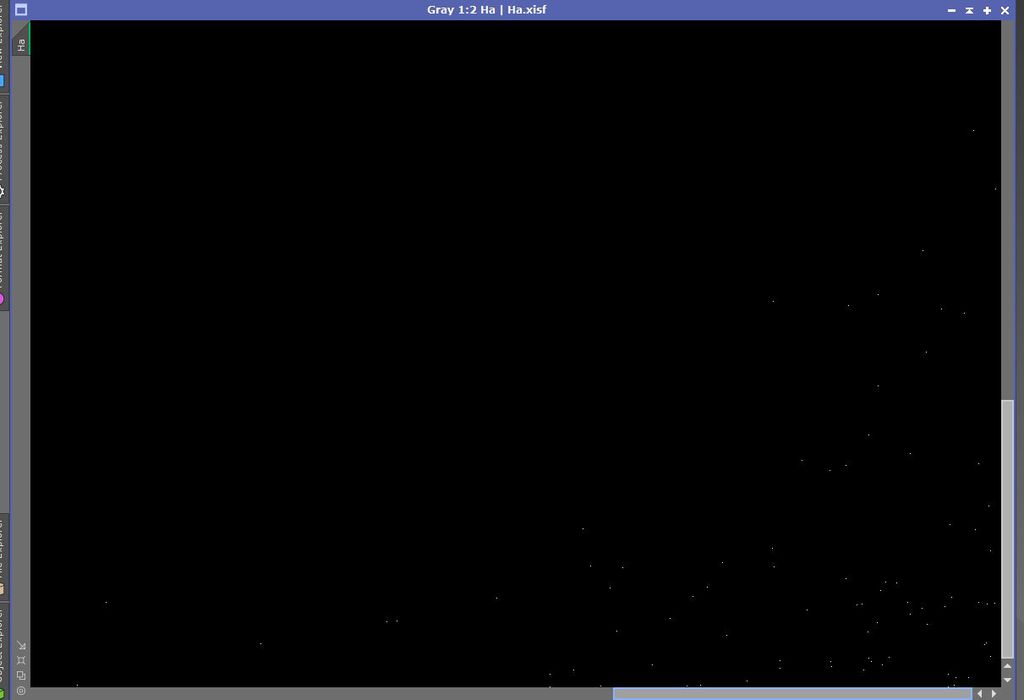

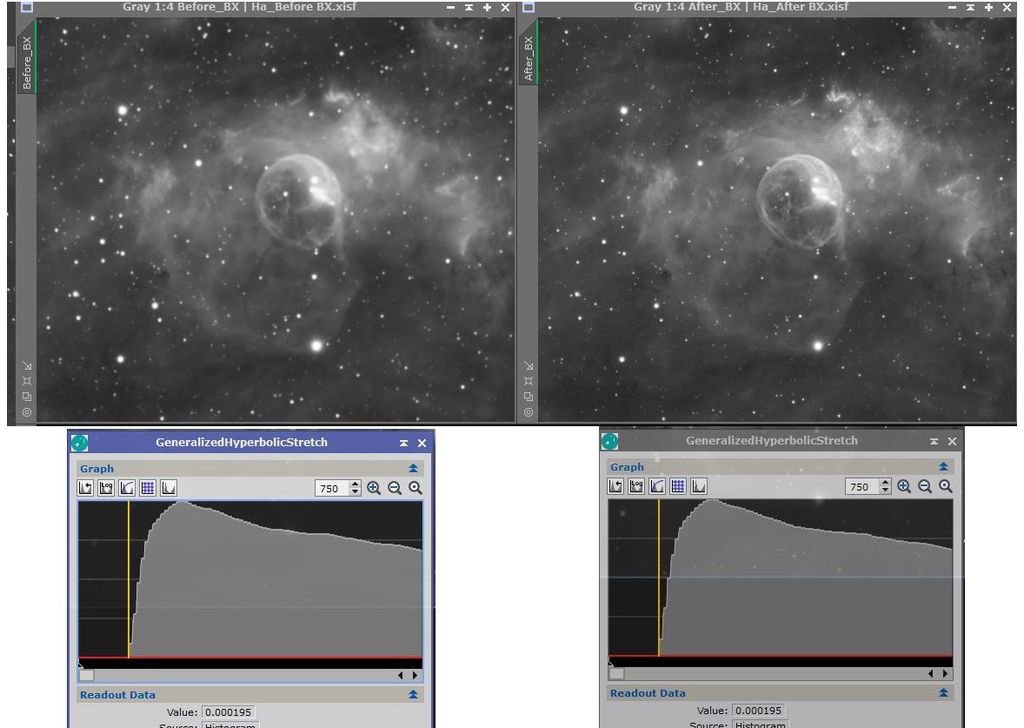

Yeah, the PixelMath is an option, maybe, but it would be a PITA. I have to say that I went and installed the latest version of StarNet++ (v2.10), and it does the same thing as StarX in linear mode. I have not used StarNet++ for a year. I believe it works in non-linear mode, but that defeats the purpose of removing the stars in linear mode. As I mentioned, using the mask in StarX does not work as it does in BlurX. I had better get my long-duration guiding fixed and just use GHS with the stars in. My guiding works fine at 60 s, but the 450 s NB images have an eccentricity of 0.5... better go back somehow to my old settings; I think I have notes.

I think we need to know what the programmer's algorithm is to understand this. This was the explanation: "But in a nutshell, BlurX needs to stretch an image to get the luminance values into an appropriate range of normalized values, it does its magic and then inverts the stretch. The issue is that it can be overly aggressive with its choice of shadows clipping value and so when it inverts the stretch the left tail can be clipped. I can only reproduce the issue when using images with a lower SNR, my higher SNR images don't show clipping."

So he is going to non-linear and then coming back... StarNet++ must be doing the same thing. To get the normalized values he needs to stretch, but when coming back to linear, the shadows/halos are what get clipped. Maybe StarNet++ halos look "worse" because it clips "less" on the return trip. If you think about it, the AI has to be pretty clever to identify star versus background and then replace the star with exactly the same background so as not to leave a distortion. I believe the programmer, Russell, is the same person who wrote some clever scripts to add colored stars to NB images, and he does this by replacing the background of the colored stars using data from a destarred color image...

Looks to me like the chicken needs to lay a very good egg before you can hatch the chicken to lay another egg... a bit of a tortured analogy. Can't help thinking about this:

Below is the image stretched in GHS PRIOR to applying StarX. I thought if I did a nice stretch, maybe it would just leave it in stretched mode and not clip... WRONG... it clipped (second image, after StarX was applied to the stretched image).

StarX applied to above:

It does stretch out, but it is still clipped:
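The developer's explanation can be illustrated with a toy model: clip the shadows at c, apply a midtones stretch, process, then invert the stretch. The stretch BlurX/StarX actually use internally is not public; this sketch assumes a plain histogram-transformation-style stretch (shadows clip c, midtones balance m), and the values of c and m are hypothetical (c just echoes the 0.0038 figure reported later in the thread).

```python
def mtf(m, x):
    """Midtones transfer function with midtones balance m (0 < m < 1)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    return (m - 1.0) * x / ((2.0 * m - 1.0) * x - m)


def stretch(x, c, m):
    """Clip shadows at c, rescale [c, 1] to [0, 1], then apply the MTF."""
    return mtf(m, max(0.0, (x - c) / (1.0 - c)))


def unstretch(y, c, m):
    """Invert stretch(): the MTF with balance 1 - m undoes the MTF with m,
    then the [0, 1] -> [c, 1] rescale is undone."""
    return c + (1.0 - c) * mtf(1.0 - m, y)


c, m = 0.0038, 0.25   # hypothetical clip point and midtones balance
for x in (0.0010, 0.0030, 0.0500):
    print(x, "->", unstretch(stretch(x, c, m), c, m))
```

Pixels above c survive the round trip, but everything below c comes back as exactly c: the left tail is not set to black, it is raised to a floor, and no inverse stretch can recover the original values.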


Mike Cranfield:

David Payne:

Mike Cranfield:

Interesting!

The low end could be restored using PixelMath along the lines of: iif(original < x, original, starless). Where x is found by examining the histogram and finding the cut off point.

Do you know that, Mike, or are you just assuming? Were the pixels just clipped? If so, why don't BlurX or StarNet just restore them? It could be that BlurX assigned the value of these pixels to neighbouring pixels, which, if you added them back in, would amount to a relative over-brightening of the pixels their values were assigned to. Without knowing what happened to these pixel values it is hard to say (that darned superposition thing again). Once again, is BlurX doing deconvolution or not?

I was proposing this PixelMath for SXT rather than BXT. In SXT the primary changes to your image should relate to stars, i.e. brighter elements of the image, so pixel values that are likely to be greater than the threshold you would specify. I agree this approach may well not be so effective for BXT.

I wouldn't know; I have never used SXT. In StarNet, I notice all the brighter single pixels are gone. I just assumed they were taken into the starry image (as stars), but I haven't actually checked. But this level of clipping seems pretty high. I wonder if a clipped midtones transfer stretch is done according to a prescribed value that SXT and BXT want applied. Later, after the star pixels are recognized and lifted off, the inverse stretch might be applied to bring the image back to linear. However, any pixels that were clipped are lost and won't come back with the inverse.

Perhaps BlurX is doing star identification and requires the same stretch to be applied. The best solution would be to retrain the AI using GHS for the star identification purpose. It could still use midtones transfer by setting b=1, but avoid clipping altogether.

But normal deconvolution works really well if you clip the pixels and remove the stars first. Maybe this is the secret sauce to BlurX:

a) get the PSF from the starry linear image;
b) clip the dimmer pixels that create the background "static" noise during R-L (or other) deconvolution, during a stretch for star removal;
c) remove the stars that cause ringing during R-L (or other) deconvolution;
d) perform the deconvolution on the starless image, now free of background noise and ringing;
e) in parallel with d), fix and shrink the stars;
f) add the stars back in;
g) invert the clipping stretch.

Your option to save the clipped pixels and add them back in would then work. The deconvolution would be applied to a noiseless, uniform background with no stars, which would work nicely.

Dave


I sent a detailed message to the developer and invited him to comment here… I do have 4 of his products. I can see a lot of workarounds, but they all look like a lot of work! I do like the push-button approach, if it is valid… I was surprised to see the clipping on both star removal products after stretching with GHS… To me it would seem they have both set some arbitrary noise clipping level on the return… I wonder how you go from a stretched image to a linear image… I remember reading about this once in PixInsight, but there were tons of caveats. Since you do not know exactly what the funny function was that did the stretch (unless it is a default setting), somebody or some code has to make a judgement. Cheers, JY


Hi Jerry,

The equations in GHS are mathematically invertible (not the same as inverting an image), so you can go forward or back.

Take a linear image and do a GHS stretch on it, using the parameters of your choice. This will stretch your image to non-linear. Then set up GHS again with the same parameters, but with the invert check box ticked, and apply this second stretch. This will take your image back to its original (linear) state.

So you can always take your image back to linear if you know the stretch history, by applying the inverted stretches in reverse order.

Using GHS with invert is like having a new stretching utility. SP in inverted mode takes on the opposite role: it now becomes the point where you take away the most contrast from the image. One place I often use this is to reduce the contrast in the very dimmest or very brightest areas (like the "final" S-curve I used to apply to add contrast in the middle). The inverted GHS is also very useful when stretching stars.

Did you forget to do an operation in linear mode after doing some non-linear adjustments? As long as you haven't clipped, you can apply the inverse of the GHS stretch to get back to linear without losing your non-linear work. Then apply your linear processes, and back you can go to non-linear. Easy peasy.
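The reverse-order rule can be sketched with a simplified hyperbolic stretch. This is not the GHS script's code: it assumes SP = 0, no LP/HP protection and b > 0, borrows the names D and b from the GHS parameters (stretch factor and local intensity) only by analogy, and normalizes the curve so that 1 maps to 1.

```python
def _hyperbolic(v, D, b):
    """Base hyperbolic curve for b > 0: T(v) = 1 - (1 + b*D*v)^(-1/b)."""
    return 1.0 - (1.0 + b * D * v) ** (-1.0 / b)


def ghs(x, D, b):
    """Simplified normalized hyperbolic stretch (assumed SP = 0, no LP/HP)."""
    return _hyperbolic(x, D, b) / _hyperbolic(1.0, D, b)


def ghs_inverse(y, D, b):
    """Algebraic inverse of ghs() for the same D and b."""
    t1 = _hyperbolic(1.0, D, b)
    return ((1.0 - y * t1) ** (-b) - 1.0) / (b * D)


x = 0.01                                                        # a faint linear pixel
y = ghs(ghs(x, D=5.0, b=1.0), D=2.0, b=0.5)                     # stretch A, then B
back = ghs_inverse(ghs_inverse(y, D=2.0, b=0.5), D=5.0, b=1.0)  # undo B, then A
print(y, back)
```

Because each step is a smooth one-to-one curve on [0, 1], undoing B then A returns the original value to floating-point precision; this only holds while nothing has been clipped along the way.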

One thing not really explored by camera manufacturers is the ability to manufacture non-linear chips. Considerable research dollars have been spent by the sensor manufacturers on making CMOS chips linear. Linearly responding chips were demanded by astronomers to do quantitative astrophysics, which is why that work has almost been the exclusive domain of CCD chips. However, I feel this is totally unnecessary with GHS. Any non-linear chip can now be calibrated with the hyperbolic equations in GHS, using one or more GHS or inverted GHS equations to transform its non-linear output into linear output.

In fact, as an astrophotographer, my ideal camera is actually non-linear. It would have very high sensitivity at low counts, but the sensitivity would decrease as the well filled up. This would give my camera a HUGE (essentially unlimited) dynamic range. No more playing with gain or binning to compromise on well depth. No more saturated stars: they would always be unsaturated. In addition, who needs an ultra-sensitive sensor for the stars anyway? Much better that my sensitivity be used at the dimmer end. Interested in quantitative analysis? Just apply the calibrated GHS equations to the non-linear ADU values and out will pop linear ADU values, just like a linear camera. Even sensors that purport to be linear can be calibrated using GHS to output truly linear results.

Whenever one explores a new corner of technology, one finds "facts" and "legends". Legends often exist due to tradition as much as anything else. The need for linear sensors is a legend, born out of the need, at the time, for photographic plates to be linear, as there was no ability to transform this analog data. Astrophysicists then demanded that digital cameras be "linear" and adopted CCD chips, which were linear, as their sensor of choice, because they needed to use linear data. When CMOS chips became competitive, they were initially rejected (although more widely adopted by photographers) because they were not linear like the CCDs astronomers were used to. The chip manufacturers then spent a lot of money, based on the legend that the chips needed to be linearly responding, to compete with CCDs. Why did this demand for linear sensors originate? Tradition, in my opinion. It is this tradition that I think is getting in the way of sensor manufacturers providing cheaper and better sensors that we astrophotographers (and, well, photographers in general) can make use of; it is like unnecessarily tying one hand behind your back.

(Yes, I know there was/is an interlude in there where the "gamma" function was the force du jour because CRT monitors responded in a power-law (gamma) way and the gamma function was convenient for data compression, but we have now thrown away our CRT monitors, and extreme data compression is less of an issue, so we can ditch gamma whenever we see fit. Note that gamma stretching is still the "levels" method employed by Photoshop; talk about a legend. At least PixInsight adopted the midtones transfer function, which is both better (in my view) and actually part of the hyperbolic equation family.)
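The claim that the midtones transfer function belongs to the hyperbolic family can be checked numerically. In the simplified normalized form with SP = 0, the b = 1 hyperbolic stretch is (1 + D)x / (1 + Dx), and a little algebra shows it equals the MTF with balance m when D = (1 - 2m)/m. That identity is derived here, not quoted from the GHS documentation, and the function names are hypothetical.

```python
def mtf(m, x):
    """PixInsight-style midtones transfer function, 0 < m < 1, x in [0, 1]."""
    return (m - 1.0) * x / ((2.0 * m - 1.0) * x - m)


def hyperbolic_b1(x, D):
    """Normalized hyperbolic stretch with b = 1 and SP = 0: (1 + D) x / (1 + D x)."""
    return (1.0 + D) * x / (1.0 + D * x)


def mtf_as_hyperbolic(m, x):
    """The same curve expressed through D = (1 - 2m) / m."""
    return hyperbolic_b1(x, (1.0 - 2.0 * m) / m)


worst = max(
    abs(mtf(m, x) - mtf_as_hyperbolic(m, x))
    for m in (0.05, 0.25, 0.5, 0.8)
    for x in (0.0, 0.001, 0.1, 0.5, 1.0)
)
print(worst)
```

For m < 0.5 (a brightening stretch) D is positive; m = 0.5 gives D = 0, the identity; m > 0.5 gives a negative D between -1 and 0, which darkens.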

Calibration of other kinds of sensors is the general rule, while demanding linear response is a luxury one generally doesn't have. In my industry, for example, a big challenge is measuring flow rates in pipes. The best sensors are not those that are linearly responding, but those that are most accurate. To get your answer, however, you have to use calibration. Even the pitot tubes in aircraft for measuring airspeed require calibration to report the airspeed to the pilots. This doesn't make them bad measurements, nor incapable of reporting data that needs to be linear. Knowing your airspeed in an airplane is very important data too.

So here is my wish: that the manufacturers create a sensor that responds logarithmically, i.e. extremely sensitive when the pixel is getting few hits. For those pixels getting more hits, the sensitivity decreases. Then provide a calibration method (I would use GHS, but whatever - even gamma would work) or curve that would allow me to transform this data into linear if I wanted. First one there gets my sale!
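The wish can be made concrete with a toy model. Everything here is hypothetical: a logarithmic response (not GHS; any invertible, calibrated curve would do), a 50,000-electron full well, a 12-bit converter and a curvature constant K. The calibration curve recovers linear counts, while faint signal gets far finer ADU steps than bright signal.

```python
import math

FULL_WELL = 50000.0   # hypothetical full-well capacity, electrons
K = 1000.0            # hypothetical curvature of the log response
ADC_LEVELS = 4095     # hypothetical 12-bit converter


def log_sensor(electrons):
    """Hypothetical log-response pixel: ADU = levels * ln(1 + K*f) / ln(1 + K),
    where f is the filled fraction of the well (clamped at full well)."""
    frac = min(electrons, FULL_WELL) / FULL_WELL
    return round(ADC_LEVELS * math.log1p(K * frac) / math.log1p(K))


def linearize(adu):
    """Calibration curve: exact inverse of the log response, back to electrons."""
    frac = math.expm1(adu / ADC_LEVELS * math.log1p(K)) / K
    return frac * FULL_WELL


for e in (10.0, 40000.0):
    adu = log_sensor(e)
    print(e, adu, linearize(adu))
# Size of one ADU step, in electrons, at the faint and bright ends
print(linearize(1) - linearize(0), linearize(4095) - linearize(4094))
```

With these made-up numbers one ADU is under a tenth of an electron at the faint end but tens of electrons near full well, where photon noise is large anyway; a linear 12-bit converter would spend about 12 electrons per ADU everywhere.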

Sorry about the long post Jerryyyyy, I hope it was interesting - you just opened the door to a topic and I thought I would walk right in.

Dave


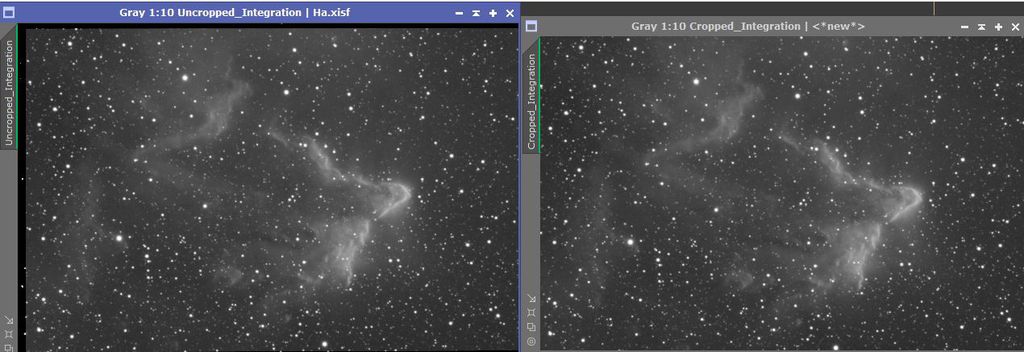

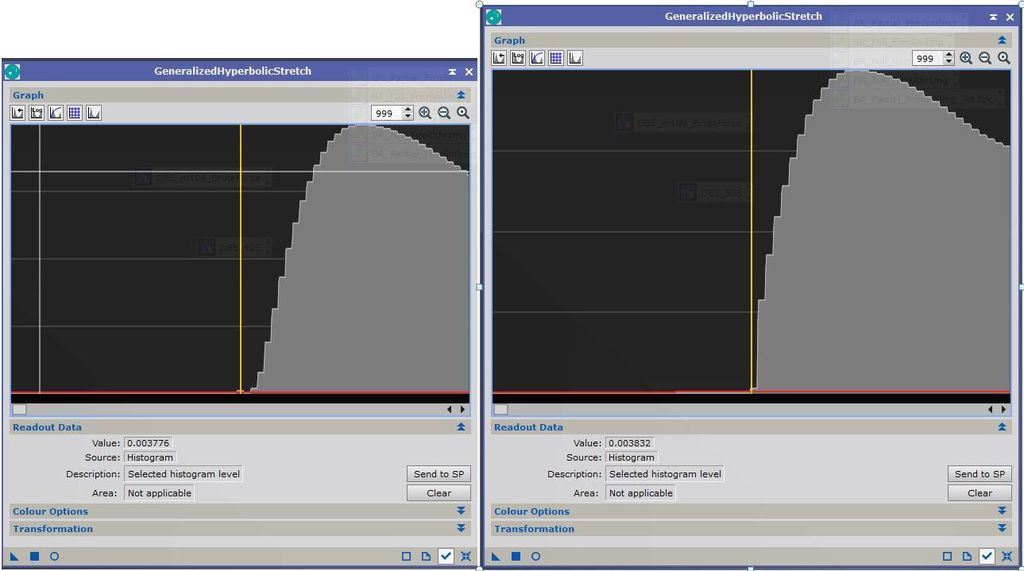

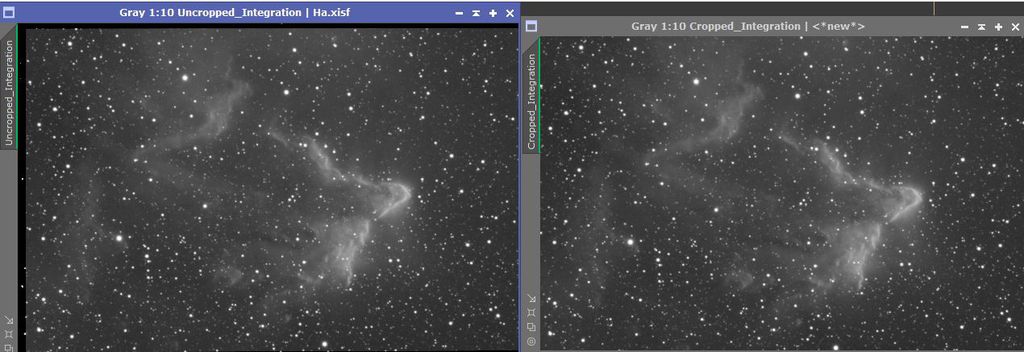

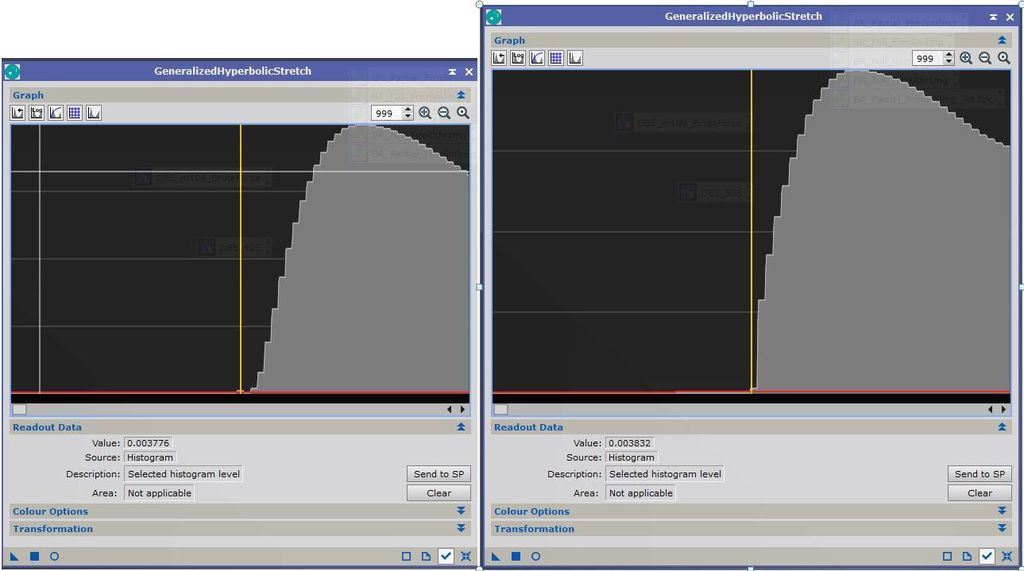

I don't know if this informs, or confuses, but I tried BX and SX on an Ha integration (linear) of IC 63 (so lots of nebulosity at varying brightness). I just happened to grab the uncropped version, and while I saw the same clipping reported here for both BX and SX, the values clipped were less than 0.0038 and the only parts of the image with those values were the nearly black alignment artefacts along the borders, so something I would have cropped anyhow and no useful data lost.

I then cropped those areas off the image and tried BX and SX again. This time, SX *didn't* cause any clipping, but BX did at about the same value as with the artefacts. By 'clipping', though, it doesn't appear to be clipping any values to zero, but instead raised pixel values below 0.0038 to that value.

I also compared non-artefact pixel to pixel values for both SX and BX. There was little change in values throughout the image (not counting star sites) when SX was applied. With BX, there was also little change in the darkest pixels (according to the plots, the 'clipping' effect raised some number of pixels by about 0.00006), but the brightest areas of nebulosity were significantly brighter (of course, star brightness was reduced). FWIW.

Cheers,

Scott
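Scott's check (were low values set to zero, or raised to a floor?) can be scripted. A minimal sketch, assuming both images are available as flat lists of normalized values in matching pixel order; the function name and the tolerance are hypothetical.

```python
def floor_report(before, after, tol=1e-9):
    """Compare matching pixels before and after processing.

    Returns the floor (minimum of the processed image), how many pixels
    started below that floor, how many of those ended exactly at the floor
    (raised) and how many ended at zero (clipped to black)."""
    floor = min(after)
    below = [(b, a) for b, a in zip(before, after) if b < floor - tol]
    raised = sum(1 for _, a in below if abs(a - floor) <= tol)
    zeroed = sum(1 for _, a in below if a <= tol)
    return {"floor": floor, "below": len(below), "raised": raised, "zeroed": zeroed}


before = [0.0010, 0.0030, 0.0050, 0.2000]
after = [0.0038, 0.0038, 0.0050, 0.2500]   # hypothetical post-BX values
print(floor_report(before, after))
```

A result with `raised` equal to `below` and `zeroed` at 0 corresponds to what Scott describes: nothing goes to black, the faint tail is lifted to one value.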


Scott Badger:

I don't know if this informs, or confuses, but I tried BX and SX on an Ha integration (linear) of IC 63 (so lots of nebulosity at varying brightness). I just happened to grab the uncropped version, and while I saw the same clipping reported here for both BX and SX, the values clipped were less than 0.0038 and the only parts of the image with those values were the nearly black alignment artefacts along the borders, so something I would have cropped anyhow and no useful data lost.

I then cropped those areas off the image and tried BX and SX again. This time, SX *didn't* cause any clipping, but BX did at about the same value as with the artefacts. By 'clipping', though, it doesn't appear to be clipping any values to zero, but instead raised pixel values below 0.0038 to that value.

I also compared non-artefact pixel to pixel values for both SX and BX. There was little change in values throughout the image (not counting star sites) when SX was applied. With BX, there was also little change in the darkest pixels (according to the plots, the 'clipping' effect raised some number of pixels by about 0.00006), but the brightest areas of nebulosity were significantly brighter (of course, star brightness was reduced). FWIW.

Cheers,

Scott

Thanks Scott,

A simple step could be added within BlurX or StarX, as per @Mike Cranfield's suggestion, to restore those pixels after the other operations are completed. I wonder if this was left out because it might reveal noise/signal that differs from what is placed behind stars in the StarX or StarNet starless image, or from what exists next to shrunken stars in BlurX.


The more I think about it, the more I am concluding that the easiest way to avoid ringing around stars during deconvolution, or to match background levels during star removal, is to bring the background level up to a certain threshold. Since no pixel can then be dimmer than the background, ringing disappears in BlurX, and the background level behind removed stars can easily be set to match. The result of this operation is responsible for the "dreamy, pure" look of starless images as well as the absolute elimination of ringing.

If you aren't interested in dim stuff or believe it is just noise, then "good to go" on the BlurX & StarX/Starnet. If you want the dim stuff, then you need to work without these tools and do your work with the stars intact or live with deconvolution artifacts. I am now pretty sure that if you want to use BlurX and star removal, you have to say goodbye to the dim stuff or find another way to substitute what is behind the stars.
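The floor idea can be illustrated in one dimension. This is a toy under stated assumptions: an unsharp mask stands in for deconvolution (both can undershoot next to a bright core), the floor is simply set to the known background, and all names and numbers are hypothetical. Nothing here is how BlurX actually works.

```python
import math


def star_profile(n, center, sigma, peak, background):
    """1D Gaussian star sitting on a flat background."""
    return [background + peak * math.exp(-0.5 * ((i - center) / sigma) ** 2)
            for i in range(n)]


def box_blur(profile, radius):
    """Mean over a window of +/- radius pixels (truncated at the edges)."""
    out = []
    for i in range(len(profile)):
        window = profile[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out


def unsharp(profile, radius, amount):
    """Sharpen by adding back amount * (original - blurred); undershoots near stars."""
    blurred = box_blur(profile, radius)
    return [p + amount * (p - b) for p, b in zip(profile, blurred)]


def clamp_to_floor(profile, floor):
    """Raise every value below the floor up to the floor."""
    return [max(p, floor) for p in profile]


star = star_profile(41, center=20, sigma=2.0, peak=0.5, background=0.01)
sharp = unsharp(star, radius=4, amount=1.5)
floored = clamp_to_floor(sharp, floor=0.01)
print(min(sharp), min(floored), sum(floored) - sum(sharp))
```

The sharpened profile dips below the background beside the star (the dark ring); clamping removes the ring but adds signal that was never there, which is the superposition violation mentioned later in the thread.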


David Payne:

If you aren't interested in dim stuff or believe it is just noise, then "good to go" on the BlurX & StarX/Starnet. If you want the dim stuff, then you need to work without these tools and do your work with the stars intact or live with deconvolution artifacts. I am now pretty sure that if you want to use BlurX and star removal, you have to say goodbye to the dim stuff or find another way to substitute what is behind the stars.

I get your point, but for the image I played with, at least, I couldn't find any 'dim stuff' that was lost at the value where clipping occurred. As I mentioned, for the uncropped integration, the only values changed were within the misalignment artefacts, so nothing was lost there; and for the cropped integration (no artefacts), only BX clipped anything, and what was 'clipped' wasn't any 'area' of the image I could identify, but likely a small number of scattered individual pixels, as far as I can tell. And at that, only by a change of around 0.00006. So again, no appreciable loss in observable data. In case it helps, here are the uncropped and cropped images that I started with, and the GHS histograms for the cropped image before and after BX, showing the before and after darkest pixel values.

Cheers,

Scott


Hi,

Was in contact with the BlurX developer today, and he has a revised version coming out, to be followed by the other products.

Just wanted to mention this, as I'm busy today.

JY


Scott, I think we are saying the same thing. In your image, those clipped pixels are unimportant so no worries. I can also see Jerry's point that they were important so there is a problem. I still believe it has to do with the corrections made behind removed or shrunken stars and I am hopeful that the next releases of the product fix the problem for everyone, everywhere in the image. Unintended consequences are to be expected when new sophisticated products come out. Still, good on you and Jerry for noticing.


Mike Cranfield:

I have been thinking about this a bit more and I have come up with "the snowball analogy for deconvolution"!

Think of the light from the star as a snowball and the path as your sensor. When you drop the snowball on the path it spreads out, i.e. a kind of PSF. (OK, I know the PSF is not created by light splatting on the sensor, but bear with me!) Deconvolution can then be thought of as gathering up the scattered parts of the snowball and packing them back into a tight shape again. No extra snow is added or taken away; it is just gathered up. Now a consequence of this will be that the snowball will get higher in the middle, i.e. the peak of the star will get brighter with deconvolution. For a few bright stars, I guess this could mean the cores go over a value of 1.0 and may then get clipped back to 1.0. If this happens, the mean will reduce, and that may be the source of the discrepancy in the mean statistic I have seen.

Those of you in warmer climes may need to substitute some other material for the snow!

CS, Mike

I like your analogy, and indeed if the star gets smaller, it needs to get brighter. The deconvolution process (at least in PixInsight) has dynamic range extension to handle this if some of the stars are near saturation. If the pixels around the stars are over-allocated (i.e. almost all of their brightness is moved to a nearby star), it doesn't make much difference to the star, since they are dim, but their own locations become a dark ring around the star itself; hence the ringing issue that accompanies deconvolution. If a star-removal or other AI program is setting a minimum background brightness for all pixels (thereby "clipping"), then once deconvolution is finished, all the AI has to do is ensure that pixels around a star don't drop below that minimum and voila! No rings. Superposition will be violated, but only as if you had used a "clone stamp" very, very carefully all over the background so that the ring had the same brightness as the background.
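Mike's snowball analogy can be checked with a 1D toy. The "blurred" and "packed" stars below are simply Gaussians of different widths carrying the same total flux (a stand-in, not an actual deconvolution), the flux and widths are hypothetical, and anything above 1.0 is clipped as it would be in a normalized image.

```python
import math


def star_with_flux(n, center, sigma, flux):
    """1D sampled Gaussian star whose samples sum to (very nearly) `flux`."""
    norm = flux / (sigma * math.sqrt(2.0 * math.pi))
    return [norm * math.exp(-0.5 * ((i - center) / sigma) ** 2) for i in range(n)]


def mean(values):
    return sum(values) / len(values)


blurred = star_with_flux(61, center=30, sigma=4.0, flux=6.0)   # spread-out snowball
packed = star_with_flux(61, center=30, sigma=1.0, flux=6.0)    # same snow, packed tighter
clipped = [min(v, 1.0) for v in packed]                        # core values above 1.0 clipped

print(max(blurred), max(packed))
print(mean(blurred), mean(packed), mean(clipped))
```

Packing conserves the total (the means of `blurred` and `packed` agree), but the taller core crosses 1.0, and clipping it is what lowers the mean, as Mike suggests.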


David Payne:

Scott, I think we are saying the same thing. In your image, those clipped pixels are unimportant so no worries. I can also see Jerry's point that they were important so there is a problem. I still believe it has to do with the corrections made behind removed or shrunken stars and I am hopeful that the next releases of the product fix the problem for everyone, everywhere in the image. Unintended consequences are to be expected when new sophisticated products come out. Still, good on you and Jerry for noticing.

I'm curious whether the difference you point to between Jerry and me is a philosophical difference, purist vs pragmatist (and I do respect both), or whether you think BX potentially removes more usable data in some images than others. I think you may be right that setting a minimum background level has something to do with manipulating stars and the artefacts produced, and though I didn't see any usable data lost in my image, I wonder if that's always the case. I think it's interesting that when I ran SX on the uncropped image that had the misalignment artefacts, there was 'clipping', but the same image with those artefacts removed (cropped) isn't clipped. I've also noticed that in other images of mine where there's less signal/more noise, BX doesn't go nearly as far.

Cheers,

Scott


Scott Badger:

David Payne:

Scott, I think we are saying the same thing. In your image, those clipped pixels are unimportant so no worries. I can also see Jerry's point that they were important so there is a problem. I still believe it has to do with the corrections made behind removed or shrunken stars and I am hopeful that the next releases of the product fix the problem for everyone, everywhere in the image. Unintended consequences are to be expected when new sophisticated products come out. Still, good on you and Jerry for noticing.

I'm curious whether the difference you point to between Jerry and me is a philosophical difference, purist vs pragmatist (and I do respect both), or whether you think BX potentially removes more usable data in some images than others. I think you may be right that setting a minimum background level has something to do with manipulating stars and the artefacts produced, and though I didn't see any usable data lost in my image, I wonder if that's always the case. I think it's interesting that when I ran SX on the uncropped image that had the misalignment artefacts, there was 'clipping', but the same image with those artefacts removed (cropped) isn't clipped. I've also noticed that in other images of mine where there's less signal/more noise, BX doesn't go nearly as far.

Cheers,

Scott

Yes, I am back from work, and I have looked closely at the ringing in BlurX versus deconvolution, and BlurX seems to adjust the deringing for the size of the star. It is very good except on rather large stars, where it breaks down a bit, but this has always bothered me with deconvolution, since the models will vary with star size... one PSF does not fit all... you guys know the math better than I. Also, yes, I am rather concerned about those dark pixels, and there are more than a few. That histogram is a frequency count, right? When they are clipped in BlurX, you get a salt-and-pepper result in the darker areas that is reduced when you use the L-mask. I think Russell will fix this. I think it just went past him until it was noticed by others, like myself, who are trying to wring out the detail in H-alpha or other NB images. I can tell you that with H-alpha, when you look for it, it is all over the place in the Milky Way, and it is what helps keep me interested in the Sharpless 2 objects.
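On the question in passing: yes, a histogram is a frequency count, the number of pixels whose values fall into each bin. A small sketch (the bin count, range and pixel values are arbitrary, hypothetical choices) shows what a floor looks like in that count: the bins below the floor empty out and their pixels pile into the bin that holds the floor, giving the truncated left edge seen in the histograms posted here.

```python
def histogram(pixels, bins=10, lo=0.0, hi=0.01):
    """Frequency count: how many pixels fall in each of `bins` equal-width
    bins covering [lo, hi); values outside the range are ignored."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for p in pixels:
        if lo <= p < hi:
            counts[min(int((p - lo) / width), bins - 1)] += 1
    return counts


# Hypothetical faint background: three pixels at the centre of each bin.
pixels = [(k + 0.5) / 1000 for k in range(10)] * 3
floored = [max(p, 0.0038) for p in pixels]   # values below 0.0038 raised to it
print(histogram(pixels))
print(histogram(floored))
```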


The image I was using was an Ha integration…..where I’ve seen a ‘salt and pepper’ look like you describe is when I don’t apply a pedestal when calibrating Ha subs. Maybe clipped pixels you get without a pedestal can be exacerbated by BX? Also, with my Ha image, ‘clipping’ by BX entailed *raising* some pixel values, not clipping any to black.

Cheers,

Scott
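Scott's point about pedestals can be sketched with a simplified calibration: dark subtraction and flat division only, with no bias frames or scaling (real calibration pipelines such as PixInsight's are more involved), and hypothetical pixel values. Where noise makes a light pixel darker than the master dark, the result is negative and, once clamped to [0, 1] as a normalized image would be, ends up at exactly 0, the black "pepper"; a small pedestal keeps those values positive.

```python
def calibrate(light, dark, flat, pedestal=0.0):
    """Simplified calibration: (light - dark) scaled by mean(flat) / flat,
    plus an optional pedestal, clamped to [0, 1]."""
    mean_flat = sum(flat) / len(flat)
    out = []
    for lt, dk, fl in zip(light, dark, flat):
        value = (lt - dk) * mean_flat / fl + pedestal
        out.append(min(max(value, 0.0), 1.0))
    return out


light = [0.0100, 0.0110, 0.0095, 0.0120]   # hypothetical faint Ha pixels
dark = [0.0100] * 4
flat = [0.5] * 4
no_pedestal = calibrate(light, dark, flat)
with_pedestal = calibrate(light, dark, flat, pedestal=0.001)
print(no_pedestal, with_pedestal)
```

Whether this is what produces the salt-and-pepper look after BX is Scott's open question; the sketch only shows how zeros appear without a pedestal.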


Scott Badger:

The image I was using was an Ha integration…..where I’ve seen a ‘salt and pepper’ look like you describe is when I don’t apply a pedestal when calibrating Ha subs. Maybe clipped pixels you get without a pedestal can be exacerbated by BX? Also, with my Ha image, ‘clipping’ by BX entailed *raising* some pixel values, not clipping any to black.

Cheers,

Scott

Hi Scott,

Thanks, and again I think we are saying the same thing: if it doesn't make a difference to you or the image, go ahead, but if it does... you may need to compromise. Among the different types of images, I have found:

a) Those with a clear object and a clear background. In these, the clipping likely has very little pragmatic effect: the histogram peak is generally in darkness and there is no substantial problem with what BlurX does.
b) Those where nebulosity is everywhere in the image and there is very little, if any, dark background. In these you wouldn't want any clipping at all: the histogram peak emerges from the darkness and is not "separated" from the object histogram. This is more the case in Jerry's recent images.

It wouldn't be the first time that GHS either revealed, or was blamed for, a change in an image that another process created. We are in a GHS forum topic after all. (I am not a purist, but a retired engineering/business pragmatist.)

Thanks,

Dave


Again, I agree in principle, but for the very reasons in your b), I chose an image that was both NB and predominantly nebulosity, yet didn't see any significant loss or alteration of data that would affect visible detail.

Cheers,

Scott


Here's another way to visualize what's happening to my image. When I run BX on the uncropped version of my Ha integration, the value where the curve is truncated is 0.003840**, so I used PixelMath to make every pixel in the pre-BX image below that value white, and all other pixels black. This shows exactly which data is 'lost'. Note that by 'lost' I mean a section of the histogram curve: BX doesn't clip those pixels to black, but arbitrarily raises their values. As Dave has said, it appears those values are raised to some minimum background level that BX establishes, i.e. 0.003840 in this case. To see the individual pixels I needed to zoom in a bit, but I'm showing the densest cluster of pixels that BX will alter. What you see here is probably a little more than half of the total number for the image.

** The value of 0.003840 is a little higher than the value shown in the histogram I posted earlier. That's because I noticed a tiny blip just to the left of the curve, representing a bit of alignment artefact I had missed when cropping, so I re-cropped, resulting in a slightly different minimum brightness value for the curve.
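The observation above (values raised to a floor rather than clipped to black) is consistent with a tool that applies a shadows-clipped stretch before processing and inverts that stretch afterwards. The sketch below assumes exactly that mechanism; it is not BlurXTerminator's code. The midtones transfer function stands in for whatever stretch the tool really uses, and the clip point and midtones value are hypothetical (the clip point simply reuses the 0.003840 figure). The mask function mirrors a PixelMath test along the lines of iif(image < 0.003840, 1, 0).

```python
# Hypothetical sketch: a "stretch, process, un-stretch" round trip with
# shadows clipping. Everything below the clip point c is collapsed to 0 by
# the stretch, so the inverse can only send it back to c: a floor, not black.

def mtf(m, x):
    """Midtones transfer function on [0, 1] with midtones balance m."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    return (m - 1.0) * x / ((2.0 * m - 1.0) * x - m)

def stretch(x, c, m):
    x = max(0.0, (x - c) / (1.0 - c))  # shadows clip: anything below c -> 0
    return mtf(m, x)

def unstretch(y, c, m):
    x = mtf(1.0 - m, y)                # MTF with 1 - m inverts MTF with m
    return c + x * (1.0 - c)           # undo the linear rescale

def altered_mask(pixels, floor):
    """1 (white) for pixels below the floor, 0 (black) otherwise."""
    return [1 if p < floor else 0 for p in pixels]

c, m = 0.003840, 0.25  # hypothetical clip point and midtones balance
pixels = [0.0021, 0.0035, 0.0038, 0.0041, 0.0120, 0.0500]
round_trip = [unstretch(stretch(p, c, m), c, m) for p in pixels]
print(altered_mask(pixels, c))
print([round(v, 6) for v in round_trip])
```

Under these assumptions the three pixels flagged by the mask all come back at exactly 0.00384, which would show up as a new spike at the truncation point of the histogram, while the brighter pixels survive the round trip unchanged.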


Hi Scott,

I get your point. Note that the effect on your histogram is a lot less than on Jerry's. Your nebulosity seems to cover about 50% of the image, with your problem pixels definitely on the non-nebulosity side, which you are going to put into the dark anyway. Jerry has an issue with it that I think I can understand: it is definitely in his dimmer stuff, but it is affecting the nebulosity. The nebulosity in his images sometimes covers 100% of the image. Here's what I conclude:

One of the things GHS is good at, is showing the dim nebulosity. It is likely why Jerry noticed it while using GHS.

GHS is always a good thing. It preserves your data: it is invertible, a conformal mapping,…..
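To illustrate what "invertible" buys you, here is a minimal sketch using a normalised arcsinh curve as a stand-in stretch. The GHS equations themselves are not reproduced here, and the stretch strength `b` and the sample values are hypothetical.

```python
import math

# Stand-in invertible stretch (NOT the GHS transformation): a normalised
# arcsinh curve. Every stretched value maps back to exactly one linear
# value, and the ordering of pixel brightnesses is preserved.

def asinh_stretch(x, b):
    """Map linear x in [0, 1] to [0, 1]; larger b gives a stronger stretch."""
    return math.asinh(b * x) / math.asinh(b)

def asinh_unstretch(y, b):
    """Exact inverse of asinh_stretch."""
    return math.sinh(y * math.asinh(b)) / b

b = 500.0  # hypothetical stretch strength
linear = [0.0005, 0.0010, 0.0040, 0.0200, 0.1500, 0.9000]
stretched = [asinh_stretch(x, b) for x in linear]
recovered = [asinh_unstretch(y, b) for y in stretched]

for x, y, r in zip(linear, stretched, recovered):
    print(f"{x:.4f} -> {y:.4f} -> {r:.4f}")
```

By contrast, any step that first clips values below some shadows point collapses them to a single level, and no inverse can separate them again.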

Deconvolution can be good, but it struggles both in the background, where bright noise pixels can accumulate brightness, and around bright stars, where slight mismatches with the PSF can cause dark rings in the surrounding halo. Deconvolution is better if performed starless, to avoid ringing. It can be made better still if the background noise is eliminated, which can be done by setting the background to a constant, minimum value. Then deconvolution is very good.
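The two ideas in that paragraph (deconvolve, and flatten the background to a constant minimum first) can be sketched in a few lines. This is a minimal illustration assuming Richardson-Lucy deconvolution with a known, normalised, symmetric PSF in one dimension; it is not how BlurXTerminator works, and the names `blur`, `richardson_lucy` and the `floor` parameter are hypothetical.

```python
# Minimal 1-D Richardson-Lucy deconvolution, used only as a generic
# stand-in for "deconvolution". Assumptions: known, normalised, symmetric
# PSF; edges handled by clamping indices.

def blur(signal, psf):
    """Weighted sliding sum of `signal` with `psf`, clamping at the edges."""
    half = len(psf) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(psf):
            j = min(max(i + k - half, 0), n - 1)
            acc += w * signal[j]
        out.append(acc)
    return out

def richardson_lucy(observed, psf, iterations=30, floor=None):
    """Return a deconvolved estimate of `observed`.

    If `floor` is given, every sample below it is first replaced by the
    constant `floor`, i.e. the background is flattened before deconvolving.
    """
    data = list(observed)
    if floor is not None:
        data = [max(v, floor) for v in data]
    psf_flipped = psf[::-1]  # adjoint of the blur (same as psf if symmetric)
    estimate = [sum(data) / len(data)] * len(data)  # flat, positive start
    for _ in range(iterations):
        predicted = blur(estimate, psf)
        ratio = [d / p if p > 0 else 0.0 for d, p in zip(data, predicted)]
        correction = blur(ratio, psf_flipped)
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate

# Toy "star": one bright sample on a faint, slightly noisy background.
psf = [0.25, 0.5, 0.25]
truth = [0.01] * 11
truth[5] = 1.0
observed = blur(truth, psf)
noise = [0.003, -0.004, 0.002, -0.003, 0, 0, 0, 0.004, -0.002, 0.003, -0.004]
noisy = [v + dv for v, dv in zip(observed, noise)]

plain = richardson_lucy(noisy, psf)
flattened = richardson_lucy(noisy, psf, floor=0.012)
print(f"observed peak {max(noisy):.3f}")
print(f"RL peak {max(plain):.3f}, with background floor {max(flattened):.3f}")
```

Because Richardson-Lucy is multiplicative, any noise left in the background is treated as real structure and sharpened along with the star; flattening it first removes that material before the iterations start.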

BlurX can be very good, but some pixels (sometimes more, sometimes fewer) seem to be altered for reasons we can only speculate on. Most of the time these pixels are put in the dark, but sometimes we want them displayed. Then BlurX is bad. The developers acknowledge this and are dealing with it.

Artificial intelligence started out as a good thing, but then the terminators showed up…..

Dave


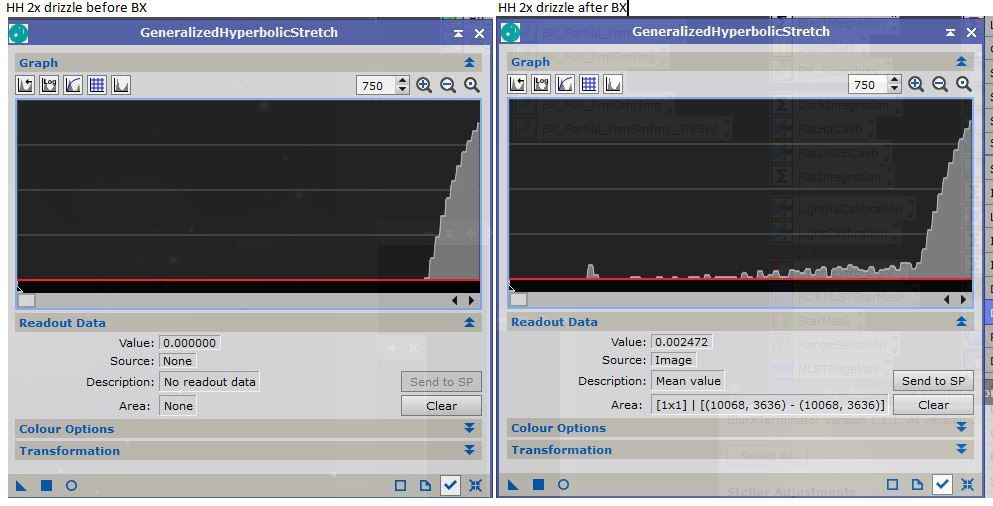

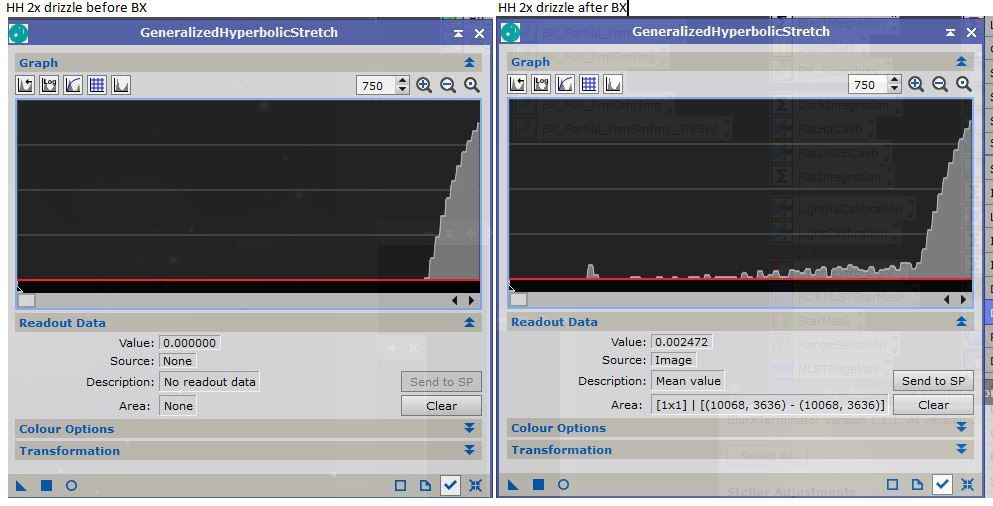

Not to keep beating this moldering horse, but..... I wanted to push what you were saying, Dave, about images with little spread between target and background/noise even further. So I tried BX on an Ha integration of NGC 7635 cropped to show no clear background sky. This time there was no truncation of the histo curve (see below). So it appears that BX is sensitive in some way to what it has to work with.

On another note, RC has said that BX is only trained on images with stars up to 8 px. Where this can be an issue is if you're starting out with large stars, in the 7 to 8 px range as mine are, and 2x drizzle so that they're now 14+ px: in my experience, BX then provides very little sharpening effect. When I tried it on a 2x drizzled Ha integration of the Horsehead Nebula (19 px) there was barely any sharpening, as expected, but this time BX did darken a fair number of pixels according to the GHS histogram (see histo comparison further below). Anyhow, I think this star size limitation isn't something most people using BX know about, and it may be why some see very little effect from BX.

When I look at the histograms Jerry posted in #24, it looks like a lot of pixels are missing because of the height of the curve truncation line, but the curve prior to BX is so steep left of that line that I think it makes it look like more pixels are affected than really are. If you look very closely at the post-BX graph, you can also see a new spike right at that line, which I believe are the pixels that have had their values raised to the minimum background value.

BX AI is nothing like ChatGPT AI, but yeah, I share your termination concerns....... It should also be noted that RC is the first to point out that AI processes aren't reversible, and are therefore limited in their application.

And thanks for pointing out the Invert function in GHS! I was wondering what that was, and that's great!!

Cheers,

Scott


BlurXTerminator 1.1.14 beta is released for testing. It should resolve the histogram clipping. Details: https://www.rc-astro.com/blurxterminator-1-1-14-beta/


For the record, there is a new public beta of BlurXTerminator out and it seems to solve the clipping problem: https://www.rc-astro.com/blurxterminator-1-1-14-beta/

Wish all developers were this responsive. Oops, missed the duplicate above.


This has to be the best script in PixInsight. I tried it last night after seeing this post last week, and I will never use a normal histogram stretch again. Thank you for this script. A big game changer!


Thanks for the great feedback (on behalf of Mike, too) Abdul.


Thanks Abdul - It's always great to hear that GHS is working well.

CS, Mike
