My background is mainly in DSO (deep-sky object) imaging, and there this trend started and died early (1-2 years ago), because it was easy to spot AI "creating" non-existent features and details in images. To prove my point, there is a whole section in the AstroBin IOTD photographer guidelines explaining why Topaz should be avoided. After all, how can you apply a machine-learning algorithm trained on daytime photography to niche astronomical data? The answer is that you cannot, at least not without producing made-up data.

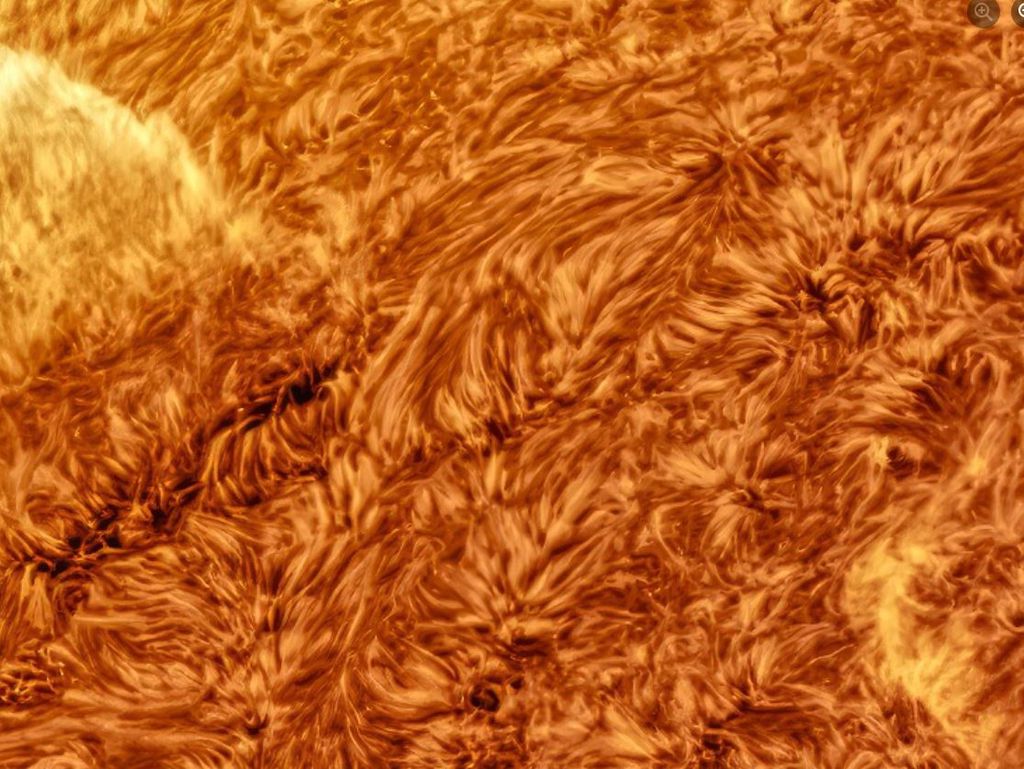

The problem I see is that these images get positive feedback, likes, and awards for what is basically a "painting" of the Sun rather than actual data, because users, staff members, and judges are not trained to tell an actual filament from an imaginary creation.

I have included some examples so you can have a look for yourself.

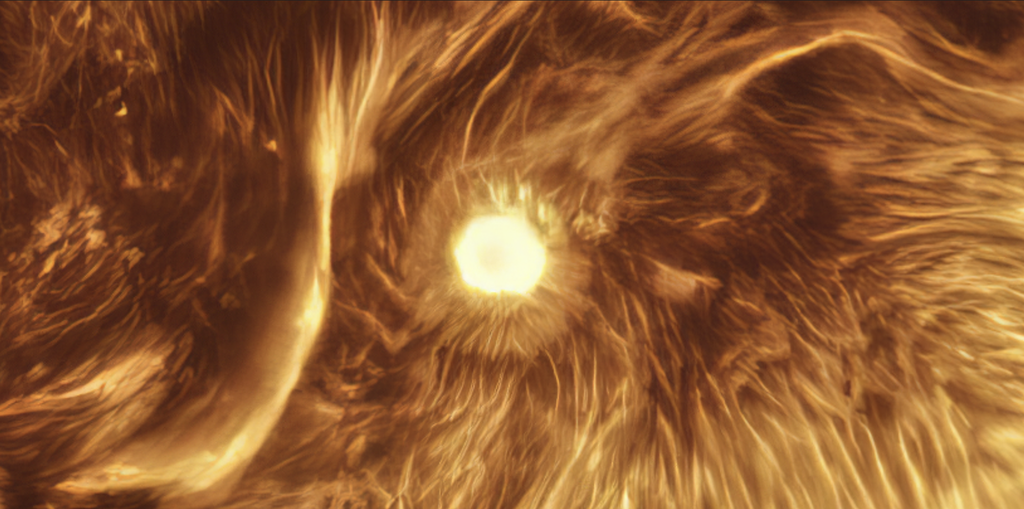

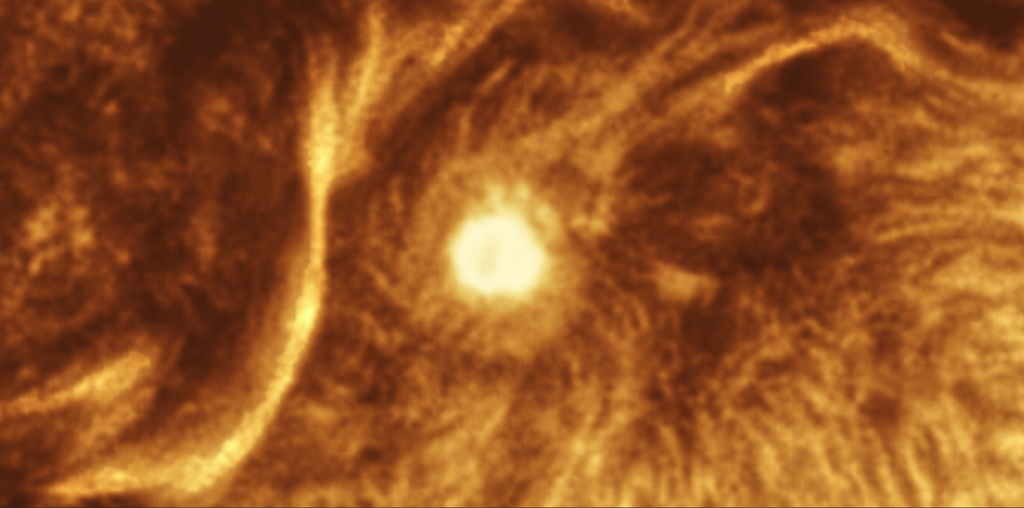

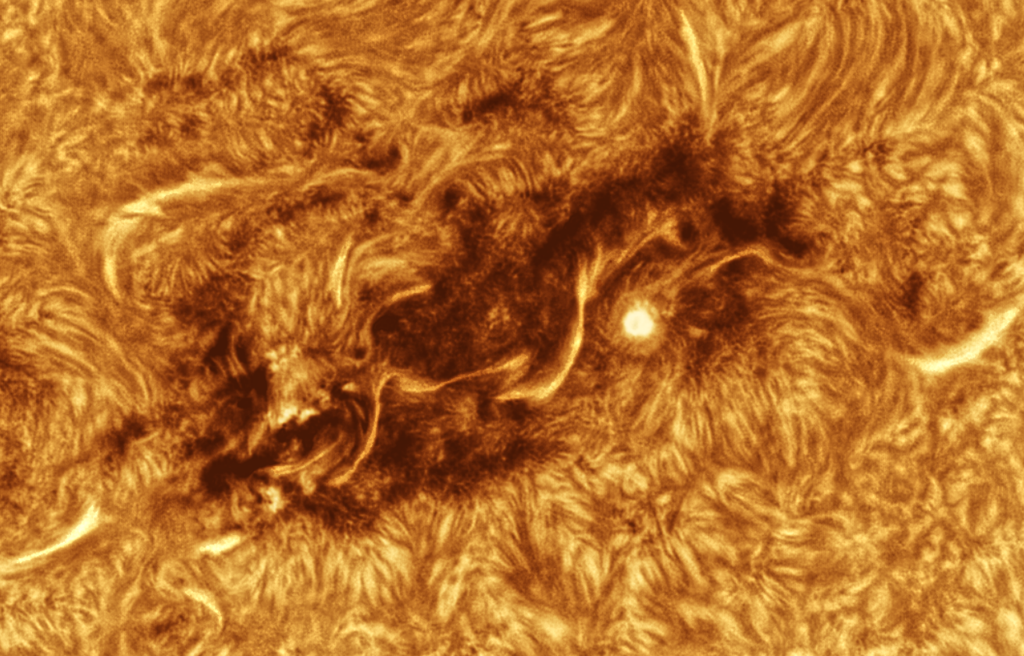

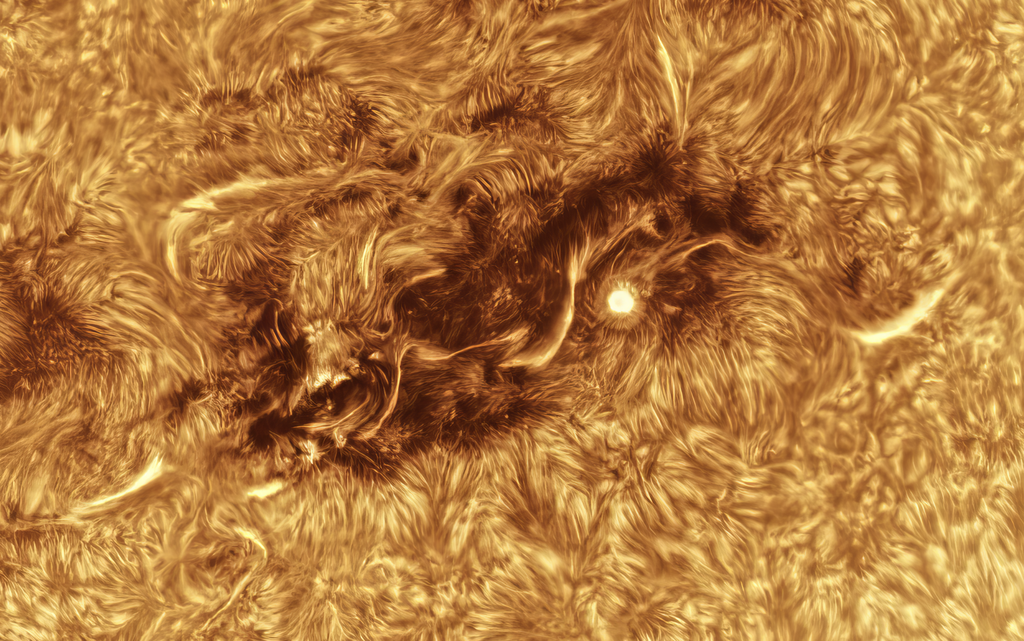

And now for a 1:1 comparison of conventionally processed RAW data and the same data processed in Topaz (guess which one is which):

To quote a user on another forum:

"Wow!! that's quite an incredible transformation! I don't think I would spot that, it looks like an image I would die for. I get very frustrated as I can never get detail like that, and others can. I see lovely detail in my videos and can never see those details in the processed version and this annoys me."

The real question is transparency on the part of the people behind the images: some of us are looking for visual impact, others for actual detail, and others for both.

As long as it is made clear that artificial details may have been added, I'm fine with that; what I would discourage is leaving the manipulation behind the data undisclosed.

I think it should be clear to anyone that "AI" cannot replace or improve real signal when it comes to recovering real structures. The model guesses the missing data and structures based on what it learned from other images. Whether that is a good thing depends on your intentions.

In the case of Topaz processing of high-resolution solar images:

1. There are no knife-sharp edges on the Sun.
2. Chances are Topaz is using something like an image of "cheetah fur" for the "hairy filaments".

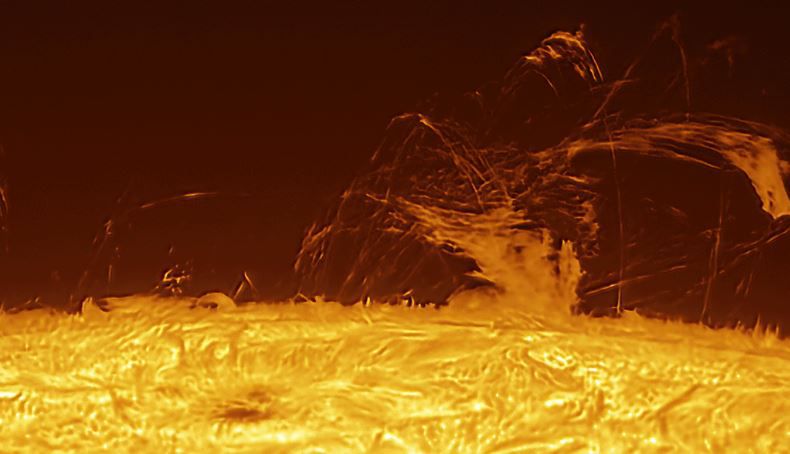

How to spot it: it is quite easy to tell whether what we see in an image is actual detail or an artifact. We just have to browse through images taken with larger apertures (e.g. professional telescopes) and compare the level of fine detail they show.
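One rough way to make the "larger aperture" comparison concrete is the diffraction limit: a telescope of aperture D cannot resolve angles much smaller than about 1.22 λ/D (the Rayleigh criterion). The sketch below is illustrative only. It assumes the H-alpha line (656.28 nm) and a mean Earth-Sun distance of 1 AU, the apertures are arbitrary examples, and in practice seeing usually limits resolution well before diffraction does. Fine structure in a small-aperture image that is much finer than this limit, and that does not appear in large-aperture professional data, is worth treating with suspicion.

```python
import math

# Assumed constants for this sketch: H-alpha line centre and mean Earth-Sun distance.
H_ALPHA_NM = 656.28
AU_KM = 149_597_870.7
RAD_TO_ARCSEC = math.degrees(1) * 3600  # about 206265 arcsec per radian


def rayleigh_limit_arcsec(aperture_mm: float, wavelength_nm: float = H_ALPHA_NM) -> float:
    """Smallest resolvable angle (Rayleigh criterion, 1.22 * lambda / D) in arcseconds."""
    wavelength_m = wavelength_nm * 1e-9
    aperture_m = aperture_mm * 1e-3
    return 1.22 * wavelength_m / aperture_m * RAD_TO_ARCSEC


def arcsec_to_km_on_sun(arcsec: float) -> float:
    """Approximate linear size on the solar disc of an angle seen from 1 AU (small-angle approximation)."""
    return AU_KM * math.radians(arcsec / 3600)


if __name__ == "__main__":
    # Illustrative apertures: typical amateur solar scopes versus a 1 m class telescope.
    for aperture in (60, 100, 150, 1000):
        limit = rayleigh_limit_arcsec(aperture)
        print(f"{aperture:>5} mm: {limit:.2f} arcsec ~ {arcsec_to_km_on_sun(limit):.0f} km on the Sun")
```

For a 100 mm aperture this gives roughly 1.65 arcsec, or about 1,200 km on the solar disc; a 1 m telescope resolves about ten times finer.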

I would be glad if we could discuss it further.

Luka