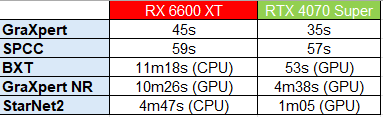

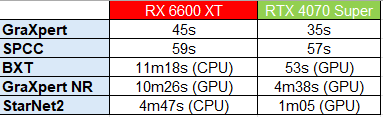

After almost a year running PixInsight "CUDA-less", today I finally got my first Nvidia GPU, an RTX 4070 Super. It's paired with a Ryzen 7 5700X and 32 GB of 3200 MHz RAM. My previous GPU was a beautiful and efficient Radeon RX 6600 XT, which I'll surely miss... but not for PixInsight! For testing I used a huge 2x-drizzled image from my Sony a6100: 11614 x 7832 pixels, ~90 megapixels. These are the results: a 10-fold improvement with BXT, 5-fold with StarNet2 and 2-fold with GraXpert noise reduction. My current workflow with the IMX585 will be much lighter than this; I just wanted to see what it would look like in a demanding scenario. I'm happy!

---

I've got a new GPU on my list… still using my old GTX 1660 Ti! Glad to see that upgrading would benefit my processing workflow.

---

It's well worth it. I set up CUDA at least two years ago and it has kept working through every PixInsight update. You just need to copy the CUDA-enabled tensorflow.dll back over after you update PixInsight. Saves so much time.
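
The copy step after each update can be scripted. This is a minimal Python sketch that assumes a Windows install in C:\Program Files\PixInsight\bin (adjust if yours differs) and a hypothetical folder, C:\PixInsight-CUDA, where you keep your copy of the CUDA-enabled tensorflow.dll. Close PixInsight first, and run it from an elevated prompt because Program Files is write-protected. The DLL installed by the update is kept as tensorflow.dll.cpu-backup (a hypothetical name) so the swap can be undone.

```python
import filecmp
import shutil
from pathlib import Path

# Assumed paths: adjust both to your own system.
PIXINSIGHT_BIN = Path(r"C:\Program Files\PixInsight\bin")
# Hypothetical folder where you keep the CUDA-enabled tensorflow.dll.
CUDA_DLL = Path(r"C:\PixInsight-CUDA\tensorflow.dll")


def restore_cuda_dll(cuda_dll: Path, bin_dir: Path) -> Path:
    """Copy the CUDA-enabled tensorflow.dll into PixInsight's bin directory.

    The tensorflow.dll installed by the update is first saved as
    tensorflow.dll.cpu-backup (hypothetical name). If the CUDA DLL is
    already in place, nothing is changed.
    """
    if not cuda_dll.is_file():
        raise FileNotFoundError(f"CUDA tensorflow.dll not found: {cuda_dll}")
    if not bin_dir.is_dir():
        raise NotADirectoryError(f"PixInsight bin directory not found: {bin_dir}")
    target = bin_dir / "tensorflow.dll"
    if target.exists():
        if filecmp.cmp(target, cuda_dll, shallow=False):
            return target  # already swapped; keep the existing backup intact
        shutil.copy2(target, bin_dir / "tensorflow.dll.cpu-backup")
    shutil.copy2(cuda_dll, target)
    return target
```

After each PixInsight update you would call `restore_cuda_dll(CUDA_DLL, PIXINSIGHT_BIN)`. The content comparison makes a second run harmless: it will not overwrite the CPU backup with the CUDA DLL.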

---

+1!

Just got my new computer, built specifically to work with PixInsight and other image-processing applications, after running on a "CUDA-less" system (to use your word). Wow! What a difference it makes with the AI-driven tools! I'd really like to see PixInsight start leveraging the GPU for some of its intensive image processing (like WBPP integration)!

---

I think running CUDA is a no-brainer if you have a GPU that supports it. It makes all of the star-removal tools (which are absolutely integral to my workflow) run so much faster, along with other processes.

ML

---

I have been using astro-processing software for 5 years now and don't understand why GPU acceleration didn't reach the astro community sooner. PixInsight should also offer the libraries as additional installation packages for Windows and Linux, to make installing CUDA easier for everyone!

With deeper graphical processing you can even extract and examine exoplanets directly and make them visible within transit datasets. Light is geometry into the deepest realm of the atom!

---

CUDA vs CPU?

No-brainer: set up CUDA acceleration!

My StarX runs went from 2 minutes on my Ryzen 9 3900X to about 20-45 seconds, depending on the image, with my RTX 3080 (probably faster).

Daniel V

---

Not a Shakespearean question for me… to CUDA… what else 😊. Approximately 10 times faster with BXT and (not measured) much faster with SXT. As mentioned above, after each new PI release I copy the tensorflow.dll back into the bin directory, and so far it has worked flawlessly. It should be part of the PI installation, if that is permitted. I was hesitant at the beginning as well, and I'm so glad I overcame it!

---

To CUDA or not… is not a question for the light-walleted astroimager.

Save the money and by all means get it! A game changer, for me at least.

---

I will say, however, that moving to a newer CUDA card does not always help hugely. I recently went from an RTX 3060 to an RTX 4080 Super, and although there was an improvement, it was not huge.

It probably wouldn't have been worth it for PI alone, but since I also do some gaming, it was, especially as the upgrade also included more RAM, a Ryzen 9 9950X and faster M.2 drives.

Not cheap (although building your own helps a great deal with the cost).

---

My computer has an Intel Core i7-14700K CPU and a GeForce RTX 4070 graphics card.

With a 12196 x 8136 pixel image (IMX410, drizzle 2, 1.2 GB), BXT is 8.7 times faster with CUDA (46.8 s vs 406 s), NXT is 5.3 times faster (15.3 s vs 81 s) and SXT is 5.9 times faster than on the CPU (41.5 s vs 245 s).
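
The speedup figures are simply CPU time divided by CUDA time. A small Python sketch that reproduces them from the timings in this post:

```python
# CPU and CUDA timings in seconds, as reported above for a 12196 x 8136
# image on an i7-14700K / RTX 4070: tool -> (cpu_s, gpu_s).
TIMINGS = {
    "BXT": (406.0, 46.8),
    "NXT": (81.0, 15.3),
    "SXT": (245.0, 41.5),
}


def speedup(cpu_s: float, gpu_s: float) -> float:
    """How many times faster the GPU run is: CPU time / GPU time."""
    if gpu_s <= 0:
        raise ValueError("GPU time must be positive")
    return cpu_s / gpu_s


for tool, (cpu_s, gpu_s) in TIMINGS.items():
    print(f"{tool}: {speedup(cpu_s, gpu_s):.1f}x faster with CUDA")
```

This prints 8.7x, 5.3x and 5.9x, matching the numbers above. The same ratio applies to the other reports in this thread, e.g. 75 s (1:15) down to 13 s is roughly 5.8x.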

---

I've got a Radeon RX 7900 XTX for gaming and recently added a second video card specifically for CUDA/PixInsight. I ended up getting just an RTX 3060, but even that took star extraction on my 12-core Ryzen 7900 from 1:15 down to 13 seconds. I really wish OpenCL were supported, but for now I'll take the two-card setup!

---

Definitely run CUDA. The difference between GPU and CPU is huge.

---

> Jack Kolesar:
>
> I've got a Radeon 7900XTX for gaming and recently just added a second video card specifically for CUDA/PixInsight. Ended up just getting a 3060 but even that made star extraction on my 12-Core Ryzen 7900 go from 1:15 to 13 seconds. Really wish OpenCL was supported but for now, I'll take the two card setup!

If only my motherboard had a second x16 PCI-e slot!!!

---

> Franco Grimoldi:
>
> > Jack Kolesar:
> >
> > I've got a Radeon 7900XTX for gaming and recently just added a second video card specifically for CUDA/PixInsight. Ended up just getting a 3060 but even that made star extraction on my 12-Core Ryzen 7900 go from 1:15 to 13 seconds. Really wish OpenCL was supported but for now, I'll take the two card setup!
>
> If only my motherboard had a second x16 PCI-e slot!!!

No doubt. I had to get a new case because my mid-tower couldn't fit a card in the last slot! Still worth it!

---

I haven't tried it myself (I use an RTX 3080), but apparently you can use Radeon 7000-series cards for GPU acceleration as well: https://sadrastro.com/pixinsight-gpu-acceleration-for-amd/

---

> Casey Offord:
>
> I haven't tried it myself (I use an RTX3080), but apparently you can use Radeon 7XXX series for GPU acceleration as well.
>
> https://sadrastro.com/pixinsight-gpu-acceleration-for-amd/

That's huge news!!

---

Linus Torvalds on Nvidia marketing 😂 I personally use Nvidia on Linux, and the support has gotten better over the last few years. In my experience, the hardware quality was also better than that of Radeon cards.

---

> Bill McLaughlin:
>
> I will say that going to a newer Cuda does not always help hugely, however. I recently went from a 3060 to a 4080 super and although there was improvement it was not huge.
>
> It would probably not have been worth it for just PI but since I do some gaming as well - it was - since the upgrade also included more RAM and a Ryzen 9950X and faster M.2 drives.
>
> Not cheap (although building your own helps a great deal with cost).

The cards get pricier, beefier and more power-hungry with each new release, but in the end it's also the code that matters: the performance of the software plays a significant role. People expect wonders from their new, rad-looking card and end up disappointed; that's marketing!

---

> Charles Barker:
>
> +1!
>
> Just got my new computer specifically "built-up" to work with Pixinsight and other image processing applications after running on, using your word, "Cuda-less" system. Wow! What a difference it makes with the AI driven toolsets! Would really like to see Pixinsight start leveraging the GPU for some of its intensive image processing (like WBPP integration)!

WBPP isn't a PixInsight process. It's a script.

---

So guys, I don't want to spam you, but here is a detailed listing from the artificial brain of which processes make sense (or not) on GPU vs. CPU, and I do agree with this analysis.

The potential for GPU acceleration in PixInsight is an intriguing topic, especially when considering its computationally intensive tasks such as stacking, quality estimation, and processes involved in workflows like the Weighted Batch Preprocessing (WBPP) script. While PixInsight already benefits from some degree of multi-threading and CPU optimization, leveraging GPU power could greatly enhance performance in several areas. Let’s break it down:

1. GPU Acceleration for AI-Based Tools

PixInsight can already run machine-learning tools like the third-party NoiseXTerminator and StarXTerminator, which use the GPU when GPU acceleration (CUDA on Nvidia cards) is set up. These tools demonstrate how GPUs excel at:

• Matrix and tensor computations: Key for neural networks and machine learning.

• Parallel processing: Thousands of cores handle repetitive computations efficiently.

Thus, for AI-based tools in PixInsight, GPU acceleration is practically a must, as seen with the CUDA-accelerated tools discussed in this thread.

2. GPU Acceleration for Stacking

Stacking involves aligning, normalizing, and integrating multiple frames, which are traditionally CPU-bound processes. GPU acceleration could bring significant advantages here:

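• Image Registration: Aligning frames involves detecting and matching stars, then applying a transformation matrix and interpolating every output pixel; the per-pixel resampling in particular parallelizes well on GPUs.

• Pixel Rejection: Techniques like Winsorized sigma clipping could see performance boosts, since the stack of values at each pixel position can be analyzed independently of all the others.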

• Integration: Averaging, weighting, and normalizing pixel values are ideal tasks for GPUs due to their parallel nature.
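
The rejection and integration steps above can be illustrated with a simplified Winsorized sigma clipping routine for a single pixel position across a stack of registered frames. This is a Python sketch, not PixInsight's ImageIntegration implementation; the parameter names and defaults (k_low, k_high, the 1.5σ winsorization bound and the 1.134 correction factor) are assumptions chosen for illustration. Because each pixel position is handled independently, the same function could run once per pixel in parallel, which is why this step maps well to GPUs.

```python
import statistics


def winsorized_sigma_clip(stack, k_low=4.0, k_high=3.0, winsor=1.5, max_iter=10):
    """Simplified Winsorized sigma clipping for ONE pixel position.

    stack: values of the same pixel across all registered frames.
    Returns (mean of the kept values, list of kept values).
    """
    values = list(stack)
    if len(values) < 3:
        return statistics.fmean(values), values  # too few samples to reject
    center = statistics.median(values)
    sigma = statistics.pstdev(values)
    # Robust spread: clamp (winsorize) values beyond center +/- winsor*sigma,
    # re-measure the spread, and repeat until sigma stops changing.
    for _ in range(max_iter):
        lo, hi = center - winsor * sigma, center + winsor * sigma
        clamped = [min(max(v, lo), hi) for v in values]
        center = statistics.median(clamped)
        new_sigma = 1.134 * statistics.pstdev(clamped)  # winsorization correction
        converged = abs(new_sigma - sigma) <= 1e-6 * sigma
        sigma = new_sigma
        if converged:
            break
    # Reject values outside the (asymmetric) low/high limits, then average.
    kept = [v for v in values if center - k_low * sigma <= v <= center + k_high * sigma]
    return statistics.fmean(kept), kept
```

For example, `winsorized_sigma_clip([10, 11, 9, 10, 10, 12, 9, 11, 100])` rejects the satellite-trail value 100 and returns the mean of the remaining eight values, 10.25.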

However, implementing GPU acceleration for stacking isn’t trivial. Tasks like data I/O (loading/saving FITS files) and the need for double-precision floating-point operations (often less optimized on GPUs) might introduce bottlenecks.

3. Quality Estimation in WBPP

Quality estimation includes measuring parameters like FWHM, SNR, and eccentricity for each frame. These involve:

• Statistical Analysis: GPUs can speed up calculations for large datasets like image histograms or background models.

• Convolution and Filtering: Tasks that analyze star profiles or noise are highly parallelizable and would greatly benefit from GPU acceleration.

For WBPP, GPU acceleration could significantly speed up preprocessing, particularly in quality estimation and frame sorting, where CPUs often become the bottleneck with large datasets.
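
As an illustration of per-frame statistics and frame sorting, here is a minimal Python sketch that estimates each frame's noise with the median absolute deviation (MAD) and sorts frames from cleanest to noisiest. MAD is a simple, swapped-in robust estimator, not what WBPP actually uses (PixInsight has its own, more elaborate estimators), and the frame names and flat pixel lists are hypothetical. Each frame's estimate is independent of the others, which is what makes this kind of work easy to parallelize.

```python
import statistics


def mad_noise(pixels):
    """Robust noise estimate: 1.4826 x median absolute deviation (MAD).

    For Gaussian noise, 1.4826 * MAD approximates the standard deviation,
    while bright stars and hot pixels barely move the median.
    pixels: a list of pixel values (read twice, so not a generator).
    """
    med = statistics.median(pixels)
    return 1.4826 * statistics.median(abs(p - med) for p in pixels)


def rank_frames(frames):
    """Return frame names sorted from lowest to highest estimated noise.

    frames: dict mapping a (hypothetical) frame name to a flat list of
    pixel values. Each frame is evaluated independently of the others.
    """
    return sorted(frames, key=lambda name: mad_noise(frames[name]))
```

Note how a single hot pixel (the 200 in a frame like `[10, 11, 9, 10, 10, 200]`) hardly affects the estimate, so that frame still ranks as the cleaner one.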

4. Challenges of GPU Acceleration in PixInsight

Despite its advantages, there are notable challenges to integrating GPU acceleration:

• Development Effort: GPU programming is more complex, requiring optimized CUDA, OpenCL, or Vulkan implementations.

• Double-Precision Support: Many astrophotography processes rely on high precision, which is less efficient on consumer-grade GPUs.

• Data Transfer Overhead: Moving data between the CPU and GPU can sometimes negate performance gains, especially for smaller operations.

• Broad Compatibility: GPU acceleration must work across diverse hardware setups, including AMD, NVIDIA, and integrated GPUs.
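
To make the data-transfer point concrete, here is a back-of-the-envelope model in Python: offloading pays off only if upload + GPU compute + download beats the CPU time. The 25 GB/s default is an assumed effective host-to-GPU bandwidth (PCIe 4.0 x16 is roughly 32 GB/s in theory), the output is assumed to be the same size as the input, and the model ignores overlapping transfers with computation.

```python
def gpu_total_seconds(n_bytes, gpu_compute_s, bandwidth=25e9):
    """Upload + compute + download, with no overlap (a deliberately simple model).

    bandwidth: assumed effective host<->GPU rate in bytes per second.
    Assumes the result is the same size as the input.
    """
    transfer_s = 2 * n_bytes / bandwidth  # one upload plus one download
    return transfer_s + gpu_compute_s


def offload_pays_off(n_bytes, cpu_s, gpu_compute_s, bandwidth=25e9):
    """True if the GPU path, including transfers, beats the CPU time."""
    return gpu_total_seconds(n_bytes, gpu_compute_s, bandwidth) < cpu_s
```

Treating the BXT timings reported earlier in this thread (about 1.2 GB, 406 s on CPU, 46.8 s with CUDA) as pure compute, the transfers add only about a tenth of a second, so offloading clearly pays off. For a hypothetical 16 MB frame and an operation taking 1 ms on the CPU and 0.5 ms on the GPU, the ~1.3 ms round trip alone makes the GPU path slower.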

5. Future Possibilities

GPU acceleration in PixInsight could revolutionize workflows if implemented thoughtfully. Here’s where it would shine:

• Dynamic Alignment & Stacking: Real-time previews and faster alignment during preprocessing.

• Wavelet-Based Transformations: Tasks like deconvolution, noise reduction, and multiscale processing could become faster.

• Real-Time Feedback in Workflows: The WBPP script could dynamically adjust frame weights with GPU-accelerated quality estimation, improving automation.
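
For the wavelet item, a generic 1D version of the à trous ("with holes") wavelet transform shows why multiscale processing suits GPUs: each scale is a convolution in which every output sample is computed independently. The B3-spline kernel and mirrored borders are common choices assumed here; this is not a description of PixInsight's implementation, and real images would use the 2D separable version.

```python
def _mirror(idx, n):
    """Reflect an out-of-range index back into [0, n-1] (edge not repeated)."""
    if n == 1:
        return 0
    period = 2 * (n - 1)
    idx %= period
    return period - idx if idx >= n else idx


def atrous_1d(signal, n_scales=3):
    """Split a 1D signal into n_scales detail layers plus a smooth residual.

    Scale j smooths the previous layer with the B3-spline kernel
    [1, 4, 6, 4, 1] / 16 whose taps are spaced 2**j samples apart
    (the "holes"). Detail layer j = previous smooth - new smooth.
    """
    kernel = (1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16)
    n = len(signal)
    current = [float(v) for v in signal]
    layers = []
    for j in range(n_scales):
        step = 2 ** j
        # Every output sample depends only on `current`, so this loop
        # could run fully in parallel (one GPU thread per sample).
        smooth = [
            sum(w * current[_mirror(i + (k - 2) * step, n)] for k, w in enumerate(kernel))
            for i in range(n)
        ]
        layers.append([c - s for c, s in zip(current, smooth)])
        current = smooth
    return layers, current
```

Adding the detail layers and the residual back together reproduces the input (up to rounding), which is what lets multiscale tools process individual scales and then recombine them.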

Conclusion

For GPU acceleration in PixInsight, AI-based tools like noise reduction already make excellent use of GPUs, and expanding this to WBPP’s stacking and quality estimation processes could be transformative. However, the balance between development complexity and performance gains must be carefully considered.

---

> Monty Chandler:
>
> > Charles Barker:
> >
> > +1!
> >
> > Just got my new computer specifically "built-up" to work with Pixinsight and other image processing applications after running on, using your word, "Cuda-less" system. Wow! What a difference it makes with the AI driven toolsets! Would really like to see Pixinsight start leveraging the GPU for some of its intensive image processing (like WBPP integration)!
>
> WBPP isn't a PixInsight process. It's a script.

Lol… Please don't get distracted from the very brief point I was making with the simple phrase "WBPP integration". I was just giving an example: the image integration phase is the most machine-driven and time-consuming of the astroimage workflow steps that WBPP encapsulates. I mentioned WBPP because it seems to be the most popular way to streamline astroimage preprocessing, so most people can relate. My point is that if PixInsight is going to start leveraging GPU capabilities, please start with the most time-consuming process and then, maybe, just maybe, extend to the other time-consuming processes, like those called by the WBPP SCRIPT…

I am extremely happy that folks like those on this thread are investing in hardware and software that improve what this astroimaging hobby and/or profession has to offer, and I in no way want to distract from the "giddiness." But I don't think it is out of bounds to raise an "ask" for PixInsight to pick up the ball, start leveraging what GPU processing has to offer, and keep improving our experience. Or maybe I am just one of the few who don't like hitting the WBPP SCRIPT "Run" button and waiting, literally, days for all of those processes to finish.

---

> So guys I don't want to spam you but here is a detailed listing from the artificial brain of processes make sense /nonsense on GPU /CPU
>
> and I do agree to this analysis.

No, please... by all means! This is exactly what I was trying to push: keep encouraging PixInsight to really start digging into improving the user experience of their product, instead of telling us how hard it will be. Don't get me wrong, I certainly appreciate that others have made strides on the post-processing side, and that PixInsight generously invited them in and includes them. Having NoiseXTerminator go from several minutes to less than one is happily welcomed. I also admire, and benefit from, everything PixInsight brings to the table! However, if you really want to improve the PixInsight experience, and the opportunity is there, make it so I don't have to wait 24 hours for a result that I may or may not like...

---

> So guys I don't want to spam you but here is a detailed listing from the artificial brain of processes make sense /nonsense on GPU /CPU

Obvious GPU-accelerated post.

---

> David Foust:
>
> I've got a new GPU on my list... Still using my old 1660ti!  Glad to see it would be of some benefit to upgrade in my processing workflow.

I also use a 533 MC-Pro, and my 1660 Ti blazes through the XTerminators: I call 25 seconds including initialization, and 12 seconds for subsequent runs, blazing. For the $$, I can't justify a new GPU until I upgrade my 4-year-old desktop. Of course, I am using a 533; I'm sure the difference would be greater with a larger-sensor camera.
