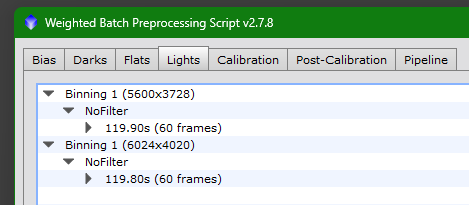

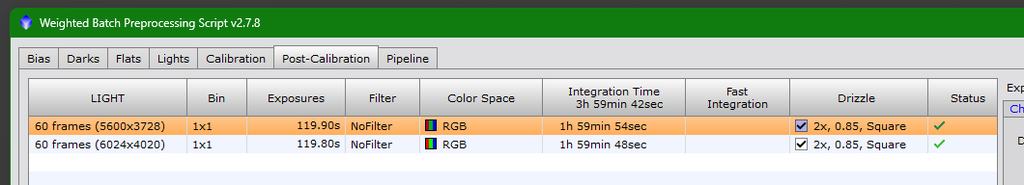

I am imaging with a dual setup of two DSLRs that produce images of different resolution. The preprocessing is done in WBPP. Here are some screenshots showing my configuration:

Post-Calibration tab:

WBPP creates a separate integration for each camera. As far as I can tell, there is no way to tell WBPP directly to create a single integration of all images combined.

My current solution is the following: let WBPP only calibrate all the frames, then run LocalNormalization, ImageIntegration and DrizzleIntegration on all frames manually, using a manually created LN reference image. This works and has the benefit of integrating everything as one image set, but it also has some problems:

First, it involves some manual work that is spread out over a long period of time. That is inconvenient and error-prone, since it is easy to miss some small details when you do other stuff while PI is working on the last task.

Secondly, this leads to a lot of pixel rejection around the stars, since the two setups have slightly different PSFs (due to the lenses used and the rescaling of one image group).

However, I have thought of another way to handle this situation that I would like to discuss here. Maybe it is slightly better and could also benefit others in the same situation.

My new idea is to let WBPP run as shown in the settings above and perform every step except for DrizzleIntegration (i.e. calibration, StarAlignment, LocalNormalization and ImageIntegration). This already takes care of most of the manual work.

After this, I would perform a DrizzleIntegration of all images combined in one step.

This method has the following positive aspects:

First, it is less error-prone. The only step that I would have to do manually is the final DrizzleIntegration.

Secondly, since the pixel rejection is done for each set of images independently during ImageIntegration, fewer pixels around stars would be rejected due to differing PSFs.
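To make the rejection argument concrete, here is a minimal Python sketch, not PixInsight's actual rejection algorithm. It simulates one pixel on the flank of a star as seen by two groups of frames with different PSF widths and counts how many samples a simple MAD-based kappa-sigma clip rejects, once for the combined stack and once per group. All numbers (frame counts, FWHM values, noise level, equal peak brightness in both groups) and the function names are assumptions chosen purely for illustration.

```python
import math
import random
import statistics

def star_flux(distance_px, fwhm_px, peak=1000.0, background=100.0):
    """Pixel value at a given distance from the center of a Gaussian star."""
    sigma = fwhm_px / 2.3548  # convert FWHM to Gaussian sigma
    return background + peak * math.exp(-0.5 * (distance_px / sigma) ** 2)

def count_rejected(values, kappa=3.0, max_iter=10):
    """Count samples rejected by an iterative clip around the median.

    The spread is estimated from the MAD, which is a simplification and
    not the exact estimator used by ImageIntegration.
    """
    kept = list(values)
    for _ in range(max_iter):
        if len(kept) < 3:
            break
        center = statistics.median(kept)
        mad = statistics.median([abs(v - center) for v in kept])
        sigma = 1.4826 * mad  # MAD scaled to a Gaussian sigma
        if sigma == 0:
            break
        survivors = [v for v in kept if abs(v - center) <= kappa * sigma]
        if len(survivors) == len(kept):
            break
        kept = survivors
    return len(values) - len(kept)

def simulate(n_a=40, n_b=8, fwhm_a=3.0, fwhm_b=4.5,
             distance_px=2.5, noise=5.0, seed=0):
    """Return (rejections in combined stack, rejections summed per group)."""
    rng = random.Random(seed)
    group_a = [star_flux(distance_px, fwhm_a) + rng.gauss(0, noise)
               for _ in range(n_a)]
    group_b = [star_flux(distance_px, fwhm_b) + rng.gauss(0, noise)
               for _ in range(n_b)]
    combined = count_rejected(group_a + group_b)
    per_group = count_rejected(group_a) + count_rejected(group_b)
    return combined, per_group

combined, per_group = simulate()
print(f"rejected in combined stack: {combined}")
print(f"rejected with per-group stacks: {per_group}")
```

With these assumed numbers the smaller group sits far from the median of the combined stack on the star flank, so its samples are clipped as outliers, while each group on its own is self-consistent. If both groups had a similar number of frames, the outcome would depend much more on the rejection algorithm and its settings.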

However, there is one problem I see here: LocalNormalization normalizes the two image sets against different reference images, which (in most cases) will have different brightness. So in practice, the final DrizzleIntegration would combine images with different brightness levels, which I assume to be a major drawback.
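As a rough illustration of the brightness mismatch, the following Python sketch assumes that the two per-camera results differ only by a global scale and offset, which is a simplification: LocalNormalization works locally, so the real difference may vary across the frame. It estimates that scale and offset by least squares from matching pixel samples. The sample values and the function name `fit_linear_match` are hypothetical, and this is not something WBPP does for you.

```python
def fit_linear_match(source, target):
    """Least-squares scale and offset so that scale * source + offset ~ target."""
    n = len(source)
    mean_s = sum(source) / n
    mean_t = sum(target) / n
    var_s = sum((s - mean_s) ** 2 for s in source)
    cov = sum((s - mean_s) * (t - mean_t) for s, t in zip(source, target))
    scale = cov / var_s
    offset = mean_t - scale * mean_s
    return scale, offset

# Hypothetical samples of the same sky positions from camera A's master.
master_a = [0.10, 0.12, 0.15, 0.20, 0.30, 0.50]
# Camera B's master, as if normalized to a brighter reference: 1.25 * A + 0.02.
master_b = [1.25 * v + 0.02 for v in master_a]

# Map B onto A's brightness scale.
scale, offset = fit_linear_match(master_b, master_a)
matched_b = [scale * v + offset for v in master_b]

print(f"scale = {scale:.4f}, offset = {offset:.4f}")
```

If the fitted scale is far from 1 or the offset far from 0, the two sets sit on noticeably different brightness scales, and combining them directly would mix those levels.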

From a purely theoretical standpoint, what are your thoughts on both workflows? Do you think the second workflow would offer some benefit?

CS Gerrit