I know that many AP’ers here use PixInsight (PI) for image processing. I just want to pass on something I recently learned about PI performance that you may or may not already know.

This might be old news to most of you, but it was a very cool find for me.

PI users know that PI consumes a lot of system resources. High-end CPUs, plenty of RAM, big video cards, and fast storage drives all contribute to PI performance.

PI performance is so important that many folks spend thousands of dollars on a fully loaded, high-performance PC (laptop or desktop) just to run PI.

Understandably so. Some PI processes and scripts take anywhere from many minutes to hours to run.

My son and I built my desktop about 2 ½ years ago on a budget.

I kept the budget at $700, but I really only needed the “box”, since I already had a monitor, keyboard, and a 500GB SSD (SATA).

Over the past few years I have added a few things, including a ton of storage (10.5TB total) and a nice 2TB NVMe M.2 (Gen3) boot drive. I am still using the same 4 ½-year-old CPU.

This past Christmas I received a really nice gift (that I asked for): 64GB of DDR4-3200 RAM. It replaced the 32GB of DDR4-2667 RAM I had before, so it is both faster and double the capacity.

Many of you PI users are familiar with PI's built-in performance benchmark. It is a script called PixInsight Benchmark, nested under Benchmarks.

I don’t have a benchmark from before the RAM upgrade; I believe it gave a modest boost (+6-8%). However, I have learned a little bit about PI swap files. It seems that even though PI thrives on lots of fast RAM, it also depends heavily on swap files, which seems odd when plenty of free RAM is available.

Swap files are temporary files that PI writes to disk (separate from RAM) to hold data while it processes. By default, PI creates its swap files in a single directory on the local C: drive.

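I read several posts on CN (Cloudy Nights) about increasing the number of swap directories. It seems strange, but if you do an internet search, you will find many references to using multiple swap directories to increase PI performance. As I understand it, PI can spread its swap reads and writes across all of the listed directories in parallel, which is why adding directories can help.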

It helps to put the swap files on a fast drive (NVMe or SSD), but it also seems that using multiple swap directories can improve PI performance even on conventional spinning drives.

Below are some tests I ran recently, and the results surprised me.

Yes, spending lots of money on better, faster hardware can certainly increase PI performance, but for free you can set up extra swap directories that can increase performance too, sometimes significantly.

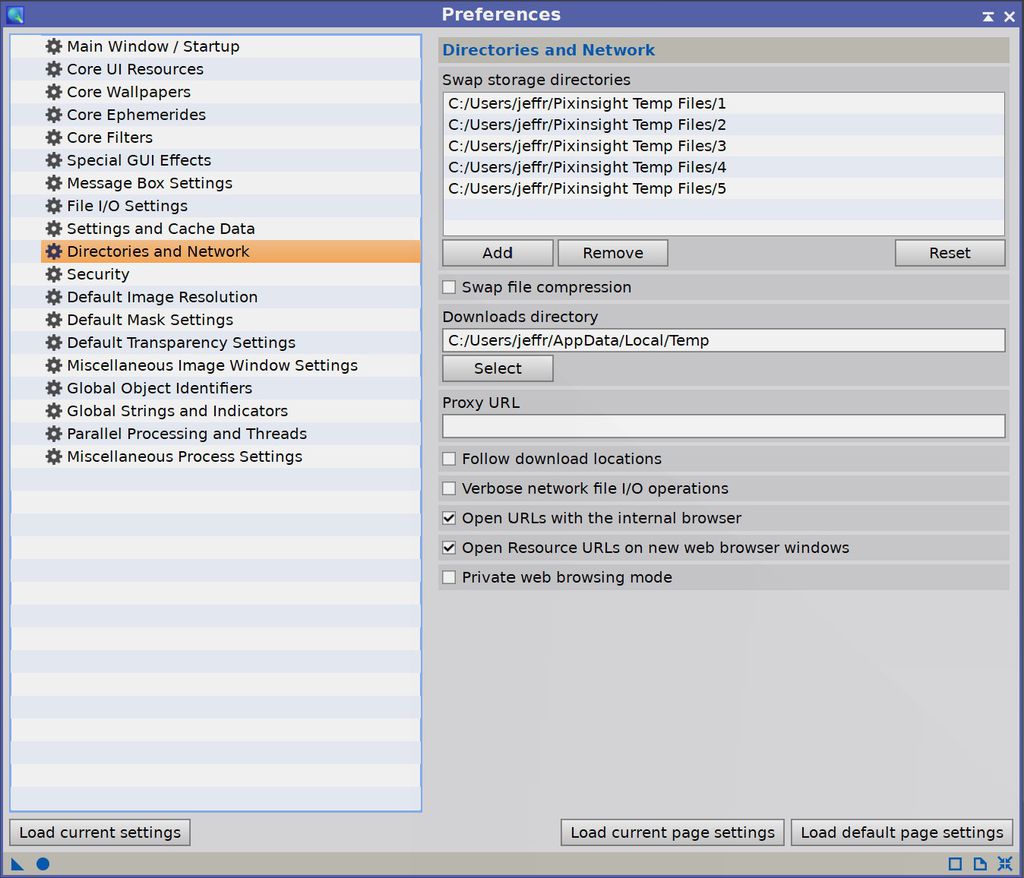

Under the EDIT drop-down menu, you will find GLOBAL PREFERENCES. In the Preferences window, select Directories and Network. There you can see the default swap storage directories.

This was my PI benchmark score using the single default swap directory: 12456.

I created six folders on my C: drive (NVMe) to use as swap storage directories (I really don't think it matters where you create the folders).
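If you'd rather not make the folders by hand, here is a minimal Python sketch that creates them. The base path `C:\PISwap` and the folder names `swap1`, `swap2`, ... are my own hypothetical choices (PI shouldn't care what they are called), and you still have to add each folder yourself in Directories and Network.

```python
from pathlib import Path


def create_swap_dirs(base: str, count: int = 6) -> list[Path]:
    """Create `count` empty folders named swap1..swapN under `base`.

    The naming scheme is hypothetical; any empty folders should do.
    """
    if count < 1:
        raise ValueError("count must be at least 1")
    dirs = []
    for i in range(1, count + 1):
        d = Path(base) / f"swap{i}"
        # parents=True creates the base folder if needed;
        # exist_ok=True makes the script safe to re-run.
        d.mkdir(parents=True, exist_ok=True)
        dirs.append(d)
    return dirs
```

For example, `for d in create_swap_dirs(r"C:\PISwap", 6): print(d)` prints the six paths so you can paste them into the swap storage list one at a time.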

Here is the benchmark with no change except using six swap storage directories: 15635, about a 26% increase over the single default swap directory.

Next, I created a 16GB RAM drive (out of my 64GB of total RAM) using free software and dedicated it to the swap directories.

The benchmark went up to 16236, a 30% increase over the single default swap directory. A very significant increase for free!
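For anyone checking the math, the percentages are simply the change in score relative to the single-directory baseline. A quick Python sketch (the function name `percent_gain` is just my own label):

```python
def percent_gain(baseline: float, new: float) -> float:
    """Percent change of `new` relative to `baseline`."""
    return (new - baseline) / baseline * 100.0


# Benchmark scores from the tests above.
default_single = 12456  # one default swap directory
nvme_six = 15635        # six swap directories on the NVMe C: drive
ramdrive = 16236        # swap directories on the 16GB RAM drive

print(f"six NVMe dirs: {percent_gain(default_single, nvme_six):.1f}%")  # 25.5%
print(f"RAM drive:     {percent_gain(default_single, ramdrive):.1f}%")  # 30.3%
```

So the NVMe result is 25.5% (rounded to 26%), and the RAM drive result is 30.3%.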

I have since tested both the NVMe drive and the RAM drive with four swap directories, and performance was basically the same as with six. So some fine-tuning can help.

Your mileage may vary depending on your hardware.

Hope that this is helpful to someone!

Jim

![7f346eb861f93c4ac794b20c017bf150[1].png](https://cdn.astrobin.com/ckeditor-thumbs/125749/2023/8f3522fd-b74f-4b1d-b3c9-f50ca1b8f1d3.png)