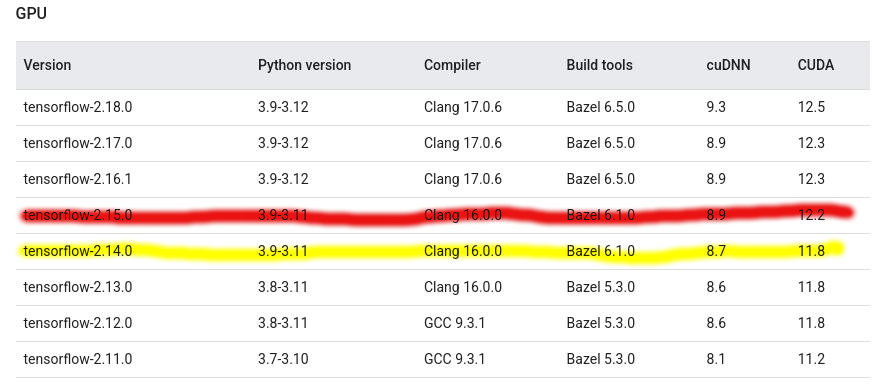

A newer version can't be used because it introduces CUDA 12.2 and loses compatibility with the TensorFlow API used in PixInsight.

What do we do if we want to enable this support in Debian 12? In short:

- remove all NVIDIA and CUDA drivers delivered by the distribution

- install a proper NVIDIA driver manually

- build TensorFlow 2.14.1 with CUDA support

- replace PixInsight libraries

Unfortunately, this process is not so easy:

1) Download the correct NVIDIA drivers and libraries:

- CUDA 11.8.0 with driver 520.61.05 -> cuda_11.8.0_520.61.05_linux.run

- cuDNN 8.7.0.84 -> cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz

- NCCL 2.21.5 -> nccl_2.21.5-1+cuda11.0_x86_64.txz

- TensorRT 8.6.1.6 -> TensorRT-8.6.1.6.Linux.x86_64-gnu.cuda-11.8.tar.gz
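Before going further it can help to confirm that all four files from the list above are really in the download directory. The following is only a minimal sketch: the function name `check_downloads` and its directory argument are my own illustration, not part of any NVIDIA tooling.

```shell
# Report which of the four installer files listed above are present in a
# directory. `check_downloads` is a hypothetical helper name.
check_downloads() {
    dir="${1:-.}"
    missing=0
    for f in \
        cuda_11.8.0_520.61.05_linux.run \
        cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz \
        nccl_2.21.5-1+cuda11.0_x86_64.txz \
        TensorRT-8.6.1.6.Linux.x86_64-gnu.cuda-11.8.tar.gz
    do
        if [ -f "$dir/$f" ]; then
            echo "ok       $f"
        else
            echo "MISSING  $f"
            missing=$((missing + 1))
        fi
    done
    # Non-zero exit status when at least one file is absent.
    [ "$missing" -eq 0 ]
}

# Usage (the directory is an example):
#   check_downloads ~/Downloads
```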

2) Remove all NVIDIA and CUDA packages with `apt` or `aptitude`
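To see what would have to go, one option is to filter the installed-package list. This is a sketch under assumptions: the name patterns (`nvidia`, `cuda`, `cudnn`, `nccl`) are my guess at what the distribution packages are called, so review the output by hand before purging anything. The helper name `filter_nvidia_packages` is hypothetical.

```shell
# Read a `dpkg -l`-style listing on stdin and print the names of installed
# packages (status "ii") that look NVIDIA- or CUDA-related.
# The name patterns are an assumption; adjust them to your system.
filter_nvidia_packages() {
    awk '$1 == "ii" && $2 ~ /nvidia|cuda|cudnn|nccl/ { print $2 }'
}

# Usage on a real system:
#   dpkg -l | filter_nvidia_packages                            # review first
#   dpkg -l | filter_nvidia_packages | xargs -r sudo apt -y purge
```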

3) Install CUDA 11.8.0 with driver 520.61.05:

3a) Install the packages needed to build kernel modules, as root or with sudo:

apt -y install linux-headers-$(uname -r) build-essential libglvnd-dev pkg-config

3b) Make sure `gcc` reports `gcc version 11.3.0 (Debian 11.3.0-12)`; if it does not, follow this topic.
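A quick way to check the compiler before running the installer is sketched below. It only tests that the major version is 11; the helper name `is_gcc_major_11` is hypothetical.

```shell
# Succeed if the given version string has major version 11.
is_gcc_major_11() {
    case "$1" in
        11|11.*) return 0 ;;
        *)       return 1 ;;
    esac
}

# Ask the default gcc for its version, if gcc is installed at all.
if command -v gcc >/dev/null 2>&1; then
    v=$(gcc -dumpfullversion 2>/dev/null || gcc -dumpversion)
    if is_gcc_major_11 "$v"; then
        echo "gcc $v: OK"
    else
        echo "gcc $v: not 11.x, switch compilers before continuing"
    fi
fi
```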

3c) Disable default drivers as root or with sudo:

- open and edit:

nano /etc/modprobe.d/blacklist-nouveau.conf

put the following there and save the file:

blacklist nouveau

options nouveau modeset=0

update the kernel initramfs:

update-initramfs -u

restart the computer; it should boot in text mode, which is OK because no graphics driver is installed yet
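The same blacklist file can be written without an editor. This is a sketch: the function name `write_nouveau_blacklist` and its optional directory argument are hypothetical and exist only so the function can be tried in a scratch directory first.

```shell
# Write the two nouveau blacklist lines from step 3c into
# blacklist-nouveau.conf. The directory defaults to /etc/modprobe.d;
# pass another one to try it safely.
write_nouveau_blacklist() {
    conf_dir="${1:-/etc/modprobe.d}"
    printf 'blacklist nouveau\noptions nouveau modeset=0\n' \
        > "$conf_dir/blacklist-nouveau.conf"
}

# Usage as root:
#   write_nouveau_blacklist
#   update-initramfs -u
```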

3d) Driver and CUDA installation:

- log in to the root account and go to the directory containing `cuda_11.8.0_520.61.05_linux.run`

- run the installer; remember to install both CUDA and the NVIDIA drivers

sh cuda_11.8.0_520.61.05_linux.run

- create configuration file `/etc/X11/xorg.conf.d/nvidia_as_primary.conf` with:

Section "OutputClass"

Identifier "nvidia"

MatchDriver "nvidia-drm"

Driver "nvidia"

Option "PrimaryGPU" "yes"

EndSection

- reboot the computer; the X server should now start
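After the reboot, a small sanity check can tell whether the driver tools and the CUDA compiler are reachable. This is a sketch: `report_tool` is a hypothetical helper, and the `/usr/local/cuda/bin/nvcc` location assumes the installer's default prefix.

```shell
# Print whether a command is available, and where.
report_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1: found at $(command -v "$1")"
    else
        echo "$1: not found"
        return 1
    fi
}

# nvidia-smi comes with the driver; nvcc comes with the CUDA toolkit.
report_tool nvidia-smi || true
report_tool nvcc || report_tool /usr/local/cuda/bin/nvcc || true

# With a working driver, this prints the GPU name and driver version:
#   nvidia-smi --query-gpu=name,driver_version --format=csv,noheader
```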

3e) Install the cuDNN, NCCL and TensorRT libraries

- Extract files from: `cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz`:

--> cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/ --> /usr/local/include

--> cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/ --> /usr/local/lib/

- Extract files from `nccl_2.21.5-1+cuda11.0_x86_64.txz`:

--> nccl_2.21.5-1+cuda11.0_x86_64/include/ --> /usr/local/include

--> nccl_2.21.5-1+cuda11.0_x86_64/lib/ --> /usr/local/lib

- TensorRT-8.6.1.6.Linux.x86_64-gnu.cuda-11.8.tar.gz -> extract into `/opt/nvidia/`:

sudo mkdir -p /opt/nvidia/ && sudo tar -xzf TensorRT-8.6.1.6.Linux.x86_64-gnu.cuda-11.8.tar.gz -C /opt/nvidia/

-- then go to the directory `/opt/nvidia/TensorRT-8.6.1.6/`

-- run the following commands:

ln -sf /opt/nvidia/TensorRT-8.6.1.6 /opt/nvidia/tensorrt

echo "/opt/nvidia/TensorRT-8.6.1.6/lib" > /etc/ld.so.conf.d/989_tensorrt-8-6.conf

ldconfig

- reboot the computer
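The cuDNN and NCCL copy steps in 3e can be sketched as one small function. The helper name `copy_include_and_lib` and its optional prefix argument are hypothetical. I assume `cp -a` is what you want here, so that the library symlinks inside the archives (such as `libcudnn.so` pointing at a versioned file) are preserved.

```shell
# Copy the include/ and lib/ trees of an extracted archive into a prefix
# (default /usr/local), keeping symlinks and permissions.
copy_include_and_lib() {
    src="$1"
    prefix="${2:-/usr/local}"
    mkdir -p "$prefix/include" "$prefix/lib"
    # "dir/." copies the directory contents, not the directory itself.
    cp -a "$src/include/." "$prefix/include/"
    cp -a "$src/lib/." "$prefix/lib/"
}

# Usage as root, from the download directory:
#   tar -xf cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz
#   copy_include_and_lib cudnn-linux-x86_64-8.7.0.84_cuda11-archive
#   tar -xf nccl_2.21.5-1+cuda11.0_x86_64.txz
#   copy_include_and_lib nccl_2.21.5-1+cuda11.0_x86_64
#   ldconfig
```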

4) Build TensorFlow

4a) Extract TensorFlow 2.14.1:

tar -xf tensorflow-2.14.1.tar.gz

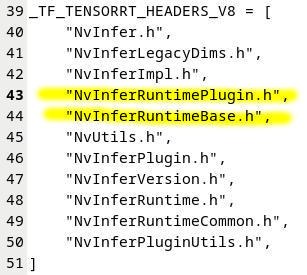

4b) Edit `tensorflow-2.14.1/third_party/tensorrt/tensorrt_configure.bzl` and add these two lines:

"NvInferRuntimePlugin.h",

"NvInferRuntimeBase.h",

4c) Set TMP directory:

export TMP=/tmp

4d) Run the TensorFlow configuration in `tensorflow-2.14.1` and answer the questions:

- run configuration:

./configure

- these are the answers for my GPU, `NVIDIA RTX A4000 Laptop GPU`; in many cases yours will be the same:

Do you wish to build TensorFlow with ROCm support? [y/N]: N

Do you wish to build TensorFlow with CUDA support? [y/N]: y

Do you wish to build TensorFlow with TensorRT support? [y/N]: y

[Leave empty to default to CUDA 11]: 11

[Leave empty to default to cuDNN 2]: 8

[Leave empty to default to TensorRT 6]: 8

NCCL version you want to use. [Leave empty to use http://github.com/nvidia/nccl]: 2

Please specify the comma-separated list of base paths to look for CUDA libraries and headers. [Leave empty to use the default]:

/usr/include/,/usr/lib/x86_64-linux-gnu/,/usr/local/,/usr/local/include/,/usr/local/lib/,/usr/local/cuda/,/usr/local/cuda/include/,/opt/nvidia/tensorrt/,/opt/nvidia/tensorrt/include/

Please note that each additional compute capability significantly increases your build time and binary size, and that TensorFlow only supports compute capabilities >= 3.5 [Default is: 8.6]: 8.6

Do you want to use clang as CUDA compiler? [Y/n]: n

Please specify which gcc should be used by nvcc as the host compiler. [Default is /usr/bin/gcc]:

Would you like to interactively configure ./WORKSPACE for Android builds? [y/N]: N
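The answers above can also be supplied as environment variables, which TensorFlow's `./configure` reads instead of prompting. This is a sketch: I have not verified every variable against 2.14.1, so treat the names as an assumption. Any question not covered here (for example the Python location) will still be asked interactively.

```shell
# Pre-answer the ./configure questions from step 4d via environment
# variables. The variable names are assumed to match TensorFlow's configure
# script; the values mirror the answers given above.
export TF_NEED_ROCM=0
export TF_NEED_CUDA=1
export TF_NEED_TENSORRT=1
export TF_CUDA_VERSION=11
export TF_CUDNN_VERSION=8
export TF_TENSORRT_VERSION=8
export TF_NCCL_VERSION=2
export TF_CUDA_PATHS="/usr/include/,/usr/lib/x86_64-linux-gnu/,/usr/local/,/usr/local/include/,/usr/local/lib/,/usr/local/cuda/,/usr/local/cuda/include/,/opt/nvidia/tensorrt/,/opt/nvidia/tensorrt/include/"
export TF_CUDA_COMPUTE_CAPABILITIES=8.6   # RTX A4000 Laptop GPU
export TF_CUDA_CLANG=0                    # use nvcc with gcc, not clang
export GCC_HOST_COMPILER_PATH=/usr/bin/gcc
export TF_SET_ANDROID_WORKSPACE=0

# Then, inside tensorflow-2.14.1:
#   ./configure
```

For a different GPU, change `TF_CUDA_COMPUTE_CAPABILITIES` to the value that `./configure` offers as its default.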

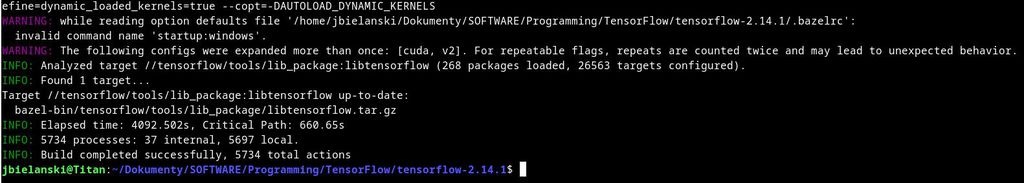

4e) Start the compilation and keep your fingers crossed (this stage takes a lot of time...):

bazel build --config=opt --config=cuda --config=v2 --config=nonccl --verbose_failures //tensorflow/tools/lib_package:libtensorflow

when it's done, the results of the compilation will be in:

tensorflow-2.14.1/bazel-bin/tensorflow/

libtensorflow.so.2.14.1

libtensorflow_framework.so.2.14.1
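Since the build runs for a long time, it is worth confirming that both libraries were really produced before touching PixInsight. This is a minimal sketch; `check_tf_outputs` is a hypothetical helper name.

```shell
# Verify that both build artifacts exist in the bazel output directory.
check_tf_outputs() {
    out_dir="${1:-tensorflow-2.14.1/bazel-bin/tensorflow}"
    status=0
    for lib in libtensorflow.so.2.14.1 libtensorflow_framework.so.2.14.1; do
        if [ -f "$out_dir/$lib" ]; then
            echo "found: $out_dir/$lib"
        else
            echo "not found: $out_dir/$lib"
            status=1
        fi
    done
    return "$status"
}

# Usage, from the directory that contains tensorflow-2.14.1:
#   check_tf_outputs
```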

5) Copy libraries to PixInsight

- make a backup of the original TensorFlow files in PixInsight:

sudo mv /opt/PixInsight/bin/lib/libtensorflow.so.2.11.0 /opt/PixInsight/bin/lib/libtensorflow.so.2.11.0_cpu

sudo mv /opt/PixInsight/bin/lib/libtensorflow_framework.so.2.11.0 /opt/PixInsight/bin/lib/libtensorflow_framework.so.2.11.0_cpu

- copy new TensorFlow files with GPU support:

sudo cp bazel-bin/tensorflow/libtensorflow.so.2.14.1 /opt/PixInsight/bin/lib/libtensorflow.so_gpu

sudo cp bazel-bin/tensorflow/libtensorflow_framework.so.2.14.1 /opt/PixInsight/bin/lib/libtensorflow_framework.so_gpu

sudo ln -sf /opt/PixInsight/bin/lib/libtensorflow.so_gpu /opt/PixInsight/bin/lib/libtensorflow.so.2.11.0

sudo ln -sf /opt/PixInsight/bin/lib/libtensorflow_framework.so_gpu /opt/PixInsight/bin/lib/libtensorflow_framework.so.2.11.0

- to switch back to the CPU-only TensorFlow:

sudo ln -sf /opt/PixInsight/bin/lib/libtensorflow.so.2.11.0_cpu /opt/PixInsight/bin/lib/libtensorflow.so.2.11.0

sudo ln -sf /opt/PixInsight/bin/lib/libtensorflow_framework.so.2.11.0_cpu /opt/PixInsight/bin/lib/libtensorflow_framework.so.2.11.0
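Because switching between the GPU and CPU builds is just a matter of re-pointing two symlinks, the commands above can be wrapped in one function. This is a sketch: the name `switch_tf_backend` and the optional directory argument are hypothetical, and it assumes the backup and copy steps from step 5 have already been done, so the `_cpu` and `_gpu` files exist.

```shell
# Point the two libtensorflow*.so.2.11.0 names at either the GPU build or
# the original CPU files.
switch_tf_backend() {
    backend="$1"
    lib_dir="${2:-/opt/PixInsight/bin/lib}"
    case "$backend" in
        gpu) tf="libtensorflow.so_gpu"
             fw="libtensorflow_framework.so_gpu" ;;
        cpu) tf="libtensorflow.so.2.11.0_cpu"
             fw="libtensorflow_framework.so.2.11.0_cpu" ;;
        *)   echo "usage: switch_tf_backend gpu|cpu [lib_dir]" >&2
             return 2 ;;
    esac
    # Refuse to create dangling links if a target file is missing.
    for f in "$tf" "$fw"; do
        [ -e "$lib_dir/$f" ] || { echo "missing: $lib_dir/$f" >&2; return 1; }
    done
    ln -sf "$lib_dir/$tf" "$lib_dir/libtensorflow.so.2.11.0"
    ln -sf "$lib_dir/$fw" "$lib_dir/libtensorflow_framework.so.2.11.0"
}

# Usage in a root shell (close PixInsight first):
#   switch_tf_backend gpu
#   switch_tf_backend cpu
```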

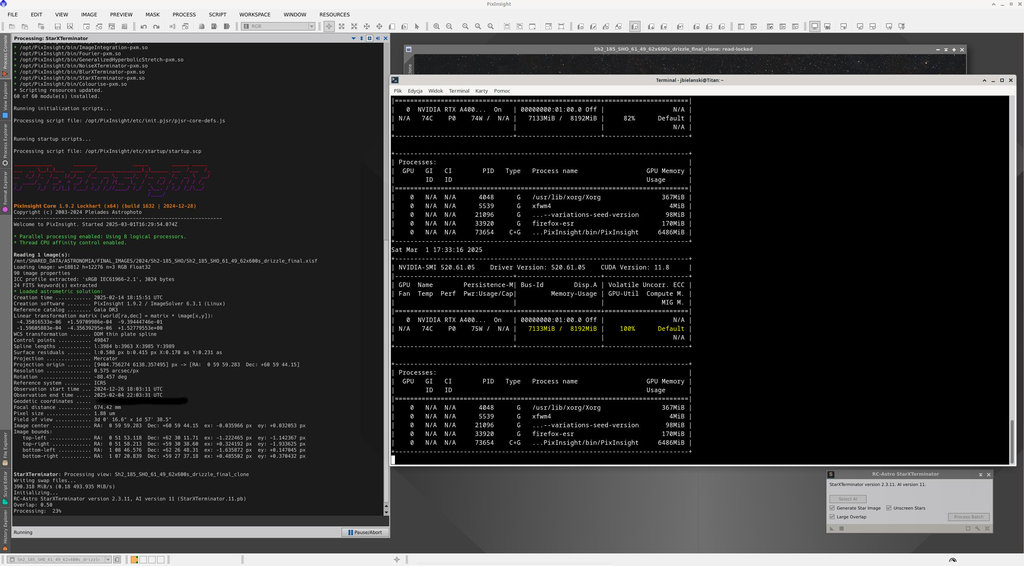

6) If everything goes well, the GPU will accelerate calculations in the RC Astro tools: NoiseXTerminator, StarXTerminator and BlurXTerminator

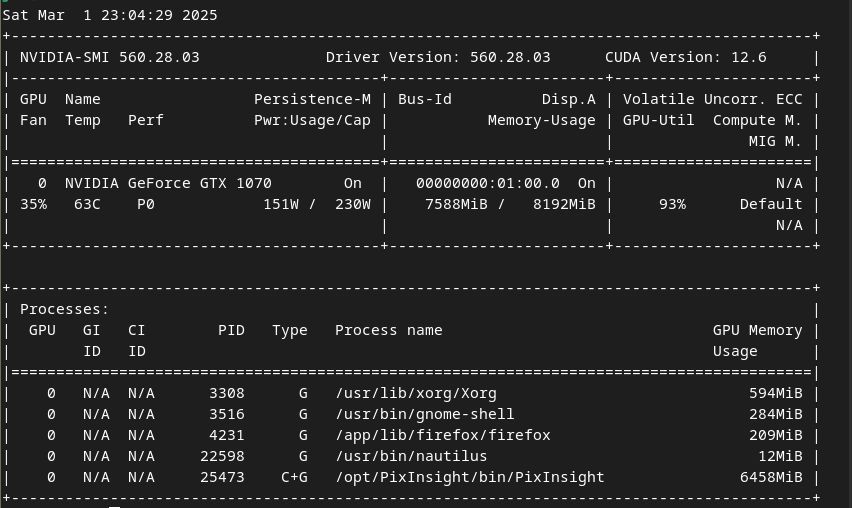

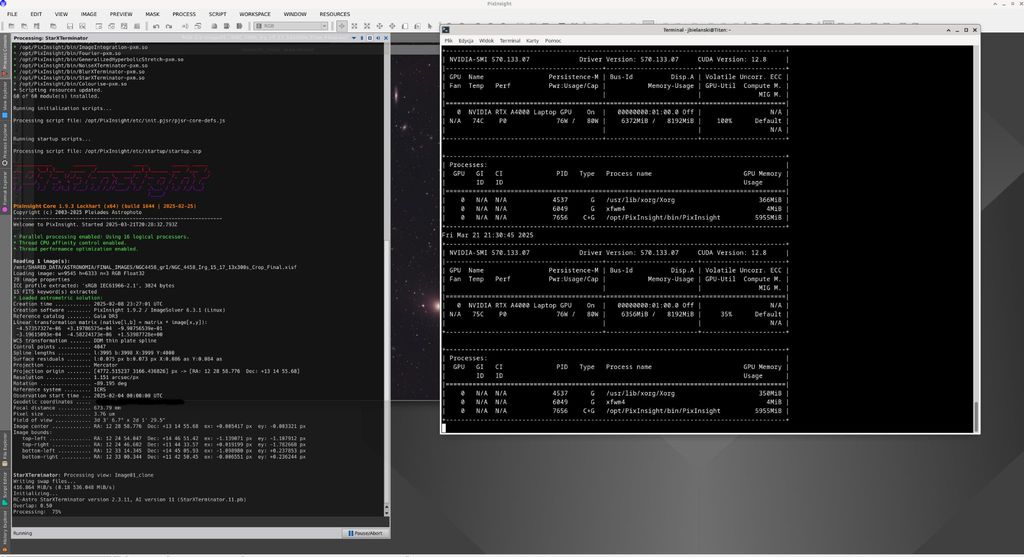

- GPU working:

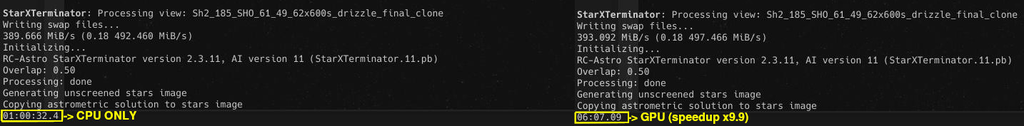

- Comparison of execution time, CPU vs GPU:

Execution time was reduced 10 times, from 1 hour to 6 minutes, for an 18812x12276 RGB Float32 image!!!