Would applying SPCC after the LRGB combine help correct for any imperfections in the matching of lightness channels?

Mark McComiskey:

Would applying SPCC after the LRGB combine help correct for any imperfections in the matching of lightness channels?

Since we are dealing with a perceptual color space, which is nonlinear, SPCC should be done before the LRGB combination, on the linear RGB file, since the color calibration will affect the relative weightings of R, G, and B and hence the value of the extracted L.

So, displaying my rank amateur understanding of this, why is it not possible to replace the lightness in the linear state? Then SPCC would correct for color issues?

Charles Hagen:

Raúl Hussein:

Very interesting @Noah Tingey . You should keep in mind that RGB is not equivalent to L due to the type of filters you are using. The downside to using luminance is that the extra signal obtained is colorless, which is why the tones appear white. More signal, yes, means less color.

This is misguided. While yes, luminance, depending on the filter set used, can include regions of the EM spectrum that are not covered by the RGB filters, this does NOT mean that they will be without any hue or color in the final image. This is simply incorrect. The color is entirely determined by the RGB data - if there is a hue present in the RGB, it will be there in the LRGB just the same. The only case where you would observe colorless signal would be an emission line (not continuum signal) that is not covered by any of the RGB filters. In space, there are almost none of these, certainly none in the visible spectrum that are dominant or even distinguishable on their own. Every object you will be imaging will get its color from continuum, where the color emitted or reflected at each point occupies a broad area of the visual spectrum and the differences in response across the three filters give its color. There is just no justification for this silly "less color" argument.

I'm sorry if this sounds absurd, but it's not an opinion. Experimentally, LRGB doesn't produce color in regions where the RGB has no signal but the luminance does. This is due to using filters like the one I'm pointing out, or capturing information on different nights or skies. It just happens.

Wei-Hao Wang:

I agree with Charles. As long as you have RGB, there is color. Many claims of loss of color are often simply caused by a mismatch between L and the luminance of RGB during the LRGB composition. If your L is generally brighter than the luminance of RGB, then you will see a drop in color saturation, and vice versa.
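The saturation effect described above can be illustrated with a minimal sketch. It assumes a simplified luma/chroma model (YIQ, from Python's standard `colorsys` module) as a stand-in for the CIE L*a*b* space that LRGB tools actually use, and the pixel value and scale factors are made up for illustration. Holding the chroma fixed and swapping in a brighter or darker luma shows the direction of the saturation change:

```python
import colorsys

def replace_luma(rgb, luma_scale):
    """Keep the pixel's chroma (I, Q) and swap in a rescaled luma (Y).

    Simplified stand-in for LRGB lightness replacement; real tools work in
    CIE L*a*b*, not YIQ.
    """
    y, i, q = colorsys.rgb_to_yiq(*rgb)
    return colorsys.yiq_to_rgb(y * luma_scale, i, q)

def saturation(rgb):
    """HSV saturation of an RGB triple with components in [0, 1]."""
    return colorsys.rgb_to_hsv(*rgb)[1]

pixel = (0.30, 0.20, 0.10)             # hypothetical orange-ish RGB pixel

matched = replace_luma(pixel, 1.0)     # L matches the luminance of RGB
too_bright = replace_luma(pixel, 1.3)  # L brighter than the luminance of RGB
too_dark = replace_luma(pixel, 0.8)    # L darker than the luminance of RGB

for name, rgb in [("matched", matched),
                  ("too bright", too_bright),
                  ("too dark", too_dark)]:
    print(f"{name:>10}: saturation = {saturation(rgb):.3f}")
```

In this toy model a brighter L lowers the saturation and a darker L raises it, which is the behavior described in the post.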

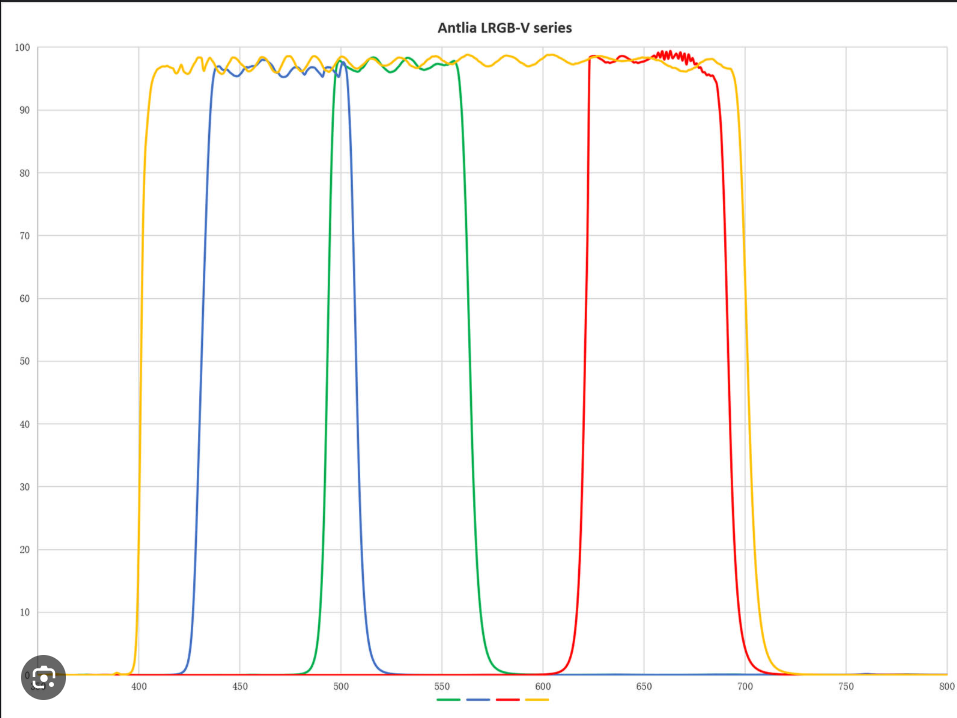

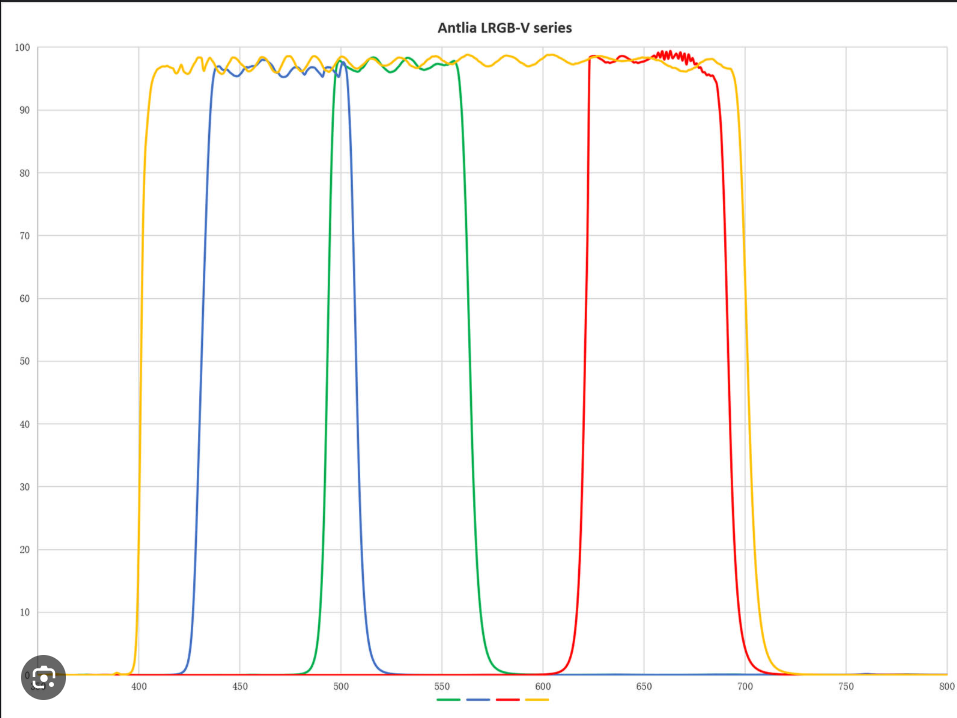

In the filter transmission curve above, L does pass way more light than R+G+B, but this can be compensated during the matching process. A mismatch that leads to a global drop in color saturation simply means the matching is not done well. What's wrong is the matching process, not the L filter.

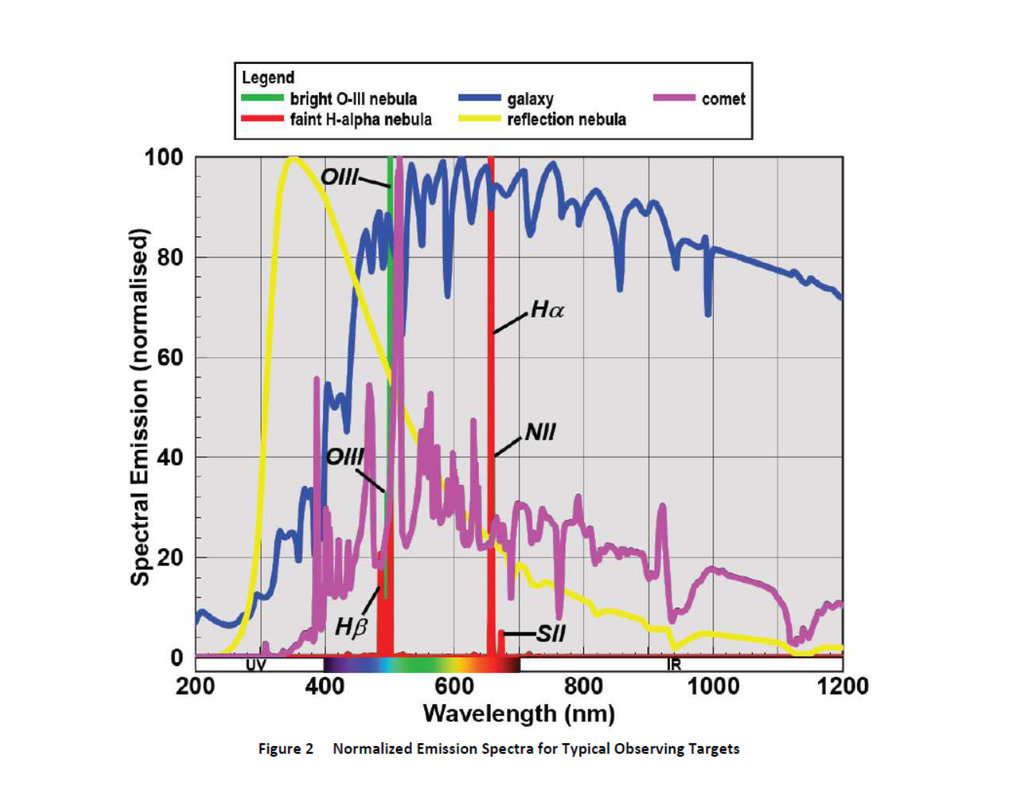

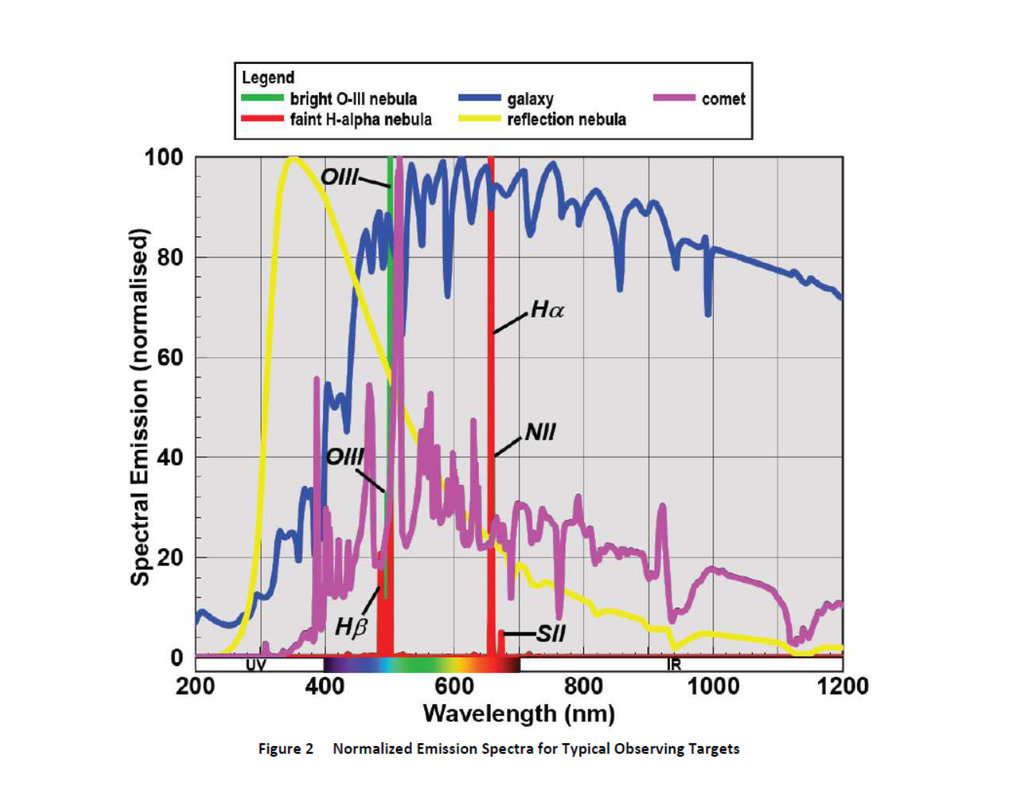

That being said, there could be a subtle effect caused by the fact that L is often not simply R+G+B. For example, an L filter may allow additional short-wavelength blue light and UV to come in, compared to the B filter, like the case in the filter transmission curves above. In such a case, blue components in your target that are particularly bright in the UV (galaxy spiral arms, for example) may appear brighter than they should in the L, compared to the luminance of RGB. This will indeed cause a drop in saturation for that component. An easy fix is to decrease the brightness of the L image to better match that, but this will cause the red and green components in the target to appear too dark in the L image, leading to an often unpleasant boost in saturation for these components. If instead the L filter passes too much near-infrared light on the red side, then you will have some trouble in the parts of your target that emit more strongly in the red/infrared. This is usually the core of spiral galaxies or anything deeply extinguished by foreground dust.

So yes, the mismatch between the transmissions of L and RGB can cause some troubles, but just using "less color" or "colorless" to describe this is oversimplifying the situation. The effect is subtle anyway. If you see something dramatic, the problem is the matching process in post processing, not the L filter.

I was talking about situations where the pixels illuminated by the luminance don't match the RGB. This is sometimes due to the filter used or if the capture context changes.

Raúl Hussein:

I was talking about situations where the pixels illuminated by the luminance don't match the RGB. This is sometimes due to the filter used or if the capture context changes.

L can capture more light than R+G+B, and you can tune down its brightness in post processing. There is nothing wrong with this.

my 5 cents

One of the contributions of the luminance master is the SNR factor: when we apply the ImageIntegration tool with SNR as the weights, more SNR means more detail.

And many of the problems I hear about are solved by applying HDRMultiscaleTransform to the SUPERLUM to equalize both masters (RGB and SUPERLUM). It solves many problems for galaxies and other targets.

CS

Brian

Wei-Hao Wang:

Raúl Hussein:

I was talking about situations where the pixels illuminated by the luminance don't match the RGB. This is sometimes due to the filter used or if the capture context changes.

L can capture more light than R+G+B, and you can tune down its brightness in post processing. There is nothing wrong with this.

I think you're misunderstanding me, and I'm going to give a radical example. Imagine I use the L-QEF luminance filter to capture UV and NIR band signals. What signal do you have in your RGB that matches and colors it? The reason for using this filter is to combat light pollution and because some objects emit more signal in those bands. Here's an example.

You completely misunderstand LRGB composition. Please go back and check Charles's and Arun's posts again.

Mark McComiskey:

So, displaying my rank amateur understanding of this, why is it not possible to replace the lightness in the linear state? Then SPCC would correct for color issues?

Remember that color calibration, whether SPCC or something else, is simply a global relative scaling of R, G, and B values. It cannot correct for the many complex and local saturation and hue mismatches that can result from a poor LRGB combination.

Also - one other thing. The whole point about a color space like L* a* b* is that it is correlated to human perception. In other words, what makes the L* value special is that it is correlated to the human perception of lightness. Human perception is nonlinear. So you want to stretch (a nonlinear transform) both the luminance file and the RGB file to be something "close" to what you would like to perceive before doing the LRGB combination for it to be meaningful.

And in the end, remember that the LRGB combination is just one step in the overall post processing workflow. We all will do many follow-up adjustments to get a "pleasing" image.
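To make the "global relative scaling" point concrete, here is a minimal sketch. The per-channel gains, the pixel values, and the Rec. 709 luminance weights are assumptions chosen for illustration (SPCC derives its factors from star photometry, and the luminance weights in PixInsight depend on the RGB working space). It shows two things: the same three factors are applied to every pixel, so the ratio between any two pixels within a channel is unchanged, and the luminance extracted from the RGB shifts once the channel weights change.

```python
# Hypothetical per-channel gains standing in for what a color calibration
# tool would derive. One factor per channel, applied to every pixel.
GAINS = (1.10, 1.00, 0.85)

# Rec. 709 luminance weights for linear RGB (an assumption for this sketch).
WEIGHTS = (0.2126, 0.7152, 0.0722)

def calibrate(pixel, gains=GAINS):
    """Apply the same per-channel gain to a pixel (global scaling)."""
    return tuple(c * g for c, g in zip(pixel, gains))

def luminance(pixel, weights=WEIGHTS):
    """Weighted sum of linear R, G, B."""
    return sum(c * w for c, w in zip(pixel, weights))

# Two hypothetical linear pixels from different parts of an image.
pixel_a = (0.020, 0.015, 0.030)
pixel_b = (0.040, 0.010, 0.012)

cal_a = calibrate(pixel_a)
cal_b = calibrate(pixel_b)

# Within each channel, the pixel-to-pixel ratio is untouched by calibration,
# so a local mismatch between two regions cannot be repaired this way.
for ch, name in enumerate("RGB"):
    before = pixel_a[ch] / pixel_b[ch]
    after = cal_a[ch] / cal_b[ch]
    print(f"{name}: a/b before = {before:.4f}, after = {after:.4f}")

# The extracted luminance does change, because the channel weighting changed.
print(f"luminance of a: before = {luminance(pixel_a):.6f}, "
      f"after = {luminance(cal_a):.6f}")
```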

Arun H:

So you want to stretch (a non linear transform) both the luminance file and the RGB file to be something "close" to what you would like to perceive before doing the LRGB combination for it to be meaningful.

Haha. This part I don't agree with. It's true that human perception is nonlinear, and L*a*b* is a nonlinear space. But mathematically this doesn't imply that a linear LRGB cannot work. Check the LRGB and HaLRGB images that I produced in the last two years. They were all composed while the individual channels were still linear. Linear LRGB composition has the advantage that the brightness/contrast stretch is very "straightforward", since only straight lines in function form are allowed. So matching L and the luminance of RGB is very easy, as there is little room to mess things up if all you can do is apply straight lines. Personally, I think the many negative feelings about LRGB stem from the very tricky operation in nonlinear space. People, particularly those who write software programs, insist on doing LRGB composition on nonlinear images. This unnecessarily increases the complexity and thus greatly increases the chance of failure.
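As a rough illustration of matching "with straight lines", the sketch below fits a single linear function `a * L + b` so that a linear L frame lines up with the luminance of a linear RGB frame. It is only a sketch under assumptions: the sample values are synthetic, the least-squares fit is one possible way to choose the line, and it is not a description of the workflow used for the images mentioned above.

```python
def fit_line(x, y):
    """Ordinary least squares for y ~ a * x + b; returns (a, b)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b

# Synthetic linear samples taken at the same positions in both frames.
# Here the RGB luminance was generated as 0.35 * L + 0.001.
l_samples = [0.010, 0.020, 0.050, 0.120, 0.300]
rgb_lum = [0.0045, 0.0080, 0.0185, 0.0430, 0.1060]

a, b = fit_line(l_samples, rgb_lum)

# Apply the straight line to L so it matches the luminance of RGB.
matched_l = [a * v + b for v in l_samples]

print(f"scale a = {a:.4f}, offset b = {b:.4f}")
```

Because the only free parameters are a scale and an offset, there is little that can go wrong in the match, which is the advantage argued for in the post.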

Wei-Hao Wang:

But mathematically this doesn't imply that a linear LRGB cannot work. Check the LRGB and HaLRGB images that I produced in recent two years. They were all composed while the individual channels were still linear. Linear LRGB composition has the advantage that brightness/contrast stretch is very "straightforward" since only straight lines in function form are allowed. So matching L and the luminance of RGB is very easy, as there is little room to mess up things if all you can do is to apply straight lines.

Hi Wei-Hao - I actually don't disagree with this. There is absolutely nothing in the mathematics that says you cannot convert linear RGB to L* a* b*. It just means that the extracted L* is not correlated to human perception. I actually suspect that there is a secondary reason this works so well for you. You (and people like John, Charles, and Kevin) are careful to get very good color data (original RGB) to begin with, so it then becomes less important whether this is done in linear or nonlinear.

Hi Arun,

I am not very sure what you meant by very good color data. My rule of thumb is that I spend about equal times on L and on R+G+B. So roughly speaking, my R or G or B exposure is about 1/3 of that of L (1/6 of the total). I am not sure if this is "very good." To me, this is just barely good enough. My RGB image appears quite noisy. So before I combine L and RGB, I apply very aggressive noise reduction to RGB. And even then, after the LRGB image is created and stretched nonlinearly, I often apply additional pass(es) of noise reduction to the ab channels in the Lab mode (and often just in the starless layer).

I absolutely agree that the quality of RGB cannot be too bad. But I kind of suspect that this is not the point of failure for other people, as my own RGB exposures are not extraordinarily long.

Arun H:

Mark McComiskey:

So, displaying my rank amateur understanding of this, why is it not possible to replace the lightness in the linear state? Then SPCC would correct for color issues?

Remember that color calibration, whether SPCC or something else, is simply a global relative scaling of R,G, and B values. It cannot correct for the many complex and local saturation and hue mismatches that can result from a poor LRGB combination.

Also - one other thing. The whole point about a color space like L* a* b* is that it is correlated to human perception. In other words, what makes the L* value special is that it is correlated to the human perception of lightness. Human perception is nonlinear. So you want to stretch (a non linear transform) both the luminance file and the RGB file to be something "close" to what you would like to perceive before doing the LRGB combination for it to be meaningful.

And in the end, remember that the LRGB combination is just one step in the overall post processing workflow. We all will do many follow up adjustments to get a "pleasing" image.

Okay on SPCC. Though what gave me the idea was the PixInsight YouTube video where they add Ha to the red channel when everything is linear and then run SPCC.

On the rest -- I don't follow that. As I understand it, if we could magically get the same flux throughput with just RGB as we do with L, we would dispense with L entirely and just do RGB. The rationale for LRGB in the current world is that we can collect more signal more rapidly, and achieve a higher SNR (and therefore bring out more detail in our images) in the lightness component of an image (where our eyes are more sensitive to detail) than we can using an equal amount of exposure time with RGB filters (i.e., 6 hours of L plus 2 hours each of RGB offers a better SNR in the lightness component of an LRGB image than is possible with 12 hours of RGB).

If I just do RGB, I can utilize stretch tools to achieve whatever effect I am looking to accomplish. So it is unclear to me why there would be a necessity to stretch both the RGB and the L before swapping in the L to the lightness channel? If I could match the levels closely in the L and the RGB lightness in linear format, wouldn't it be simpler and better to do that, and then manipulate the stretch on the new image to achieve the desired outcome? I assume that is what Wei-Hao was referring to in terms of his processing approach. To be sure - I am not at all clear on how one can do that, as I would assume any manipulation moves you out of the strictly linear. Any insight on that front would be appreciated, @Wei-Hao Wang...
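The 12-hour comparison above can be put into numbers with a back-of-the-envelope sketch. The assumptions here are not from the thread: noise is shot-noise limited (SNR grows as the square root of the collected photons), the L filter passes about three times the flux of each color filter, and the lightness of the LRGB image takes its SNR from the L data alone.

```python
import math

# Assumed: L passes roughly 3x the flux of a single R, G, or B filter.
L_FLUX_FACTOR = 3.0

def lightness_photons_rgb_only(hours_per_color):
    """Relative photons behind the lightness of an RGB-only image (R+G+B)."""
    return 3 * hours_per_color * 1.0

def lightness_photons_lrgb(hours_l):
    """Relative photons behind the lightness of an LRGB image (L only)."""
    return hours_l * L_FLUX_FACTOR

rgb_only = lightness_photons_rgb_only(4.0)  # 12 h total: 4 h each of R, G, B
lrgb = lightness_photons_lrgb(6.0)          # 12 h total: 6 h L + 2 h each RGB

# Shot-noise-limited SNR scales with the square root of the photon count.
snr_gain = math.sqrt(lrgb / rgb_only)
print(f"lightness SNR gain of LRGB over RGB-only: {snr_gain:.2f}x")
```

Under these assumptions the gain is the square root of 1.5, about 1.22x. Real results also depend on sky background, read noise, and how each data set is processed.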

Mark McComiskey:

If I just do RGB, I can utilize stretch tools to achieve whatever effect I am looking to accomplish. So it is unclear to me why there would be a necessity to stretch both the RGB and the L before swapping in the L to the lightness channel? If I could match the levels closely in the L and the RGB lightness in linear format, wouldn't it be simpler and better to do that, and then manipulate the stretch on the new image to achieve the desired outcome? I assume that is what Wei-Hao was referring to in terms of his processing approach.

An extracted L* is only meaningfully related to the human perception of lightness if one starts with an image that is meaningfully interpretable by the human eye. A stretched RGB (or L) image is; linear data isn't. Here is an excellent thread that goes into this - see Juan Conejero's comments: https://pixinsight.com/forum/index.php?threads/lrgb-comb-on-linear-or-stretched-data.18885/

For LRGB to provide a meaningful result the RGB and L input images must be nonlinear (stretched). LRGB may 'seem to work' with linear images in some cases, but these are casual results because the implemented algorithms expect nonlinear data.

Color images can be interpreted in different ways, depending on specific applications. You can think of an RGB color image as three separate planes or channels, sometimes with interesting mathematical relationships among them (for example, algorithms such as TGVDenoise can treat an RGB image as three independent grayscale images; GREYCstoration builds tensors with adjacent RGB pixel components, etc). Or you can interpret a color image as a single object composed of color and brightness (for example, when we treat chroma and lightness separately, as happens in LRGB). Or color images may not exist at all and all you see is a big bag plenty of numbers (for example, the FITS standard).

The LRGB combination process generates an RGB color image. To describe it in simple terms, the LRGB combination technique consists of replacing the lightness component of a color image with a new lightness component that usually has a higher resolution (for example, binned RGB and unbinned L) and often more signal. To achieve this the algorithm works in the CIE Lab color space, which separates lightness (L) from chroma (ab), and some parts also in the Lch space in our implementation (where chroma is interpreted as colorfulness (c) and hue (h)). Both color spaces are nonlinear and hence require nonlinear data. Theoretically we could perform an LRGB combination with linear data in the CIE XYZ space, where Y is luminance and XZ represent the chroma component, but this is difficult in practice (we investigated this option years ago) because mutual adaptation between luminance and chroma is difficult to understand and achieve when working with linear data.

Note that Wei-Hao is doing his combination in Photoshop, not PI. There may be subtleties to this that are different than how PI does it. FYI, that thread also goes into why color calibration should be done on linear data.
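The lightness replacement described in the quoted explanation can be sketched for a single pixel as follows. This is a minimal illustration, not PixInsight's implementation: it assumes stretched values encoded as sRGB with a D65 white point (PixInsight uses its own RGB working space), it ignores the Lch steps, and the pixel values are hypothetical.

```python
# D65 reference white and sRGB <-> XYZ matrices (assumed working space).
WHITE = (0.95047, 1.0, 1.08883)
RGB_TO_XYZ = (
    (0.4124564, 0.3575761, 0.1804375),
    (0.2126729, 0.7151522, 0.0721750),
    (0.0193339, 0.1191920, 0.9503041),
)
XYZ_TO_RGB = (
    (3.2404542, -1.5371385, -0.4985314),
    (-0.9692660, 1.8760108, 0.0415560),
    (0.0556434, -0.2040259, 1.0572252),
)
DELTA = 6 / 29

def _decode(c):
    """sRGB-encoded value -> linear value."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def _encode(c):
    """Linear value -> sRGB-encoded value, clipped to [0, 1]."""
    c = min(max(c, 0.0), 1.0)
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055

def _f(t):
    """CIE Lab forward nonlinearity."""
    return t ** (1 / 3) if t > DELTA ** 3 else t / (3 * DELTA ** 2) + 4 / 29

def _f_inv(t):
    """Inverse of the CIE Lab nonlinearity."""
    return t ** 3 if t > DELTA else 3 * DELTA ** 2 * (t - 4 / 29)

def _mul(matrix, vec):
    return tuple(sum(a * b for a, b in zip(row, vec)) for row in matrix)

def rgb_to_lab(rgb):
    x, y, z = _mul(RGB_TO_XYZ, tuple(_decode(c) for c in rgb))
    fx, fy, fz = (_f(v / w) for v, w in zip((x, y, z), WHITE))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)

def lab_to_rgb(lab):
    l, a, b = lab
    fy = (l + 16) / 116
    fx, fz = fy + a / 500, fy - b / 200
    xyz = tuple(_f_inv(f) * w for f, w in zip((fx, fy, fz), WHITE))
    return tuple(_encode(c) for c in _mul(XYZ_TO_RGB, xyz))

def lrgb_pixel(rgb, l_gray):
    """Keep a*, b* of the RGB pixel; take L* from a stretched gray L value."""
    new_l = rgb_to_lab((l_gray, l_gray, l_gray))[0]
    _, a, b = rgb_to_lab(rgb)
    return lab_to_rgb((new_l, a, b))

rgb_pixel = (0.5, 0.4, 0.3)            # hypothetical stretched RGB pixel
combined = lrgb_pixel(rgb_pixel, 0.5)  # hypothetical stretched L value
print("combined RGB:", tuple(round(c, 4) for c in combined))
```

The chroma (a*, b*) comes entirely from the RGB pixel and only L* is swapped, which is why the thread keeps returning to how well L is matched to the luminance of the RGB before the combination.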

Wei-Hao Wang:

Hi Arun,

I am not very sure what you meant by very good color data. My rule of thumb is that I spend about equal times on L and on R+G+B. So roughly speaking, my R or G or B exposure is about 1/3 of that of L (1/6 of the total). I am not sure if this is "very good." To me, this is just barely good enough. My RGB image appears quite noisy. So before I combine L and RGB, I apply very aggressive noise reduction to RGB. And even then, after the LRGB image is created and stretched nonlinearly, I often apply additional pass(es) of noise reduction to the ab channels in the Lab mode (and often just in the starless layer).

I absolutely agree that the quality of RGB cannot be too bad. But I kind of suspect that this is not the point of failure for other people, as my own RGB exposures are not extraordinarily long.

I typically target the 3:1:1:1 you mention also. I consider that a minimum in most cases. But I sometimes end up with 2:1:1:1 just trying to better pull something I see out of the color. Maybe if I'm doing a galaxy in an IFN field I'd use more L than usual, since I'd get plenty of galaxy color signal quickly but need a lot more SNR on the IFN, which doesn't carry a lot of color. As usual, it depends on what you are after.

Kevin

And can I ask, when we are talking about Lum here, are we really talking about the Synthetic Lum created by taking the LRGB masters and integrating using the SNR weighting?

Mark McComiskey:

If I just do RGB, I can utilize stretch tools to achieve whatever effect I am looking to accomplish. So it is unclear to me why there would be a necessity to stretch both the RGB and the L before swapping in the L to the lightness channel? If I could match the levels closely in the L and the RGB lightness in linear format, wouldn't it be simpler and better to do that, and then manipulate the stretch on the new image to achieve the desired outcome? I assume that is what Wei-Hao was referring to in terms of his processing approach.

Yes. That's what I meant. Arun did bring up one subtlety: I am using Photoshop to do this linear LRGB. It's not guaranteed that the same can be achieved in PixInsight using its LRGB tool. I once just tried throwing linear L and RGB data at it, and the result seemed OK, but this doesn't mean it will work all the time.

I'm glad you are doing the comparison of LRGB vs RGB, nice job trying to hold all other variables constant!

But boy-oh-boy, this is a much more dramatic difference than any other comparison I've seen. I mean, when I compare my stacks with different levels of integration time using subs of similar quality, it takes me at least 4x the number of lights to generate that level of difference in noise. What your result is basically saying is that using LRGB vs RGB in the ratios you used is as effective as collecting 4x the amount of integration time… or maybe more.

When I compared the area around the upper blueish-white patch (about 760x560 pixel area), I get a 2.7x SNR difference using PI's SNR tool. (I converted the patch to grayscale to make the comparison luminance based). This is equivalent to collecting 7.8x the amount of data for that area (I used an area with a bit more signal because I'm not sure what's been done with the darker background).

So color me baffled. Can someone explain the imaging science behind this, because I'm thinking it's a result that exceeds what the math indicates we should be seeing. With the ratios you described, I expected just under 2x the total photons in the LRGB case versus the RGB case, or a ~1.4x better SNR… not 2.7x better. So this is a mystery to me.

Don't get me wrong, I'm fully on board with L collecting 3x the photons so you should have better SNR the more L you collect relative to RGB given the same amount of total integration time. As was stated, this is demonstrating the obvious. I'm just trying to understand the mystery of the extremely large resulting difference in SNR both visually, and through analysis. The visual difference tipped me off based on all my experiences integrating different levels of integration time and analyzing them visually and with SNR analysis, it has given me a feeling for what 2x more photons should look like and this result greatly exceeded that difference in all of my experiences, which is why it struck me as dramatic.

Anyway, more to learn here perhaps…. There's always things to learn in any mystery.
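For reference, the square-root rule used in the post above can be written out explicitly. This sketch assumes purely shot-noise-limited data, so SNR scales with the square root of the collected photons (or integration time); the function names are illustrative.

```python
import math

def snr_gain_from_photons(photon_ratio):
    """SNR improvement expected from collecting photon_ratio times the photons."""
    return math.sqrt(photon_ratio)

def photons_needed_for_snr_gain(snr_ratio):
    """Factor of extra photons (or time) needed for a given SNR improvement."""
    return snr_ratio ** 2

print(f"2x photons -> {snr_gain_from_photons(2.0):.2f}x SNR")
print(f"2.7x SNR   -> {photons_needed_for_snr_gain(2.7):.2f}x photons")
```

By this rule a 2.7x SNR difference corresponds to about 7.3x the data; the 7.8x figure quoted above would correspond to an SNR ratio of about 2.8x. Either way it is far more than the ~1.4x expected from doubling the photons, which is the discrepancy being asked about.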

Steven Miller:

I'm glad you are doing the comparison of LRGB vs RGB, nice job trying to hold all other variables constant!

But boy-oh-boy, this is a much more dramatic difference than any other comparison I've seen. I mean, when I compare my stacks with different levels of integration time using subs of similar quality, it takes me at least 4x the number of lights to generate that level of difference in noise. What your result is basically saying is that using LRGB vs RGB in the ratios you used is as effective as collecting 4x the amount of integration time... or maybe more.

When I compared the area around the upper blueish-white patch (about 760x560 pixel area), I get a 2.7x SNR difference using PI's SNR tool. (I converted the patch to grayscale to make the comparison luminance based). This is equivalent to collecting 7.8x the amount of data for that area (I used an area with a bit more signal because I'm not sure what's been done with the darker background).

So color me baffled. Can someone explain the imaging science behind this, because I'm thinking it's a result that exceeds what the math indicates we should be seeing. With the ratios you described, I expected just under 2x the total photons in the LRGB case versus the RGB case, or a ~1.4x better SNR... not 2.7x better. So this is a mystery to me.

Don't get me wrong, I'm fully on board with L collecting 3x the photons so you should have better SNR the more L you collect relative to RGB given the same amount of total integration time. As was stated, this is demonstrating the obvious. I'm just trying to understand the mystery of the extremely large resulting difference in SNR both visually, and through analysis. The visual difference tipped me off based on all my experiences integrating different levels of integration time and analyzing them visually and with SNR analysis, it has given me a feeling for what 2x more photons should look like and this result greatly exceeded that difference in all of my experiences, which is why it struck me as dramatic.

Anyway, more to learn here perhaps.... There's always things to learn in any mystery.

Perhaps the difference you're seeing is because, while I applied the same denoising parameters to both images, I treated the L (both the native L and the L extracted from the RGB-only data) differently than I did the RGB? I'm not an expert on how SNR is preserved (or not preserved) when it comes to nonlinear images and denoising processes and LRGB combination and such. But here is a breakdown of the denoising I remember doing:

For LRGB:

1. Stretch L and RGB separately, making sure to keep them matched as best I could
2. Denoise L at a lower strength than I denoised RGB using DeepSNR's latest version. (At a separate strength because of what I discussed here https://www.astrobin.com/forum/post/189418/ and here https://www.astrobin.com/forum/post/189430/).
3. LRGB combination to add the denoised L to the denoised RGB

For RGB-only:

1. Stretch RGB
2. Pull the L channel out of the RGB image
3. Denoise this extracted L at the same strength that I denoised the real L. And denoised RGB at the same strength I denoised the other RGB
4. LRGB combination to add back the denoised extracted L to the denoised RGB

If anyone wants to compare the edit history of the two images, this is the pix project file that I used. https://www.dropbox.com/scl/fi/vz0uzdd9182ki8kfjsmw2/test.pxiproject.zip?rlkey=2wfmv77e6iepp7dhjzskjjfc5&dl=0

Noah Tingey:

Steven Miller:

I'm glad you are doing the comparison of LRGB vs RGB, nice job trying to hold all other variables constant!

But boy-oh-boy, this is a much more dramatic difference than any other comparison I've seen. I mean, when I compare my stacks with different levels of integration time using subs of similar quality, it takes me at least 4x the number of lights to generate that level of difference in noise. What your result is basically saying is that using LRGB vs RGB in the ratios you used is as effective as collecting 4x the amount of integration time... or maybe more.

When I compared the area around the upper blueish-white patch (about 760x560 pixel area), I get a 2.7x SNR difference using PI's SNR tool. (I converted the patch to grayscale to make the comparison luminance based). This is equivalent to collecting 7.8x the amount of data for that area (I used an area with a bit more signal because I'm not sure what's been done with the darker background).

So color me baffled. Can someone explain the imaging science behind this? I'm thinking it's a result that exceeds what the math indicates we should be seeing. With the ratios you described, I expected just under 2x the total photons in the LRGB case versus the RGB case, or a ~1.4x better SNR... not 2.7x better. So this is a mystery to me.
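For anyone who wants to check the arithmetic, here is a rough sketch of the scaling being assumed. It treats the data as purely shot-noise limited, so SNR goes as the square root of the photons collected, and it takes L as passing about 3x the photons of a single color filter. The function names and the 50% L fraction are hypothetical, picked only for illustration; they are not the actual ratios used in the test.

```python
import math


def snr_gain_from_photon_ratio(photon_ratio: float) -> float:
    """SNR improvement for a given photon ratio, assuming shot-noise-limited data."""
    return math.sqrt(photon_ratio)


def equivalent_integration_ratio(snr_ratio: float) -> float:
    """How many times more integration a given SNR ratio corresponds to."""
    return snr_ratio ** 2


def lrgb_photon_ratio(l_fraction: float, l_throughput: float = 3.0) -> float:
    """Photons collected by LRGB relative to RGB-only for the same total time.

    l_fraction   -- share of total integration time spent on L (assumed value)
    l_throughput -- photons through L relative to one color filter (assumed ~3x)
    """
    return l_fraction * l_throughput + (1.0 - l_fraction)


if __name__ == "__main__":
    # Hypothetical split: half the time on L, half on RGB.
    photons = lrgb_photon_ratio(0.5)
    print(f"photon ratio: {photons:.2f}")
    print(f"expected SNR gain: {snr_gain_from_photon_ratio(photons):.2f}")
    # Measured SNR ratio quoted in the post above.
    print(f"integration equivalent of 2.7x SNR: {equivalent_integration_ratio(2.7):.2f}")
```

Under this simple model, 2x the photons gives about a 1.4x SNR gain, while a 2.7x SNR ratio corresponds to roughly 7.3x the integration time, in the same ballpark as the 7.8x figure quoted above. It ignores read noise, sky background, and anything the denoising step does, which is the part still in question.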

Don't get me wrong, I'm fully on board with L collecting 3x the photons, so you should get better SNR the more L you collect relative to RGB for the same total integration time. As was stated, this is demonstrating the obvious. I'm just trying to understand the mystery of the extremely large resulting difference in SNR, both visually and through analysis. The visual difference is what tipped me off. Integrating stacks with different amounts of integration time, and analyzing them both visually and with SNR analysis, has given me a feel for what 2x more photons should look like, and this result greatly exceeded that, which is why it struck me as dramatic.

Anyway, more to learn here perhaps.... There's always things to learn in any mystery.

Perhaps the difference you're seeing is because, while I applied the same denoising parameters to both images, I treated the L (both the native L and the L extracted from the RGB-only data) differently than I did the RGB? I'm not an expert on how SNR is preserved (or not preserved) when it comes to nonlinear images and denoising processes and LRGB combination and such. But here is a breakdown of the denoising I remember doing:

For LRGB:

1. Stretch L and RGB separately, making sure to keep them matched as best I could

2. Denoise L at a lower strength than I denoised RGB using DeepSNR's latest version. (At a separate strength because of what I discussed here https://www.astrobin.com/forum/post/189418/ and here https://www.astrobin.com/forum/post/189430/).

3. LRGB combination to add the denoised L to the denoised RGB

For RGB-only:

1. Stretch RGB

2. Pull the L channel out of the RGB image

3. Denoise this extracted L at the same strength as the real L, and denoise the RGB at the same strength as the other RGB

4. LRGB combination to add back the denoised extracted L to the denoised RGB

If anyone wants to compare the edit history of the two images, this is the pix project file that I used. https://www.dropbox.com/scl/fi/vz0uzdd9182ki8kfjsmw2/test.pxiproject.zip?rlkey=2wfmv77e6iepp7dhjzskjjfc5&dl=0

Good clarification. I don't know enough about those two processes to shed any light on it, but I think this is a fruitful area to explore as one possible variable that may have driven much of the difference beyond the larger number of photons captured when using LRGB.
