So, integrating all the subs instead of the four channel masters? My L's are 250s and color subs are 600s, so would the color subs be weighted 2.4x over the L subs? Not sure about that, but also curious what others say. FWIW, when I create a superlum, I integrate the masters and use psf snr for weighting. For the masters, my own blend of psf signal weight and fwhm.

Cheers,

Scott


Scott Badger:

So, integrating all the subs instead of the four channel masters? My L's are 250s and color subs are 600s, so would the color subs be weighted 2.4x over the L subs? Not sure about that, but also curious what others say. FWIW, when I create a superlum, I integrate the masters and use psf snr for weighting. For the masters, my own blend of psf signal weight and fwhm.

Cheers,

Scott

That's what I do too. I am curious about how John does it with exposure time.


Ashraf AbuSara:

Ashraf,

You are right! I was shooting from the hip when I suggested using exposure time for weighting, and that won’t work for the reason you’ve pointed out. I’ve always used SNR for weighting, and I believe that is the right way to do it.

John
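To make the SNR-versus-exposure-time point concrete, here is a minimal sketch. It assumes the channel masters have already been scaled to a common signal level, so that each one's noise can be summarized by a single standard deviation. Under that assumption, weights proportional to 1/sigma² (that is, to SNR²) give the lowest noise in the weighted mean. The function names and the noise values are invented for illustration; this is not how any particular PixInsight process computes its weights.

```python
import math

def inverse_variance_weights(sigmas):
    """Normalized weights proportional to 1/sigma^2 (i.e. SNR^2 at equal signal)."""
    raw = [1.0 / (s * s) for s in sigmas]
    total = sum(raw)
    return [w / total for w in raw]

def combined_sigma(sigmas, weights):
    """Noise of a weighted mean of independent images (weights sum to 1)."""
    return math.sqrt(sum((w * s) ** 2 for w, s in zip(weights, sigmas)))

# Hypothetical noise levels for the L, R, G, B masters at a common signal level.
sigmas = [1.0, 2.0, 2.0, 2.0]

snr_weights = inverse_variance_weights(sigmas)
equal_weights = [0.25] * 4

sigma_snr = combined_sigma(sigmas, snr_weights)      # SNR-based weighting
sigma_equal = combined_sigma(sigmas, equal_weights)  # everything weighted the same
```

With these made-up numbers the SNR-weighted result is less noisy than the equally weighted one, which is the behaviour an exposure-time weight would not guarantee when sub lengths differ between filters.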


Scott Badger:

So, integrating all the subs instead of the four channel masters? My L's are 250s and color subs are 600s, so would the color subs be weighted 2.4x over the L subs? Not sure about that, but also curious what others say. FWIW, when I create a superlum, I integrate the masters and use psf snr for weighting. For the masters, my own blend of psf signal weight and fwhm.

Cheers,

Scott

I call that approach the “washing machine” method of averaging. You just dump everything into the machine and turn it on. That approach might produce a workable result but it’s not really the right way to produce a correct super-lum image. You might get close if all of the signals are equal and balanced but in general I wouldn’t recommend using that method.

John


Wei-Hao Wang:

About combining an L filter image with the synthetic gray-scale from RGB, I usually don't do that. This is because:

1. I typically spend about half of the total integration time on L, and 1/6 on each of R,G,B (roughly). So although adding RGB to L can improve the S/N, the improvement is usually quite small. Precisely speaking, every small improvement should be welcome. However, because of the next point, this small improvement becomes not worthwhile.

2. My seeing is variable. Between weeks, it can vary from 1" to 2" or worse. Even within a night, on some nights it can go from 1.8" to 1.2". Because of this, I decide what to image in the next couple of hours based on the current seeing. When seeing is good (<1.5", or <1.3", depending on targets), I shoot L. When seeing is mediocre, I shoot R,G,B. When seeing is bad (>2"), I close the roof and go to sleep. So my RGB images almost always have poor image quality compared to L. In such a case, combining L and RGB does harm to image sharpness and makes the PSF shape ugly.
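As a rough check on point 1, here is a back-of-the-envelope sketch. It assumes shot-noise-limited data (read noise ignored), an L passband that collects photons about three times faster than any single colour filter, and the time split quoted above (half on L, one sixth on each of R, G, B). Under those assumptions S/N scales with the square root of the photons collected. The function name and the rate parameters are illustrative only.

```python
import math

def snr_gain_from_adding_rgb(frac_l, frac_per_color, l_rate=3.0, color_rate=1.0):
    """Ratio of S/N(L + R + G + B) to S/N(L alone), shot-noise-limited case."""
    photons_l = l_rate * frac_l                      # photons collected through L
    photons_rgb = 3 * color_rate * frac_per_color    # photons through R, G and B together
    return math.sqrt((photons_l + photons_rgb) / photons_l)

# Half of the total time on L, one sixth on each colour filter.
gain = snr_gain_from_adding_rgb(0.5, 1.0 / 6.0)
```

Here `gain` works out to sqrt(4/3), roughly a 15% improvement at best, and less once the RGB frames' poorer seeing or any read noise is taken into account.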

I encourage people to monitor seeing and then decide whether to shoot L or RGB. This will give you optimal image sharpness in your LRGB pictures. But of course, this requires extra effort. You can’t simply prepare a full night of NINA script, launch it, and go to sleep. You need to dynamically adjust your plan every few hours according to the conditions.

I have no hope that trying to guess seeing would help to pick when to shoot Lum. The seeing can vary considerably over the night and sometimes over an hour. I simply shoot a lot of data and sort out the best stuff after the fact.

John


John Hayes:

Scott Badger:

So, integrating all the subs instead of the four channel masters? My L's are 250s and color subs are 600s, so would the color subs be weighted 2.4x over the L subs? Not sure about that, but also curious what others say. FWIW, when I create a superlum, I integrate the masters and use psf snr for weighting. For the masters, my own blend of psf signal weight and fwhm.

Cheers,

Scott

I call that approach the “washing machine” method of averaging. You just dump everything into the machine and turn it on. That approach might produce a workable result but it’s not really the right way to produce a correct super-lum image. You might get close if all of the signals are equal and balanced but in general I wouldn’t recommend using that method.

John

The all-subs integration, or the 4-masters integration too? I wasn't suggesting the all-subs approach; I just wasn't sure if it was what was being referred to (it wasn't). I usually don't use a superlum anyhow since, like Wei-Hao (congrats, btw!), I'm using Lum to counter seeing. I'm guessing the scale of your 'lot of data' dwarfs mine : ), plus when imaging through the night I'm checking on things every 2-3 hours, so I can react to changes, and for me the difference between best and poor-but-usable is arc seconds, not fractions of an arc second.

Cheers,

Scott


Arun H:

I think part of the problem is that the CIELAB color space is non linear and intended to mimic human perception. This is why captured luminance, which is linearly related to incident photons weighted by the spectral response of the sensor is not readily correlated to the L* from the conversion of RGB to L*a*b*. This is where a lot of people struggle, I think, in doing LRGB combine. If not done right, the colors look washed out. The displayed image is, in the end, RGB, so the replacement of the “luminance” from an RGB image is, in the end, a redistribution of the captured luminance frame in some way to the three color channels.

Arun,

It took me a while to find it but these two videos from PI show the method that I use to combine L with RGB. They do a great job of explaining why LAB space works so well. Check it out... it's worth your time! This is the method that I use all the time.

https://www.youtube.com/playlist?list=PL0Nr1Pazdc5_dsesz_sjMoBSMY0kaDcXw

John


John Hayes:

I have no hope that trying to guess seeing would help to pick when to shoot Lum. The seeing can vary considerably over the night and sometimes over an hour. I simply shoot a lot of data and sort out the best stuff after the fact.

John

Hi John,

What you do is very reasonable if your site has such rapidly variable seeing. My site also has variable seeing on hour scales, but the amplitude for this time scale is rarely larger than 10%. It's interesting to know that your site has less consistent seeing within a night. If you hadn't told me, I would have guessed the opposite.


John Hayes:

Arun H:

I think part of the problem is that the CIELAB color space is non linear and intended to mimic human perception. This is why captured luminance, which is linearly related to incident photons weighted by the spectral response of the sensor is not readily correlated to the L* from the conversion of RGB to L*a*b*. This is where a lot of people struggle, I think, in doing LRGB combine. If not done right, the colors look washed out. The displayed image is, in the end, RGB, so the replacement of the “luminance” from an RGB image is, in the end, a redistribution of the captured luminance frame in some way to the three color channels.

Arun,

It took me a while to find it but these two videos from PI show the method that I use to combine L with RGB. They do a great job of explaining why LAB space works so well. Check it out... it's worth your time! This is the method that I use all the time.

https://www.youtube.com/playlist?list=PL0Nr1Pazdc5_dsesz_sjMoBSMY0kaDcXw

John

Thanks for those links, John. I recall the PI email when they released those. I never had a chance to watch them fully and will now do so.

I should add: I think the term LRGB is a bit misleading. An image can be fully represented in three color dimensions (RGB, XYZ, etc.). So L is not something that is dimensionally independent of RGB but rather something that can be computed from it. LRGB combination, then, is simply redistributing something that has no color information but high SNR into each one of the original RGB dimensions through various conversions that attempt to preserve the color information originally captured. But the key, I think, is still to have high quality color information so that the original conversion from RGB to LAB results in good ab values. This is also why I think it is wrong to think there is a magic ratio of L to RGB that results in a good image, any more than there is a magic exposure or integration time.


I have tried LRGB twice on emission nebulae targets, and I could not get the LRGB result to look nearly as nice as the RGB image in terms of color. I tried using LRGBCombination and ImageBlend script. Since stretching the L and RGB before combining appears to be some kind of mystical art, I don't doubt I could be lacking in that step of the process. However I figured that if I created a synthetic luminance from a stretched RGB image and tried to combine that back using ImageBlend I could get something that looks close to the original RGB image in terms of pleasing color, but I still could not quite get there. Thus I mostly stick to RGB imaging, though I do not rule out using LRGB in the future when I want to capture something faint like tidal features of a galaxy. To those who successfully use LRGB techniques to create pleasing color images in my eyes, many in this thread included, I hope to reach your level someday.


Joey Conenna:

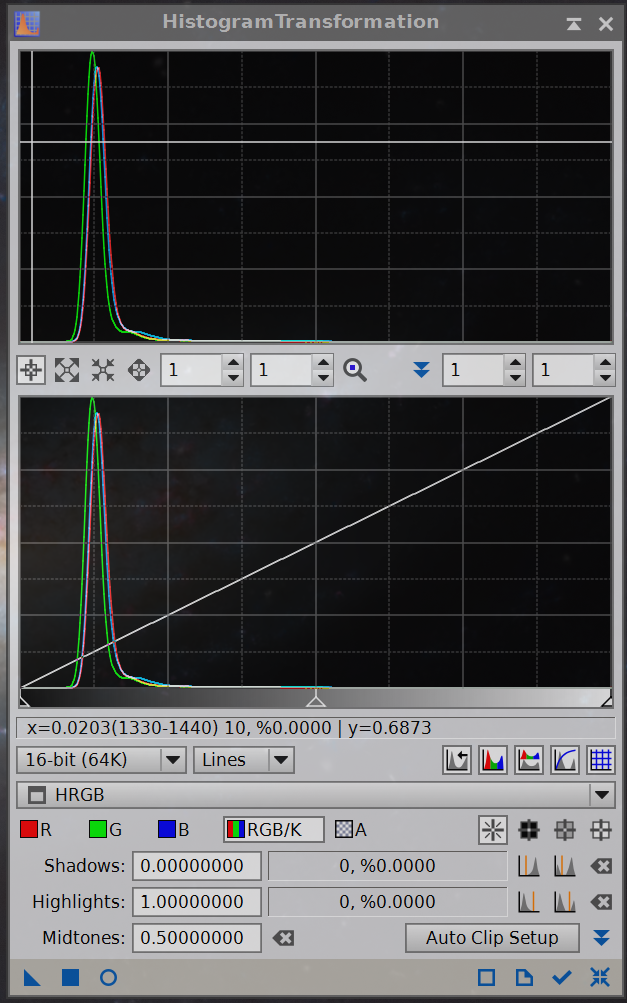

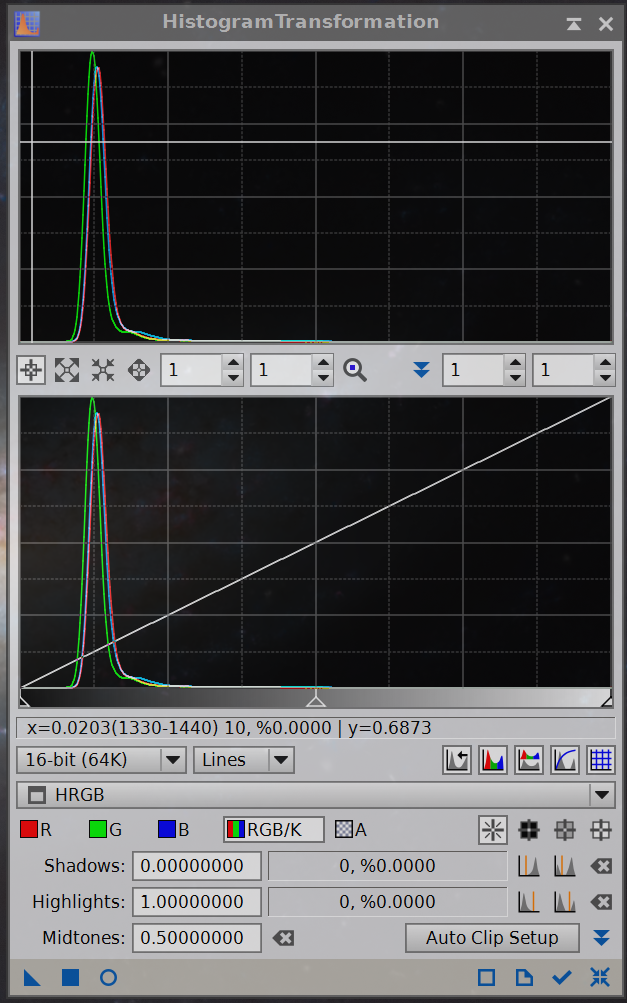

I have tried LRGB twice on emission nebulae targets, and I could not get the LRGB result to look nearly as nice as the RGB image in terms of color. I tried using LRGBCombination and ImageBlend script. Since stretching the L and RGB before combining appears to be some kind of mystical art, I don't doubt I could be lacking in that step of the process. However I figured that if I created a synthetic luminance from a stretched RGB image and tried to combine that back using ImageBlend I could get something that looks close to the original RGB image in terms of pleasing color, but I still could not quite get there. Thus I mostly stick to RGB imaging, though I do not rule out using LRGB in the future when I want to capture something faint like tidal features of a galaxy. To those who successfully use LRGB techniques to create pleasing color images in my eyes, many in this thread included, I hope to reach your level someday.

Joey,

The key to getting the L and RGB to blend well is to stretch them so that their peaks and black points are very close to the same. The easiest way to do that is to preview your histogram in Histogram Transformation (HT) and compare that to the histogram on your other file. So I typically stretch the RGB first, looking for a histogram maybe something like this:

Then I change the view to my L master and drag the L's STF over to HT and see if the histogram curve is close. I make changes to the STF and reapply to HT, checking to see that it matches the RGB. When I get it right, I keep that stretched L master and apply it to the RGB master via LRGB combination. Keep the LRGB combination sliders at 0.5 for both Lightness and Saturation. I also try to under-stretch a little at this beginning phase so I can always add more small curves and contrast adjustments as I fine-tune things.

Kevin
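The by-eye histogram matching described above can be roughly approximated in numbers. The sketch below assumes the black points are already aligned at zero and matches only the medians, using the midtones transfer function (MTF) as I understand PixInsight's HistogramTransformation and STF to define it. It is not a replacement for comparing the full histogram shapes, and the sample pixel values are invented.

```python
from statistics import median

def mtf(m, x):
    """Midtones transfer function with midtones balance m (0 < m < 1), x in [0, 1]."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    return (m - 1.0) * x / ((2.0 * m - 1.0) * x - m)

def midtones_to_map(x_from, y_to):
    """Midtones balance m such that mtf(m, x_from) == y_to.

    Solving the MTF equation for m gives the same expression with the
    roles of m and the target value swapped, hence mtf(y_to, x_from).
    """
    return mtf(y_to, x_from)

# Hypothetical samples: a linear L master and the luminance of a stretched RGB.
l_linear = [0.005, 0.01, 0.02]
rgb_lum_stretched = [0.15, 0.20, 0.30]

m = midtones_to_map(median(l_linear), median(rgb_lum_stretched))
l_stretched = [mtf(m, v) for v in l_linear]
```

After this stretch the median of `l_stretched` equals the median of `rgb_lum_stretched`; whether the rest of the histogram lines up still has to be checked visually, as Kevin describes.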


Joey Conenna:

I have tried LRGB twice on emission nebulae targets, and I could not get the LRGB result to look nearly as nice as the RGB image in terms of color. I tried using LRGBCombination and ImageBlend script. Since stretching the L and RGB before combining appears to be some kind of mystical art, I don't doubt I could be lacking in that step of the process. However I figured that if I created a synthetic luminance from a stretched RGB image and tried to combine that back using ImageBlend I could get something that looks close to the original RGB image in terms of pleasing color, but I still could not quite get there. Thus I mostly stick to RGB imaging, though I do not rule out using LRGB in the future when I want to capture something faint like tidal features of a galaxy. To those who successfully use LRGB techniques to create pleasing color images in my eyes, many in this thread included, I hope to reach your level someday.

Perceptual luminance, or L*, can be calculated from RGB coordinates. What you are doing in LRGB combination is substituting the perceptual luminance thus calculated with the stretched luminance that you captured separately, then converting the new Lab values back to RGB for display. As you can see, this will only work if the luminance values you put back are close to the original ones. Most people do not use this level of care and will be disappointed with the results. Techniques such as what Kevin described above get you to that level of “closeness”: the midpoints are close together and the histograms are very similar. Understretching a little bit also preserves more of the color saturation and allows for better adjustment.

If you look at old threads on this topic, LRGB was introduced not primarily to improve the SNR but to save time in the days of CCD imaging, when download times were significant. The primary purpose was to have smaller RGB files (4x smaller with 2x2 binning). With CMOS, this is not a problem, so the purpose has morphed to SNR improvement.
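The substitution described above can be sketched for a single pixel. This is only an illustration: it treats the pixel values as linear sRGB with a D65 white point, which is an assumption (PixInsight applies its own RGB working space settings), and the helper names are made up. It converts RGB to L*a*b*, swaps in a new L*, and converts back without clipping.

```python
# D65 reference white and the standard linear-sRGB <-> XYZ matrices.
WHITE = (0.95047, 1.0, 1.08883)
RGB_TO_XYZ = (
    (0.4124564, 0.3575761, 0.1804375),
    (0.2126729, 0.7151522, 0.0721750),
    (0.0193339, 0.1191920, 0.9503041),
)
XYZ_TO_RGB = (
    (3.2404542, -1.5371385, -0.4985314),
    (-0.9692660, 1.8760108, 0.0415560),
    (0.0556434, -0.2040259, 1.0572252),
)
DELTA = 6.0 / 29.0

def _mul(matrix, vec):
    """3x3 matrix times 3-vector."""
    return tuple(sum(matrix[i][j] * vec[j] for j in range(3)) for i in range(3))

def _f(t):
    """CIE L*a*b* forward nonlinearity."""
    return t ** (1.0 / 3.0) if t > DELTA ** 3 else t / (3 * DELTA ** 2) + 4.0 / 29.0

def _f_inv(t):
    """Inverse of _f."""
    return t ** 3 if t > DELTA else 3 * DELTA ** 2 * (t - 4.0 / 29.0)

def rgb_to_lab(rgb):
    x, y, z = _mul(RGB_TO_XYZ, rgb)
    fx, fy, fz = _f(x / WHITE[0]), _f(y / WHITE[1]), _f(z / WHITE[2])
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))

def lab_to_rgb(lab):
    l_star, a, b = lab
    fy = (l_star + 16) / 116
    fx, fz = fy + a / 500, fy - b / 200
    xyz = (WHITE[0] * _f_inv(fx), WHITE[1] * _f_inv(fy), WHITE[2] * _f_inv(fz))
    return _mul(XYZ_TO_RGB, xyz)

def replace_lightness(rgb, new_l_star):
    """Keep a* and b* from the RGB pixel, substitute a new L*."""
    _, a, b = rgb_to_lab(rgb)
    return lab_to_rgb((new_l_star, a, b))

pixel = (0.30, 0.20, 0.10)                         # hypothetical RGB pixel
l_orig, a_orig, b_orig = rgb_to_lab(pixel)
same = replace_lightness(pixel, l_orig)            # matched L*: pixel is unchanged
brighter = replace_lightness(pixel, l_orig + 20)   # mismatched L*: a*, b* kept, RGB shifts
```

When the substituted L* equals the original, the pixel comes back unchanged. When it differs, a* and b* are kept but the RGB values move, which is one way to see why a poorly matched luminance changes how saturated the result looks.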


Joey Conenna:

I have tried LRGB twice on emission nebulae targets, and I could not get the LRGB result to look nearly as nice as the RGB image in terms of color. I tried using LRGBCombination and ImageBlend script. Since stretching the L and RGB before combining appears to be some kind of mystical art, I don't doubt I could be lacking in that step of the process. However I figured that if I created a synthetic luminance from a stretched RGB image and tried to combine that back using ImageBlend I could get something that looks close to the original RGB image in terms of pleasing color, but I still could not quite get there. Thus I mostly stick to RGB imaging, though I do not rule out using LRGB in the future when I want to capture something faint like tidal features of a galaxy. To those who successfully use LRGB techniques to create pleasing color images in my eyes, many in this thread included, I hope to reach your level someday.

For pure emission line targets, indeed you gain relatively little from LRGB. Usually one of the RGB filters gets most of the signal, so an L image which is approximately R+G+B doesn't give you much more than just that single color filter. For decades, quite a few experienced astrophotographers have advised that one should just use RGB for emission line objects instead of LRGB, and I agree with them.

As for reproducing RGB color in LRGB, the key is to match their luminance exactly, like what Kevin said above. Personally I use Photoshop's layer functions to do this. This allows instant preview and instant comparison (by blinking) of the luminance of RGB and the luminance of the L image, while doing the adjustments. I haven't found a PI method that can lead to a better match, but this may simply be because my PI skills are lousy.


Wei-Hao Wang:

Joey Conenna:

I have tried LRGB twice on emission nebulae targets, and I could not get the LRGB result to look nearly as nice as the RGB image in terms of color. I tried using LRGBCombination and ImageBlend script. Since stretching the L and RGB before combining appears to be some kind of mystical art, I don't doubt I could be lacking in that step of the process. However I figured that if I created a synthetic luminance from a stretched RGB image and tried to combine that back using ImageBlend I could get something that looks close to the original RGB image in terms of pleasing color, but I still could not quite get there. Thus I mostly stick to RGB imaging, though I do not rule out using LRGB in the future when I want to capture something faint like tidal features of a galaxy. To those who successfully use LRGB techniques to create pleasing color images in my eyes, many in this thread included, I hope to reach your level someday.

For pure emission line targets, indeed you gain relatively little from LRGB. Usually one of the RGB filters gets most of the signal, so an L image which is approximately R+G+B doesn't give you much more than just that single color filter. For decades, quite a few experienced astrophotographers have advised that one should just use RGB for emission line objects instead of LRGB, and I agree with them.

As for reproducing RGB color in LRGB, the key is to match their luminance exactly, like what Kevin said above. Personally I use Photoshop's layer functions to do this. This allows instant preview and instant comparison (by blinking) of the luminance of RGB and the luminance of the L image, while doing the adjustments. I haven't found a PI method that can lead to a better match, but this may simply be because my PI skills are lousy.

Just on your last point. In order to match perceptual luminance to the RGB image I have been using the linear fit process in PI to first match the new luminance to the L extracted from the RGB before adding it into the RGB using the LRGB process. This usually seems to work and give a pleasing result. I don't know if it is rigorously correct though and otherwise I'd do something similar to Kevin's description above
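For readers who want to see what a linear fit does to the captured luminance, here is a minimal sketch using ordinary least squares. It fits a scale and an offset that map the captured L onto the luminance extracted from the RGB. PixInsight's LinearFit may differ internally (for example in how it rejects pixels), and the pixel values below are invented so that the relation is exactly linear.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + offset."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical pixel samples: captured L, and L extracted from the RGB image.
captured_l = [0.10, 0.20, 0.40, 0.80]
rgb_lum = [0.07, 0.12, 0.22, 0.42]

slope, offset = fit_line(captured_l, rgb_lum)
matched_l = [slope * v + offset for v in captured_l]
```

A fit like this has only two free parameters. If the two images differ by more than a scale and an offset, for instance because they were stretched differently, it cannot make the histograms agree in shape.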


Tim Hawkes:

Just on your last point. In order to match perceptual luminance to the RGB image I have been using the linear fit process in PI to first match the new luminance to the L extracted from the RGB before adding it into the RGB using the LRGB process. This usually seems to work and give a pleasing result. I don't know if it is rigorously correct though and otherwise I'd do something similar to Kevin's description above

I actually tried that a few times. Unfortunately very often the resultant L doesn't look like the luminance of RGB, and I can do much better in Photoshop manually within a reasonably short time. There aren't many parameters in linear fit that I can adjust. So there isn't an obvious way I could have messed it up, or could improve it.


Wei-Hao Wang:

I actually tried that a few times. Unfortunately very often the resultant L doesn't look like the luminance of RGB, and I can do much better in Photoshop manually within a reasonably short time. There aren't many parameters in linear fit that I can adjust. So there isn't an obvious way I could have messed it up, or could improve it.

It might be that I have been fortunate; I don't know. Immediately following the LRGB step I have always needed to stretch the image back up using EXP and ARCSINh and increase saturation using curves before it looked OK. But the final results were usually good, i.e., the new luminance added better detail (which was my aim) and the intensity of colour versus colour noise level in the final image was about the same (as far as I could judge) as that in the RGB image. It might be a question of when in the process one makes a judgement about whether the LRGB transfer has 'worked'?


Tim Hawkes:

It might be that I have been fortunate; I don't know. Immediately following the LRGB step I have always needed to stretch the image back up using EXP and ARCSINh and increase saturation using curves before it looked OK. But the final results were usually good, i.e., the new luminance added better detail (which was my aim) and the intensity of colour versus colour noise level in the final image was about the same (as far as I could judge) as that in the RGB image. It might be a question of when in the process one makes a judgement about whether the LRGB transfer has 'worked'?

Unlike many others, I do LRGB composition in linear space, before any stretch. The only stretch I make to RGB or L is to match the luminance of RGB and L, and it's linear. Despite this, Photoshop's adjustment layers offer a very high quality instant preview of the nonlinear stretching results (sort of like screen stretch in PI, but much more flexible, and it can include a saturation boost as well) while keeping the underlying file linear. They also offer an instant preview of the LRGB composition and the ability to switch back and forth quickly between RGB and LRGB. So even when the data are in their most original form, I can judge whether the LRGB composition has done any damage to color fidelity by quickly blinking between RGB and LRGB.
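One simple way to picture a linear-space combination is to rescale each RGB pixel so that its luminance equals the matched L value while its R:G:B ratios stay fixed. The sketch below is an illustration of that idea only. It is not the Photoshop layer workflow described above, and the equal luminance weights and sample values are assumptions.

```python
# Assumption: luminance is the plain average of R, G and B.
LUMA_WEIGHTS = (1.0 / 3.0, 1.0 / 3.0, 1.0 / 3.0)

def lrgb_linear(rgb, lum, eps=1e-12):
    """Scale a linear RGB pixel so its luminance becomes `lum`."""
    y = sum(w * c for w, c in zip(LUMA_WEIGHTS, rgb))
    scale = lum / max(y, eps)        # eps guards against division by zero
    return tuple(c * scale for c in rgb)

pixel = (0.04, 0.02, 0.01)           # hypothetical linear RGB pixel
out = lrgb_linear(pixel, 0.035)      # hypothetical matched L value
```

Because every channel is multiplied by the same factor, the colour ratios of the pixel are unchanged and only its brightness follows the L data. This is also why the L and RGB luminance need to be matched first: any global mismatch becomes a global brightness change.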


Wei-Hao Wang:

Tim Hawkes:

Just on your last point. In order to match perceptual luminance to the RGB image I have been using the linear fit process in PI to first match the new luminance to the L extracted from the RGB before adding it into the RGB using the LRGB process. This usually seems to work and give a pleasing result. I don't know if it is rigorously correct though and otherwise I'd do something similar to Kevin's description above

I actually tried that a few times. Unfortunately very often the resultant L doesn't look like the luminance of RGB, and I can do much better in Photoshop manually within a reasonably short time. There aren't many parameters in linear fit that I can adjust. So there isn't an obvious way I could have messed it up, or could improve it.

This is a case where using a good layer-based photo editor like Photoshop or Affinity Photo is a better way to go about it. Faster and easier to make fine adjustments.


Tony Gondola:

Wei-Hao Wang:

Tim Hawkes:

Just on your last point. In order to match perceptual luminance to the RGB image I have been using the linear fit process in PI to first match the new luminance to the L extracted from the RGB before adding it into the RGB using the LRGB process. This usually seems to work and give a pleasing result. I don't know if it is rigorously correct though and otherwise I'd do something similar to Kevin's description above

I actually tried that a few times. Unfortunately very often the resultant L doesn't look like the luminance of RGB, and I can do much better in Photoshop manually within a reasonably short time. There aren't many parameters in linear fit that I can adjust. So there isn't an obvious way I could have messed it up, or could improve it.

This is a case where using a good layer-based photo editor like Photoshop or Affinity Photo is a better way to go about it. Faster and easier to make fine adjustments.

OK, thanks Tony and @Wei-Hao Wang. Clearly it is time that I educated myself in this. I have Affinity Photo and will experiment to try and do something similar.


Wei-Hao Wang:

John Hayes:

I have no hope that trying to guess seeing would help to pick when to shoot Lum. The seeing can vary considerably over the night and sometimes over an hour. I simply shoot a lot of data and sort out the best stuff after the fact.

John

Hi John,

What you do is very reasonable if your site has such rapidly variable seeing. My site also has variable seeing on hour scales, but the amplitude for this time scale is rarely larger than 10%. It's interesting to know that your site has less consistent seeing within a night. If you hadn't told me, I would have guessed the opposite.

For what it is worth, it is possible to construct a trigger in NINA based on FWHM using Powerups. So you could have a sequence that does Lum as long as FWHMs are below a threshold and RGB otherwise. That might allow you to get more sleep.
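The decision logic behind such a trigger can be sketched independently of NINA. The code below is plain Python, not NINA or Powerups syntax. The function name is hypothetical, and the default thresholds are borrowed from the seeing figures Wei-Hao quoted earlier in the thread (measured FWHM in subs is not the same thing as seeing, so real thresholds would need tuning).

```python
from statistics import median

def choose_filter_set(recent_fwhm_arcsec, lum_max=1.5, rgb_max=2.0):
    """Pick what to shoot next from the FWHM of the most recent subs.

    Returns "L" when the median FWHM is below lum_max, "RGB" when it is
    between lum_max and rgb_max, and "STOP" when it is worse than rgb_max.
    """
    if not recent_fwhm_arcsec:
        raise ValueError("need at least one FWHM measurement")
    fwhm = median(recent_fwhm_arcsec)   # median resists a single bad frame
    if fwhm < lum_max:
        return "L"
    if fwhm <= rgb_max:
        return "RGB"
    return "STOP"

good_night = choose_filter_set([1.2, 1.3, 1.4])
mediocre = choose_filter_set([1.6, 1.8, 1.7])
bad = choose_filter_set([2.3, 2.5, 2.1])
```

Using the median of the last few frames rather than the latest value alone is a design choice to avoid flipping filters on one gust-affected sub.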


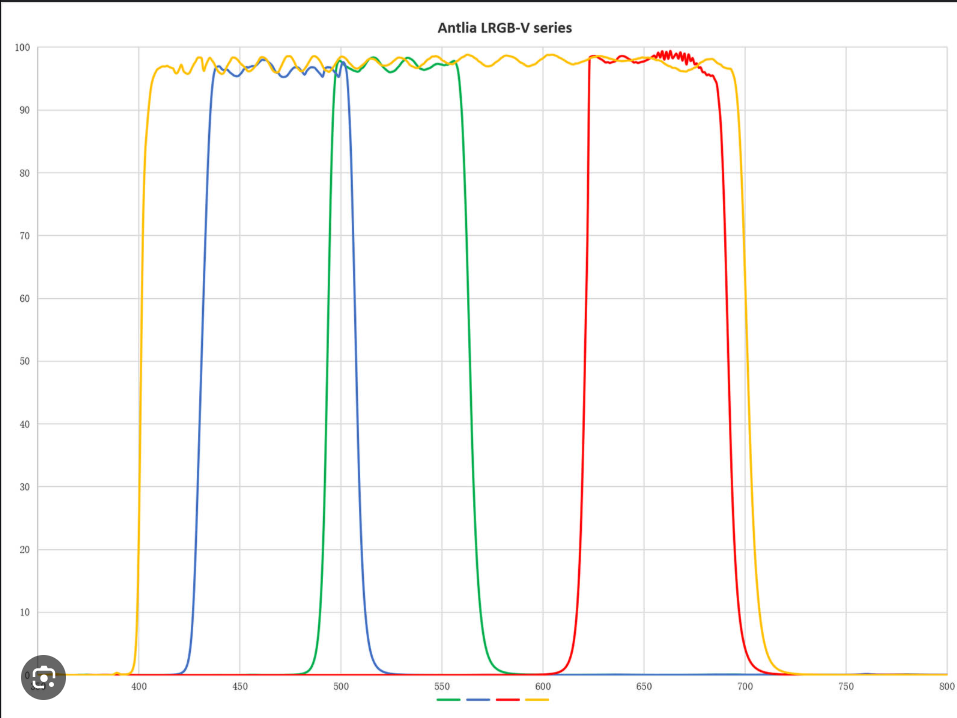

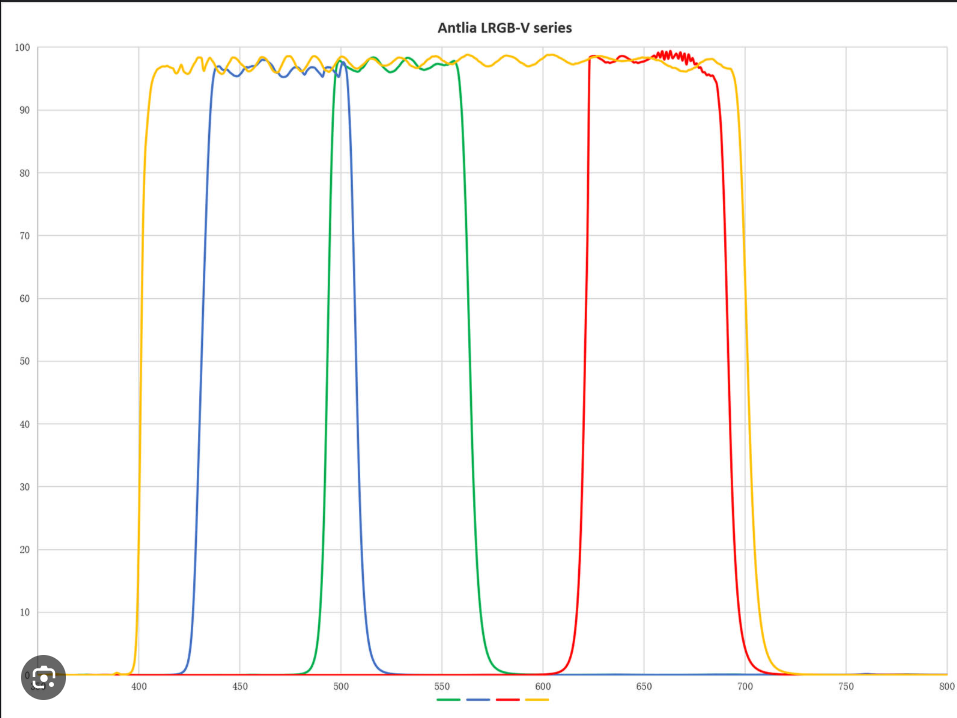

Very interesting @Noah Tingey . You should keep in mind that RGB is not equivalent to L due to the type of filters you are using. The downside to using luminance is that the extra signal obtained is colorless, which is why the tones appear white. More signal, yes, means less color.


Raúl Hussein:

Very interesting @Noah Tingey . You should keep in mind that RGB is not equivalent to L due to the type of filters you are using. The downside to using luminance is that the extra signal obtained is colorless, which is why the tones appear white. More signal, yes, means less color.

This is misguided. While yes, Luminance, depending on the filter set used, can include regions of the EM spectrum that are not covered by the RGB filters, this does NOT mean that they will be without any hue or color in the final image. This is simply incorrect. The color is entirely determined by the RGB data - if there is a hue present in the RGB, it will be there in the LRGB just the same. The only case where you would observe colorless signal would be an emission line (not continuum signal) that is not covered by any of the RGB filters. In space, there are almost none of these, certainly none in the visible spectrum that are dominant or even distinguishable on their own. Every object you will be imaging will get its color from continuum, where the light emitted or reflected at each point occupies a broad area of the visual spectrum and the differences in response across the three filters give it its color. There is just no justification for this silly "less color" argument.

I agree with Charles. As long as you have RGB, there is color. Many claims of loss of color are simply caused by a mismatch between L and the luminance of RGB during the LRGB composition. If your L is generally brighter than the luminance of RGB, then you will see a drop in color saturation, and vice versa.

In the filter transmission curve above, L does pass way more light than R+G+B, but this can be compensated during the matching process. A mismatch that leads to a global drop in color saturation simply means the matching is not done well. What's wrong is the matching process, not the L filter.

That being said, there could be a subtle effect caused by the fact that L is often not simply R+G+B. For example, an L filter may allow additional short-wavelength blue light and UV to come in, compared to the B filter, like the case in the filter transmission curves above. In such a case, blue components in your target that are particularly bright in the UV (galaxy spiral arms, for example) may appear brighter than they should in the L, compared to the luminance of RGB. This will indeed cause a drop in saturation for those components. An easy fix is to decrease the brightness of the L image to better match them, but this will cause the red and green components in the target to appear too dark in the L image, leading to an often unpleasant boost in saturation for these components. If instead the L filter passes too much near-infrared light on the red side, then you will have some trouble in the parts of your target that emit more strongly in the red/infrared. This is usually the core of spiral galaxies or anything deeply extinguished by foreground dust.

So yes, the mismatch between the transmissions of L and RGB can cause some troubles, but just using "less color" or "colorless" to describe this is oversimplifying the situation. The effect is subtle anyway. If you see something dramatic, the problem is the matching process in post processing, not the L filter.
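For anyone who wants to see what "matching" can mean in the simplest case, here is a minimal sketch, not anyone's actual workflow. It assumes linear (unstretched) data scaled to [0, 1], uses the Rec. 709 weights as a stand-in for "the luminance of RGB", and fits a single global scale and offset by least squares. The function names `rgb_luminance` and `match_l_to_rgb` are made up for illustration.

```python
import numpy as np

def rgb_luminance(rgb):
    """Weighted luminance of an (H, W, 3) linear RGB image.

    The Rec. 709 weights are an assumption; other weightings are possible.
    """
    weights = np.array([0.2126, 0.7152, 0.0722])
    return rgb @ weights

def match_l_to_rgb(l_img, rgb):
    """Fit scale and offset so that scale * L + offset ~ luminance(RGB)."""
    target = rgb_luminance(rgb).ravel()
    # Design matrix with one column for L and one constant column.
    design = np.column_stack([l_img.ravel(), np.ones(l_img.size)])
    (scale, offset), *_ = np.linalg.lstsq(design, target, rcond=None)
    return scale * l_img + offset, scale, offset

# Synthetic example: an L frame that is brighter than the RGB luminance.
rng = np.random.default_rng(0)
rgb = rng.uniform(0.05, 0.9, size=(8, 8, 3))
l_img = 2.4 * rgb_luminance(rgb) + 0.05
matched, scale, offset = match_l_to_rgb(l_img, rgb)
```

A global fit like this only removes the overall brightness mismatch. It does nothing about the wavelength-dependent differences described above (extra UV or near-infrared in the L), which vary from one part of the target to another.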

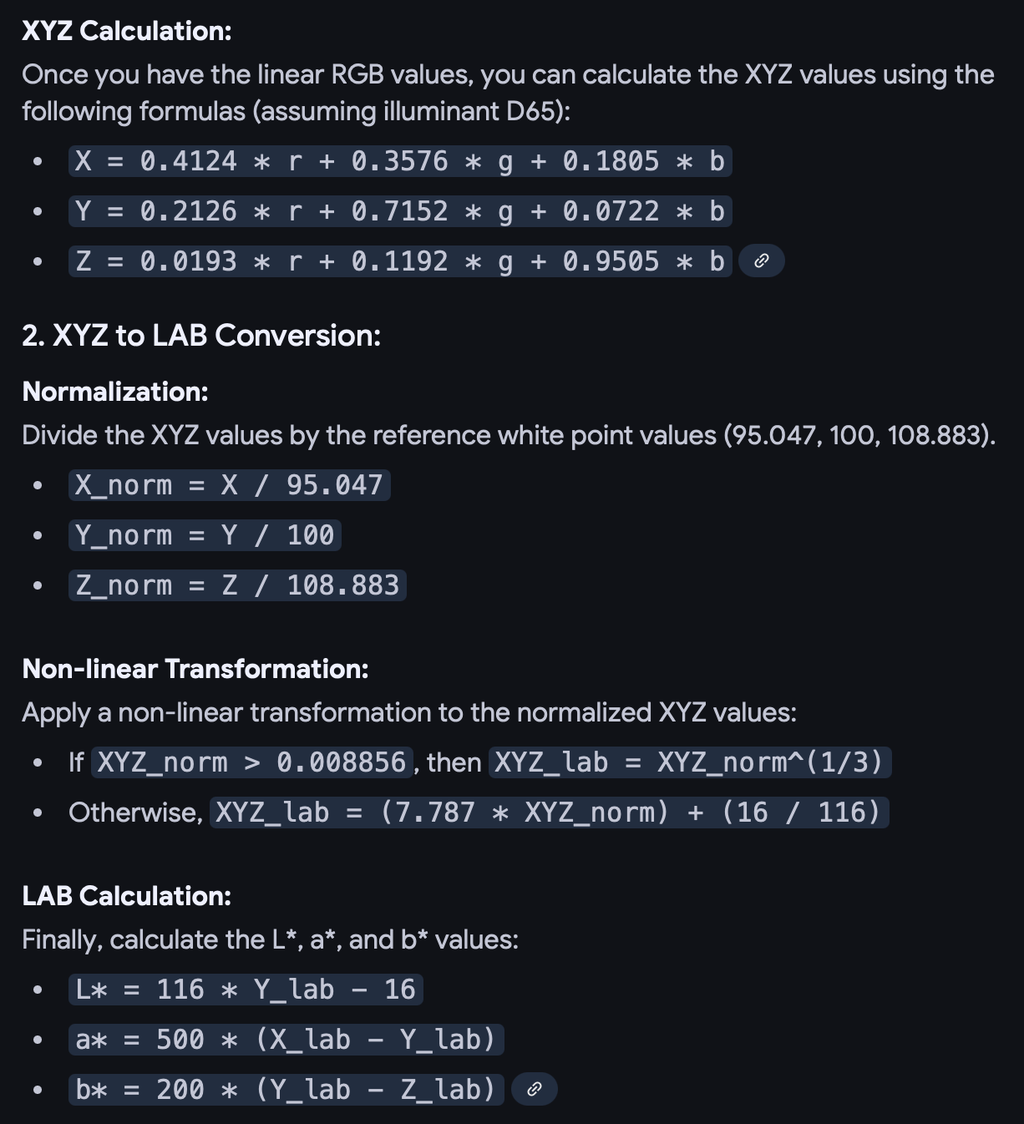

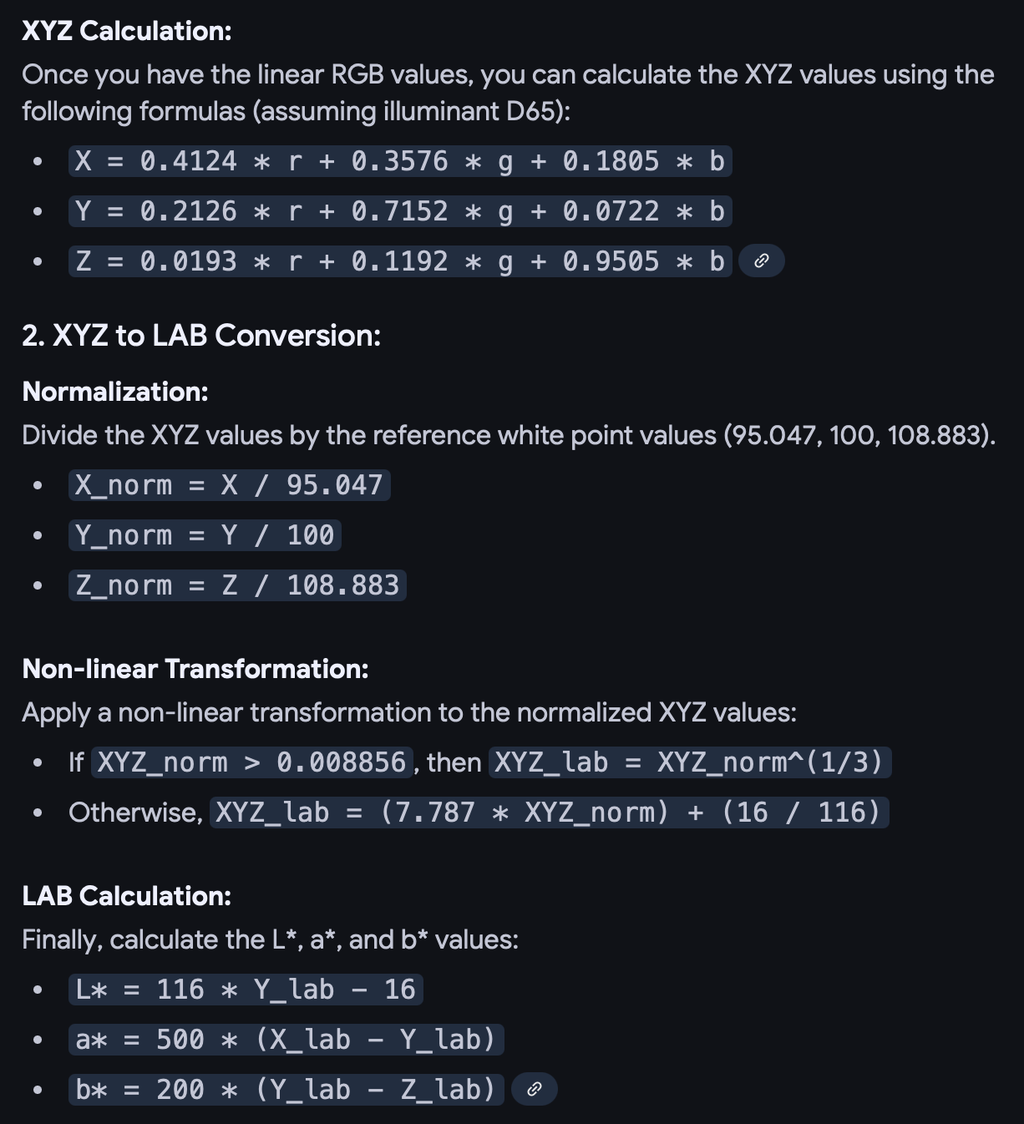

To see what is happening, it is helpful to look at the mathematics. As stated earlier - a color file can be fully described by either RGB or L*a*b*. Conversion from one to another can simply be viewed as a nonlinear coordinate transform. For example, the below is one way to do it:

If you have your original RGB data taken with color filters - you can use something like the above to transform it to L*a*b* space. Then replace the L* so calculated by your L from the luminance filter and convert back to RGB space for display. This step is the essence of LRGB combination.

Note a few things:

- Your luminance L will absolutely impact each one of the RGB values of the final file. Replacing luminance will have an impact on primary RGB color values.

- If your original RGB file is noisy, some of that noise will carry over to the final RGB file after LRGB combination.

- The value of L* depends on the values of R,G, and B. Similarly, in the reverse calculation, the values of R, G, and B are impacted by L*. You can see that "luminance" (or actually perceptual lightness) is not something that is independent of RGB, rather, it is a function of RGB that aims to capture the human perception of lightness and depends on all three colors.

The whole idea here is to replace the L* component with something less noisy - which in turn will result in a less noisy final RGB file. By stretching and matching your luminance with the extracted luminance from RGB, you are effectively creating a "replacement" L* - a less noisy and sharper version of perceptual lightness that, together with the original a* and b*, you can then convert back to RGB for display. But unless the replacement of the original L* with the higher SNR L is done carefully, you can easily see that you will corrupt color - because your final RGB values will be impacted and not in a good way. Yes - the final file will be less noisy - but the color will be off. This is why it is crucial to have the L's matched before the combination.
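The L* replacement described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the RGB values are treated as linear, in [0, 1], with sRGB primaries and a D65 white point, and the standard CIE L*a*b* formulas are used, which may not be the exact transform referred to earlier. The function names (`rgb_to_lab`, `lab_to_rgb`, `lrgb_combine`) are hypothetical.

```python
import numpy as np

# Linear sRGB -> CIE XYZ matrix (D65) and the D65 white point (assumed).
M = np.array([[0.4124564, 0.3575761, 0.1804375],
              [0.2126729, 0.7151522, 0.0721750],
              [0.0193339, 0.1191920, 0.9503041]])
WHITE = np.array([0.95047, 1.0, 1.08883])
DELTA = 6 / 29

def _f(t):
    # CIE nonlinearity: cube root with a linear segment near zero.
    return np.where(t > DELTA**3, np.cbrt(t), t / (3 * DELTA**2) + 4 / 29)

def _f_inv(t):
    return np.where(t > DELTA, t**3, 3 * DELTA**2 * (t - 4 / 29))

def rgb_to_lab(rgb):
    xyz = rgb @ M.T
    fx, fy, fz = np.moveaxis(_f(xyz / WHITE), -1, 0)
    return np.stack([116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)], axis=-1)

def lab_to_rgb(lab):
    lstar, a, b = np.moveaxis(lab, -1, 0)
    fy = (lstar + 16) / 116
    f_xyz = np.stack([fy + a / 500, fy, fy - b / 200], axis=-1)
    xyz = _f_inv(f_xyz) * WHITE
    return xyz @ np.linalg.inv(M).T

def lrgb_combine(rgb, new_lstar):
    """Keep a* and b* from the RGB data, swap in a replacement L*."""
    lab = rgb_to_lab(rgb)
    lab[..., 0] = new_lstar
    return lab_to_rgb(lab)

# A replacement L* that is 10 units too bright shifts all three channels.
rng = np.random.default_rng(1)
rgb = rng.uniform(0.05, 0.9, size=(4, 4, 3))
lstar = rgb_to_lab(rgb)[..., 0]
same = lrgb_combine(rgb, lstar)
shifted = lrgb_combine(rgb, lstar + 10.0)
```

Feeding the original L* back in returns the original RGB, while a mismatched L* changes R, G, and B together, which is the color corruption described above.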

Great discussion - really liked the examples that were shown.
