John Hayes:

Kevin Morefield:

Brian Puhl:

andrea tasselli:

It seems like demonstrating the obvious.

You'd be surprised how many people are preaching that luminance is pointless, and the subsequent cult following they're developing.

The simplest way I can think of this question is "do I want to throw away 2/3s of my photons on all of my subs or some of my subs?"

I'm sorry Kevin, you lost me. Why are we throwing away 2/3 of the photons anywhere?

John

If I shoot with an R, G, or B filter I block 2/3s of the photons collected by my optics. An R filter blocks G and B for example. If I use an L filter I get all of the photons. So if I believe L is not needed I'm choosing to lose 2/3s of my photons on the subs that could have been shot with L.


Kevin Morefield:

If I shoot with an R, G, or B filter I block 2/3s of the photons collected by my optics. An R filter blocks G and B for example. If I use an L filter I get all of the photons. So if I believe L is not needed I'm choosing to lose 2/3s of my photons on the subs that could have been shot with L.

In terms of signal (photons), you're not adding the shot L signal to the luminance signal inherent in the RGB, you're replacing it.

FWIW, here's my take. You start with a time budget for a target, let's say 15 hours. You can shoot 5 hours each of R, G, & B (or 15 hours OSC), but maybe that's not quite enough to bring out that extra halo around a galaxy, or the tidal stream between two interacting galaxies, or the IFN surrounding the target, or some of the fainter features of a nebula, and you're willing to sacrifice some color information for extra detail info. You might; there's more you can do to accommodate weak color in processing than show detail not sufficiently represented in the data.

How much L? At 5 hours (R, G, & B each now 3.33 hrs), you haven't gained anything. The 5 hr L equals the luminance of a 15 hr RGB (snr). How much beyond that is the choice of the photographer depending on detail to be gained vs additional color given up.

That said, I use L differently. As I've mentioned before, my seeing is poor to terrible and highly variable. The average of the data I keep is 3-3.5" (fwhm), so seeing conditions overall average significantly higher. During the winter, probably close to 40% of clear nights are 5" or worse. Seeing can also double, or halve, through the night, and there can be large differences across the sky (not just relative to the horizon). Anyhow, long sob story, long... I shoot L when seeing is best and R, G, & B otherwise.

As has been discussed, the lower resolution RGB doesn't matter overall resolution-wise, and now even with just 5 hours of L, I might not be gaining any detail, but I'm improving the resolution of the detail I would have gotten out of a 15 hr RGB. As Wei-Hao and John have both noted, though, the L we shoot (whether with an L filter, clear filter, or no filter) isn't the same as the luminance inherent in the RGB.

John, I'm interested in hearing more about the technique you describe. I assume a shot L (or SuperL) can be used in place of the syn-lum, but when integrating with the tru-lum, how is the weighting used to favor the profile(?) of the tru-lum? And would it defeat my purpose anyhow; essentially losing the resolution gain of my shot L?

Cheers,

Scott
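The time-budget arithmetic in the post above can be checked with a toy model. This is only a sketch under stated assumptions: shot-noise-limited data, an L filter that passes exactly three times the photon rate of each colour filter, and equal signal in R, G, and B. The names `PHOTON_RATE` and `snr` are made up for illustration.

```python
import math

# Assumed relative photon rates per hour (idealised: L = R + G + B).
PHOTON_RATE = {"L": 3.0, "R": 1.0, "G": 1.0, "B": 1.0}

def snr(hours_by_filter):
    """Shot-noise-limited SNR of the summed frames: photons / sqrt(photons)."""
    photons = sum(PHOTON_RATE[f] * h for f, h in hours_by_filter.items())
    return math.sqrt(photons)

# 15 h of RGB only versus 5 h of L only.
rgb_only = snr({"R": 5.0, "G": 5.0, "B": 5.0})
lum_only = snr({"L": 5.0})
print("15 h RGB:", round(rgb_only, 3), " 5 h L:", round(lum_only, 3))

# Same 15 h budget, increasing share of L; colour gets what is left.
for l_hours in (5.0, 7.5, 10.0):
    colour_hours = (15.0 - l_hours) / 3.0
    lum = snr({"L": l_hours})
    colour = snr({"R": colour_hours, "G": colour_hours, "B": colour_hours})
    print(f"L={l_hours:4.1f} h  lum SNR={lum:.2f}  colour SNR={colour:.2f}")
```

In this model the luminance SNR only pulls ahead of the RGB-only case once L exceeds 5 hours, and the colour SNR drops as it does, which is the trade-off described above.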


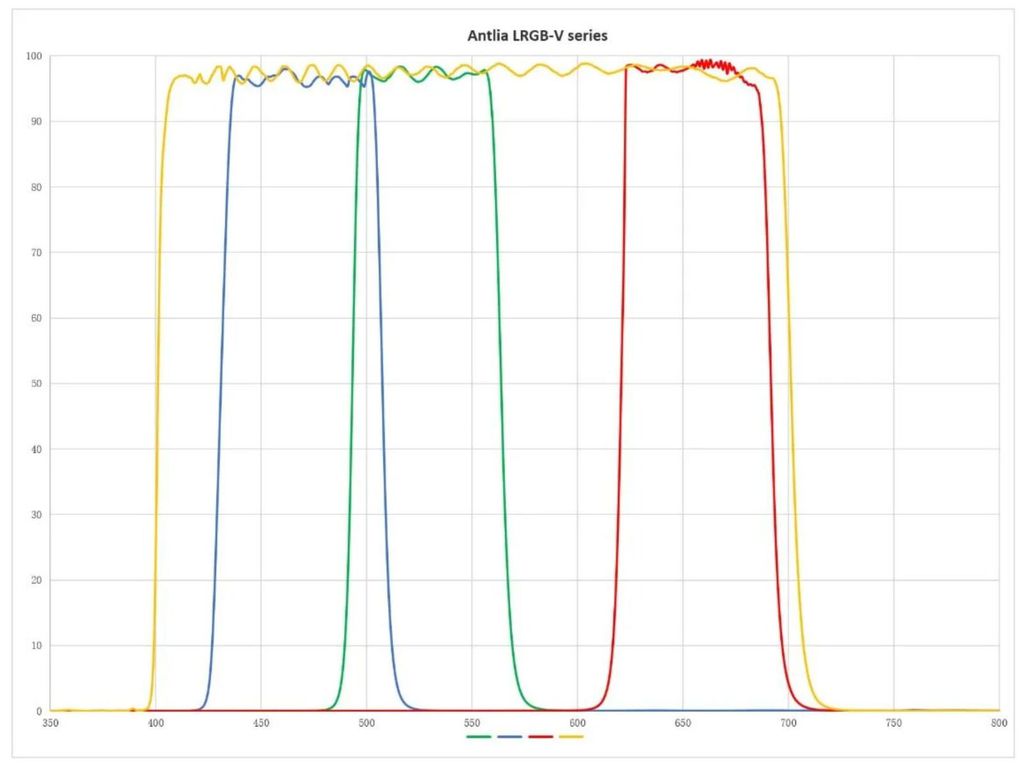

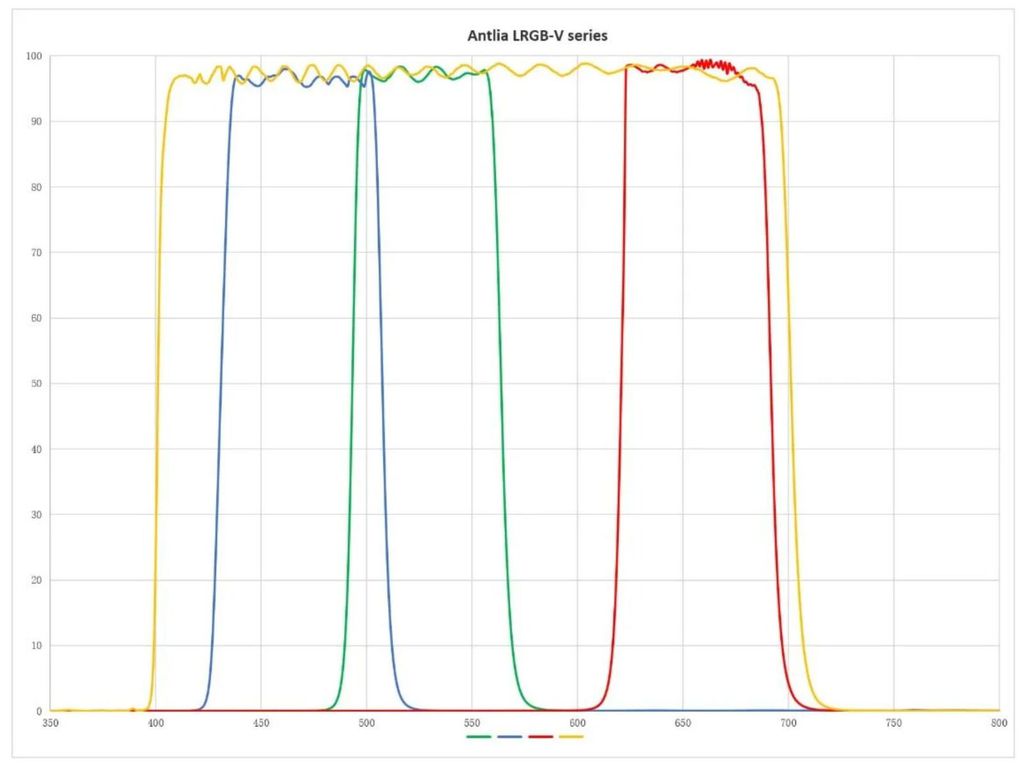

It didn't take long for this to kinda become one of those back and forth arguments we often have on here. For those that think luminance is the same signal as RGB combined... I implore you to look at the graphs for any LRGB filter set.  Luminance, demonstrated in yellow, encompasses considerably more signal in this filter set (just an example). In here, roughly a third of the signal is lost when compared to RGB. You don't gain sharpness, most of us should know that. You do however gain a substantial amount of signal in a single filter, compared to that of red, green, or blue.


John Hayes:

I'm sorry Kevin, you lost me. Why are we throwing away 2/3 of the photons anywhere?

John

I guess what Kevin meant is that when we image with an R filter, we are throwing away the B and G photons, compared to the case of imaging with an L filter. Of course R, G, B images are necessary for a color picture. So a reasonable compromise is to spend only a fraction of time on R, G, B and leave some fraction of time to L where photon throughput is maximized.


Kevin Morefield:

If I shoot with an R, G, or B filter I block 2/3s of the photons collected by my optics. An R filter blocks G and B for example. If I use an L filter I get all of the photons. So if I believe L is not needed I'm choosing to lose 2/3s of my photons on the subs that could have been shot with L.

Thanks Kevin (and Wei-Hao) for the clarification. I was off thinking of something more esoteric while ignoring the obvious. The only way to solve this problem might be to use a Foveon sensor. It is a bit surprising that the Foveon idea never really took off. The problems must be with noise and color fidelity.

John


Scott Badger:

John, I'm interested in hearing more about the technique you describe. I assume a shot L (or SuperL) can be used in place of the syn-lum, but when integrating with the tru-lum, how is the weighting used to favor the profile(?) of the tru-lum? And would it defeat my purpose anyhow; essentially losing the resolution gain of my shot L?

The problem is easy to understand using an extreme case. Let's say that you've got 100 hours of RGB data and a single Lum frame. If you extract the synthetic Lum from your RGB data, it will be much cleaner than your single frame of true Lum. If you simply average those two frames your SNR will go down--and that's no good! The ONLY reason to combine synthetic-Lum with real Lum is to increase SNR. That's why you have to properly weight the two contributions in order to get the statistics "right". You can combine the syn-Lum with true-Lum using SNR to weight the contributions of each in the ImageIntegration tool. However, the ImageIntegration tool offers what I think might be an even better way to weight the combination: Exposure Time. That's probably the best way to combine the two data sets. Just make sure that your syn-lum data contains the correct total exposure time in the meta-data (i.e. the FITS/XISF header).

One of these days, I'll have to play with some images and generate some statistics to show what works the best. If I had any software chops left, this would make for a great tool in PI. Just feed it RGB and Lum data, check a few boxes telling it what you want to do and have it automatically spit out a proper LRGB combination. (Yes... I know about the LRGB combination tool in PI but I don't think that's doing the "right" calculation.)

John
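The extreme case above (a very deep synthetic Lum plus a single true Lum frame) can be put into numbers. This is a sketch, not what ImageIntegration does internally: it assumes both images are scaled to the same signal level, that their noise is independent, and that the SNR values are known. The SNR figures and the helper `combined_snr` are invented for illustration.

```python
import math

def combined_snr(snrs, weights):
    """SNR of a weighted mean of images normalised to unit signal.

    With unit signal, image i has noise sigma_i = 1 / snr_i, so the
    variance of the weighted mean is sum((w_i * sigma_i) ** 2).
    """
    total = sum(weights)
    variance = sum((w / total / s) ** 2 for w, s in zip(weights, snrs))
    return 1.0 / math.sqrt(variance)

# Assumed numbers for illustration only.
snr_syn = 100.0   # synthetic Lum from a very deep RGB stack
snr_true = 5.0    # a single true Lum frame

plain = combined_snr([snr_syn, snr_true], [1.0, 1.0])
weighted = combined_snr([snr_syn, snr_true], [snr_syn ** 2, snr_true ** 2])

print("plain average:      ", round(plain, 2))     # far below 100
print("inverse-variance avg:", round(weighted, 2))  # slightly above 100
```

The plain average drags the result down to roughly twice the SNR of the poor frame, while weights proportional to SNR squared (inverse variance) never do worse than the better input.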


Thanks John! Maybe I misunderstood. I thought you were weighting the integration of the tru-lum (*perceived* luminance (L*) extracted from the Lab color space, right?) and syn-lum (integration of R, G, and B?) in some way that maintains the profile (probably not the right term) of the tru-lum and then re-inserting the integration back into the Lab space.

Cheers,

Scott


John Hayes:

Scott Badger:

John, I'm interested in hearing more about the technique you describe. I assume a shot L (or SuperL) can be used in place of the syn-lum, but when integrating with the tru-lum, how is the weighting used to favor the profile(?) of the tru-lum? And would it defeat my purpose anyhow; essentially losing the resolution gain of my shot L?

The problem is easy to understand using an extreme case. Let's say that you've got 100 hours of RGB data and a single Lum frame. If you extract the synthetic Lum from your RGB data, it will be much cleaner than your single frame of true Lum. If you simply average those two frames your SNR will go down--and that's no good! The ONLY reason to combine synthetic-Lum with real Lum is to increase SNR. That's why you have to properly weight the two contributions in order to get the statistics "right". You can combine the syn-Lum with true-Lum using SNR to weight the contributions of each in the ImageIntegration tool. However, the ImageIntegration tool offers what I think might be an even better way to weight the combination: Exposure Time. That's probably the best way to combine the two data sets. Just make sure that your syn-lum data contains the correct total exposure time in the meta-data (i.e. the FITS/XISF header).

One of these days, I'll have to play with some images and generate some statistics to show what works the best. If I had any software chops left, this would make for a great tool in PI. Just feed it RGB and Lum data, check a few boxes telling it what you want to do and have it automatically spit out a proper LRGB combination. (Yes... I know about the LRGB combination tool in PI but I don't think that's doing the "right" calculation.)

John

Hi John,

When combining LUM images (say from two separate nights' viewing, or equally a syn-lum and a lum) I usually first align and then add them together in weighted proportion using PixelMath in PixInsight. Assuming FWHM and other quality factors are not too different, I usually just use SNR as the basis for the weighting. The SNR estimates are made directly comparable by estimating signal and background in two identical sub-fragments of the two aligned images, one in a clear signal area and the other deemed 'background', and using the Statistics tool to measure the signal and signal std-dev in these.

I think that this works pretty well, but I'd be interested in your comments. It's just a procedure that I made up because it seemed a logical enough way forward.

Tim
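The patch-based procedure described above might look like the following in outline. This is a sketch with invented pixel values, not a PixInsight script. Two assumptions are worth flagging: noise is taken from the standard deviation of the background patch (the post is not specific on this), and the blend weights are proportional to SNR squared, which is the inverse-variance choice rather than weighting by SNR itself. `patch_snr` and `blend_weights` are hypothetical helper names.

```python
from statistics import mean, pstdev

def patch_snr(signal_patch, background_patch):
    """Background-subtracted signal divided by background scatter."""
    background = mean(background_patch)
    noise = pstdev(background_patch)
    return (mean(signal_patch) - background) / noise

def blend_weights(snr_a, snr_b):
    """Inverse-variance weights for two images on the same signal scale."""
    w_a = snr_a ** 2 / (snr_a ** 2 + snr_b ** 2)
    return w_a, 1.0 - w_a

# Invented pixel samples from the same two regions of two aligned images.
a_signal, a_background = [0.52, 0.50, 0.48, 0.50], [0.10, 0.12, 0.08, 0.10]
b_signal, b_background = [0.50, 0.50, 0.50, 0.50], [0.10, 0.14, 0.06, 0.10]

snr_a = patch_snr(a_signal, a_background)
snr_b = patch_snr(b_signal, b_background)
w_a, w_b = blend_weights(snr_a, snr_b)

# Per-pixel blend, here applied to the signal patches as a stand-in image.
blended = [w_a * pa + w_b * pb for pa, pb in zip(a_signal, b_signal)]
print(round(snr_a, 2), round(snr_b, 2), round(w_a, 3), round(w_b, 3))
```

Measuring both images in identical regions keeps the two SNR estimates comparable, as the post points out; the resulting pair of weights is what would go into a weighted sum of the two frames.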


John Hayes:

Wei-Hao Wang:

One subtle and yet important thing:

Luminance of an RGB color image is not R+G+B. Luminance contains more contribution from G than R and B. An L-filter mono image is closer (but not identical) to R+G+B than the luminance of RGB.

From S/N ratio's point of view, R+G+B could be slightly better than the luminance of RGB. But you will have some loss of color fidelity after you use this R+G+B to replace luminance, because as I said above, R+G+B is not true luminance. In some sense, you are sacrificing the color fidelity by gaining the small amount of S/N.

Wei-Hao,

I completely agree. I just want to add that the best way to extract an L channel from RGB data is to convert it to LAB space. Then you can either substitute the true Lum data to recombine the image to get an LRGB result -or- combine the syn-Lum data with the true Lum data to substitute back into the LAB data for conversion back to LRGB. The tricky thing about combining the syn-Lum with tru-Lum is that you want to do a weighted average to get the statistics right. I've done that by making a copy of each so that I can use the ImageIntegration tool along with its weighting functions. The image copies are necessary because the ImageIntegration tool requires at least three images. By making copies, you don't disturb the statistics and you get around that minimum number of images requirement.

John

I think part of the problem is that the CIELAB color space is non linear and intended to mimic human perception. This is why captured luminance, which is linearly related to incident photons weighted by the spectral response of the sensor, is not readily correlated to the L* from the conversion of RGB to L*a*b*. This is where a lot of people struggle, I think, in doing LRGB combine. If not done right, the colors look washed out. The displayed image is, in the end, RGB, so the replacement of the “luminance” from an RGB image is, in the end, a redistribution of the captured luminance frame in some way to the three color channels.
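Two points from this exchange, that the luminance of an RGB image is not R+G+B and that CIE L* is a nonlinear function of it, can be illustrated with the standard formulas. This is a sketch under one loud assumption: the RGB values are linear and use sRGB/Rec.709 primaries with a D65 white point, which astronomical R, G, B filters do not match exactly. The function names are made up for illustration.

```python
def linear_luminance(r, g, b):
    """CIE Y of linear RGB, assuming sRGB/Rec.709 primaries (D65)."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def lightness(y, y_white=1.0):
    """CIE L* (0 to 100) from relative luminance Y."""
    t = y / y_white
    delta = 6.0 / 29.0
    if t > delta ** 3:
        f = t ** (1.0 / 3.0)                    # cube-root branch
    else:
        f = t / (3.0 * delta ** 2) + 4.0 / 29.0  # linear toe near black
    return 116.0 * f - 16.0

# Three pixels with the same R+G+B but very different luminance.
for name, rgb in (("red", (1, 0, 0)), ("green", (0, 1, 0)), ("blue", (0, 0, 1))):
    y = linear_luminance(*rgb)
    print(f"{name:5s} R+G+B={sum(rgb)}  Y={y:.4f}  L*={lightness(y):.1f}")

# Doubling Y does not double L*.
print(round(lightness(0.1), 1), round(lightness(0.2), 1))
```

Green dominates Y, which matches the remark that luminance contains more contribution from G than from R and B, and the last line shows why a linearly captured L frame does not map one-to-one onto L*.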


Tim Hawkes:

John Hayes:

Scott Badger:

John, I'm interested in hearing more about the technique you describe. I assume a shot L (or SuperL) can be used in place of the syn-lum, but when integrating with the tru-lum, how is the weighting used to favor the profile(?) of the tru-lum? And would it defeat my purpose anyhow; essentially losing the resolution gain of my shot L?

The problem is easy to understand using an extreme case. Let's say that you've got 100 hours of RGB data and a single Lum frame. If you extract the synthetic Lum from your RGB data, it will be much cleaner than your single frame of true Lum. If you simply average those two frames your SNR will go down--and that's no good! The ONLY reason to combine synthetic-Lum with real Lum is to increase SNR. That's why you have to properly weight the two contributions in order to get the statistics "right". You can combine the syn-Lum with true-Lum using SNR to weight the contributions of each in the ImageIntegration tool. However, the ImageIntegration tool offers what I think might be an even better way to weight the combination: Exposure Time. That's probably the best way to combine the two data sets. Just make sure that your syn-lum data contains the correct total exposure time in the meta-data (i.e. the FITS/XISF header).

One of these days, I'll have to play with some images and generate some statistics to show what works the best. If I had any software chops left, this would make for a great tool in PI. Just feed it RGB and Lum data, check a few boxes telling it what you want to do and have it automatically spit out a proper LRGB combination. (Yes... I know about the LRGB combination tool in PI but I don't think that's doing the "right" calculation.)

John

Hi John,

When combining LUM images (say from two separate nights' viewing, or equally a syn-lum and a lum) I usually first align and then add them together in weighted proportion using PixelMath in PixInsight. Assuming FWHM and other quality factors are not too different, I usually just use SNR as the basis for the weighting. The SNR estimates are made directly comparable by estimating signal and background in two identical sub-fragments of the two aligned images, one in a clear signal area and the other deemed 'background', and using the Statistics tool to measure the signal and signal std-dev in these.

I think that this works pretty well, but I'd be interested in your comments. It's just a procedure that I made up because it seemed a logical enough way forward.

Tim

Tim,

I think that works. You are just "manually" doing what I'm suggesting using the ImageIntegration tool to do. The main thing is to make sure that the data is properly weighted when you do the summation so that you don't come up with a statistically incorrect result.

John


Arun H:

I think part of the problem is that the CIELAB color space is non linear and intended to mimic human perception. This is why captured luminance, which is linearly related to incident photons weighted by the spectral response of the sensor, is not readily correlated to the L* from the conversion of RGB to L*a*b*. This is where a lot of people struggle, I think, in doing LRGB combine. If not done right, the colors look washed out. The displayed image is, in the end, RGB, so the replacement of the “luminance” from an RGB image is, in the end, a redistribution of the captured luminance frame in some way to the three color channels.

Arun,

You are right. According to PI:

"The CIE L*a*b* color space. CIE L*, or lightness, is a nonlinear function of the luminance (or CIE Y), while CIE a* and CIE b* are two nonlinear chrominance components. The CIE a* component represents the ratio between red and green for each pixel, and CIE b* represents the ratio between yellow and blue."

In practice, I've never seen much of a difference between "lightness" and "luminance" but I also haven't looked very hard at it. I don't do LRGB combination on linear data. Instead, I first calibrate the colors in my RGB data and process what is essentially a finished, stretched RGB image. Then I process the Lum data to produce a finished, stretched Lum image. I then extract the Lightness component from a LAB decomposition of the RGB image and confirm that it looks "similar" to my "finished" Lum image, and then reconstruct a new LRGB image by substituting my Lum data for the Lightness component and forming an image from the new LAB data. Since you are dealing with stretched data, the colors do not come out washed out with this approach.

Perhaps I will experiment doing the same thing with CIE XYZ, which PI describes as:

"The CIE XYZ linear color space. CIE Y is the linear luminance, while CIE X and CIE Z are two linear chrominance components."

This is how to easily get at the "linear luminance" component of the image. I'll be surprised if it makes a big difference but that's how to get around using the nonlinear lightness component of the data instead of the linear luminance.

John
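For the linear-luminance route, one simple way to picture "redistributing the captured luminance over the three color channels" is to scale each pixel's RGB by the ratio of new to old luminance. To be clear about what this is: a minimal sketch of ratio scaling in linear RGB, not the Lab substitution described above and not what PixInsight's tools compute. It assumes linear data and sRGB/Rec.709 luminance coefficients; `substitute_luminance` is a hypothetical helper.

```python
def luminance(rgb):
    """CIE Y of a linear RGB pixel, assuming sRGB/Rec.709 primaries."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def substitute_luminance(rgb, new_y, eps=1e-12):
    """Rescale a linear RGB pixel so its luminance becomes new_y.

    Channel ratios (the colour) are preserved; only brightness changes.
    """
    old_y = luminance(rgb)
    if old_y < eps:
        # No usable colour information: fall back to a grey pixel.
        return (new_y, new_y, new_y)
    scale = new_y / old_y
    return tuple(channel * scale for channel in rgb)

rgb_pixel = (0.20, 0.10, 0.05)   # invented pixel from the RGB image
lum_pixel = 0.30                 # invented value from the L frame

out = substitute_luminance(rgb_pixel, lum_pixel)
print([round(c, 4) for c in out], round(luminance(out), 4))
```

Because every channel is multiplied by the same factor, the R:G:B ratios survive while the luminance matches the L frame. Results are not clipped here, so bright pixels can exceed 1.0.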


About combining an L filter image with the synthetic gray-scale from RGB, I usually don't do that. This is because:

1. I typically spend about half of the total integration time on L, and 1/6 on each of R,G,B (roughly). So although adding RGB to L can improve the S/N, the improvement is usually quite small. Precisely speaking, every small improvement should be welcome. However, because of the next point, this small improvement becomes not worthwhile.

2. My seeing is variable. Between weeks, it can vary from 1" to 2" or worse. Even within a night, on some nights it can go from 1.8" to 1.2". Because of this, I decide what to image in the next couple of hours based on the current seeing. When seeing is good (<1.5", or <1.3", depending on targets), I shoot L. When seeing is mediocre, I shoot R,G,B. When seeing is bad (>2"), I close the roof and go to sleep. So my RGB images almost always have poor image quality compared to L. In such a case, combining L and RGB does harm to image sharpness and makes the PSF shape ugly.

I encourage people to monitor seeing and then decide whether to shoot L or RGB. This will give you optimal image sharpness in your LRGB pictures. But of course, this requires extra effort. You can’t simply prepare a full night of NINA script, launch it, and go to sleep. You need to dynamically adjust your plan every few hours according to the conditions.
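The seeing-based decision rule above is simple enough to write down. This is only a sketch of the logic as described in the post, using its thresholds as defaults; the function name `choose_plan` and its return strings are made up, and it is not tied to NINA or any other sequencer.

```python
def choose_plan(fwhm_arcsec, good_below=1.5, bad_above=2.0):
    """Pick what to shoot for the next block of time from measured FWHM.

    good_below may be tightened (e.g. to 1.3) for demanding targets.
    """
    if fwhm_arcsec < good_below:
        return "L"            # best seeing goes to luminance
    if fwhm_arcsec > bad_above:
        return "close roof"   # too poor to be worth collecting
    return "RGB"              # mediocre seeing is fine for colour

# Invented FWHM readings taken every few hours through a night.
for fwhm in (1.8, 1.4, 1.2, 2.3):
    print(fwhm, "->", choose_plan(fwhm))
```

Re-running the check every few hours, rather than once at dusk, is the "extra effort" the post refers to.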


I feel the topic is a little meatless. If you have time, just take more L and also RGB as well. Please do not ignore RGB data. Sufficient RGB data can reduce the color noise significantly in the post-processing. I do not play with tricky and complicated post-processing approaches. When I get sufficient data, post-processing is pretty simple.

Yuexiao


Wei-Hao Wang:

About combining an L filter image with the synthetic gray-scale from RGB, I usually don't do that. This is because:

1. I typically spend about half of the total integration time on L, and 1/6 on each of R,G,B (roughly). So although adding RGB to L can improve the S/N, the improvement is usually quite small. Precisely speaking, every small improvement should be welcome. However, because of the next point, this small improvement becomes not worthwhile.

2. My seeing is variable. Between weeks, it can vary from 1" to 2" or worse. Even within a night, on some nights it can go from 1.8" to 1.2". Because of this, I decide what to image in the next couple of hours based on the current seeing. When seeing is good (<1.5", or <1.3", depending on targets), I shoot L. When seeing is mediocre, I shoot R,G,B. When seeing is bad (>2"), I close the roof and go to sleep. So my RGB images almost always have poor image quality compared to L. In such a case, combining L and RGB does harm to image sharpness and makes the PSF shape ugly.

I encourage people to monitor seeing and then decide whether to shoot L or RGB. This will give you optimal image sharpness in your LRGB pictures. But of course, this requires extra effort. You can't simply prepare a full night of NINA script, launch it, and go to sleep. You need to dynamically adjust your plan every few hours according to the conditions.

100% agree with this, a tactic I employ as well.


John Hayes:

Scott Badger:

John, I'm interested in hearing more about the technique you describe. I assume a shot L (or SuperL) can be used in place of the syn-lum, but when integrating with the tru-lum, how is the weighting used to favor the profile(?) of the tru-lum? And would it defeat my purpose anyhow; essentially losing the resolution gain of my shot L?

The problem is easy to understand using an extreme case. Let's say that you've got 100 hours of RGB data and a single Lum frame. If you extract the synthetic Lum from your RGB data, it will be much cleaner than your single frame of true Lum. If you simply average those two frames your SNR will go down--and that's no good! The ONLY reason to combine synthetic-Lum with real Lum is to increase SNR. That's why you have to properly weight the two contributions in order to get the statistics "right". You can combine the syn-Lum with true-Lum using SNR to weight the contributions of each in the ImageIntegration tool. However, the ImageIntegration tool offers what I think might be an even better way to weight the combination: Exposure Time. That's probably the best way to combine the two data sets. Just make sure that your syn-lum data contains the correct total exposure time in the meta-data (i.e. the FITS/XISF header).

One of these days, I'll have to play with some images and generate some statistics to show what works the best. If I had any software chops left, this would make for a great tool in PI. Just feed it RGB and Lum data, check a few boxes telling it what you want to do and have it automatically spit out a proper LRGB combination. (Yes... I know about the LRGB combination tool in PI but I don't think that's doing the "right" calculation.)

John

Interesting idea about combining the luminance of the L filter and the RGB filters. How does exposure time work in this context? If you have, say, 10 hours of L and 10 hours of RGB data, wouldn't the SNR from the L data be significantly higher than that of the equivalent exposure time of RGB data?


Wei-Hao Wang:

About combining an L filter image with the synthetic gray-scale from RGB, I usually don't do that. This is because:

1. I typically spend about half of the total integration time on L, and 1/6 on each of R,G,B (roughly). So although adding RGB to L can improve the S/N, the improvement is usually quite small. Precisely speaking, every small improvement should be welcome. However, because of the next point, this small improvement becomes not worthwhile.

2. My seeing is variable. Between weeks, it can vary from 1" to 2" or worse. Even within a night, on some nights it can go from 1.8" to 1.2". Because of this, I decide what to image in the next couple of hours based on the current seeing. When seeing is good (<1.5", or <1.3", depending on targets), I shoot L. When seeing is mediocre, I shoot R,G,B. When seeing is bad (>2"), I close the roof and go to sleep. So my RGB images almost always have poor image quality compared to L. In such a case, combining L and RGB does harm to image sharpness and makes the PSF shape ugly.

I encourage people to monitor seeing and then decide whether to shoot L or RGB. This will give you optimal image sharpness in your LRGB pictures. But of course, this requires extra effort. You can’t simply prepare a full night of NINA script, launch it, and go to sleep. You need to dynamically adjust your plan every few hours according to the conditions.

Hey Wei-Hao,

interesting. What parameters do you look at to predict the seeing? hfr?

cs

anderl


Anderl:

Hey Wei-Hao,

interesting. What parameters do you look at to predict the seeing? hfr?

cs

anderl

Focusing. On a good night (no cloud, low humidity after sunset), I open the roof shortly after sunset to allow the scope to cool down for 0.5 to 1 hr before twilight ends. In this period, I conduct a few coarse focusing runs (big focus steps between exposures). Typically I should see the FWHM at best focus progressively improve as the scope cools down. (At the same time, I progressively decrease the focus step to reach finer results.) This gives me a very good idea about the seeing for the beginning of the night, and allows me to decide what to image. Then as the night goes on, I check the FWHM from time to time, and I can adjust my plan accordingly.


Yuexiao Shen:

I feel the topic is a little meatless. If you have time, just take more L and also RGB as well. Please do not ignore RGB data. Sufficient RGB data can reduce the color noise significantly in the post-processing. I do not play with tricky and complicated post-processing approaches. When I get sufficient data, post-processing is pretty simple.

Yuexiao

It's always true that more data will lead to better results. When one has an infinite amount of time, he/she should go RGB only and the result will be infinitely better than an LRGB image taken under a time constraint. Unfortunately this is not the reality for most of us. Many of us only have a limited number of clear nights, and have multiple targets that we want to try. So practically, there is always a time limit for a target. For a challenging target or a target that we really want to make better, sometimes we can allocate 2x or 3x or 4x more time to it. But there is always a limit. Under a time limit, for continuum objects, it is almost always true that an LRGB composition gives a visually better result than an RGB composition of the same total integration time.


Scott Badger:

I shoot L when seeing is best and R, G, & B otherwise.

I do the same, for the same reasons that you describe. I also get improved resolution in the LUM by using shorter subs and stacking more, plus sampling at a resolution that gives BlurXTerminator more headroom. For the RGB, unless the weather forecast is unusually good, I use an OSC, because there are so few usable (and generally unpredictable) nights that it works better to avoid ending up with lots of just two colours and waiting forever for the third.


Ashraf AbuSara:

Interesting idea about combining the luminance of the L filter and the RGB filters. How does exposure time work in this context? If you have say 10 hours of L and 10 hours of RGB data, wouldn't the SNR from the L data be significantly higher than that of the equivalent exposure time of RGB data?

Yes, if it's just 10 hours combined of RGB data (or 10 hrs OSC), but if you have 10 hours each of R, G, and B (30 hours combined), then there's no difference.

On another note, thought I'd tally up the 'luminances' at play:

L filter

Clear (or no) filter

L* - the CIELAB lightness component or non-linear 'perceived' luminance extracted from the RGB (the lightness tool in PI)

CIE Y linear luminance extracted from the RGB

Integration of the R, G, and B channels

Integration of the R, G, B, and L channels (Superlum)

Any others?

Cheers,

Scott
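For anyone who wants to check the 10-hour arithmetic in the reply above, here is a minimal sketch. It assumes an idealized case that nobody in the thread measured: the L filter passes exactly the sum of what R, G, and B pass, and every stack is purely shot-noise limited, so SNR is the square root of the photons collected. The rate constants and function names are made up for illustration.

```python
import math

# Assumption: one color filter passes 1 unit of photons per hour,
# and the L filter passes all three bands, so 3 units per hour.
RATE_PER_COLOR = 1.0
RATE_L = 3 * RATE_PER_COLOR

def snr_l(hours_l):
    """Shot-noise-limited SNR of an L stack: sqrt(photons collected)."""
    return math.sqrt(RATE_L * hours_l)

def snr_rgb_luminance(hours_r, hours_g, hours_b):
    """SNR of the luminance obtained by summing the R, G, and B stacks."""
    photons = RATE_PER_COLOR * (hours_r + hours_g + hours_b)
    return math.sqrt(photons)

l_10h = snr_l(10)                                     # 10 hrs of L
rgb_10h_total = snr_rgb_luminance(10 / 3, 10 / 3, 10 / 3)  # 10 hrs RGB combined
rgb_30h_total = snr_rgb_luminance(10, 10, 10)         # 10 hrs each of R, G, B

print(f"L 10h vs RGB 10h combined: {l_10h / rgb_10h_total:.2f}x")
print(f"L 10h vs RGB 30h combined: {l_10h / rgb_30h_total:.2f}x")
```

Under these assumptions, 10 hours of L matches 30 hours of RGB and beats 10 hours of combined RGB by a factor of sqrt(3). Real filters, read noise, and sky background will shift the numbers.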


Wei-Hao Wang:

Yuexiao Shen:

I feel the topic is a little meatless. If you have time, just take more L and also RGB as well. Please do not ignore RGB data. Sufficient RGB data can reduce the color noise significantly in the post-processing. I do not play with tricky and complicated post-processing approaches. When I get sufficient data, post-processing is pretty simple.

Yuexiao

It's always true that more data will lead to better results. With an infinite amount of time, one should go RGB only, and the result would be infinitely better than an LRGB image taken under a time constraint. Unfortunately, this is not the reality for most of us. Many of us have only a limited number of clear nights and multiple targets that we want to try, so in practice there is always a time limit for a target. For a challenging target, or one that we really want to improve, we can sometimes allocate 2x, 3x, or 4x more time. But there is always a limit. Under a time limit, for continuum objects, it is almost always true that an LRGB composition gives a visually better result than an RGB composition of the same total integration time.

The discussion about whether to take Lum, and if so how to process it with the rest, is always interesting, but like many other discussions it tends to diverge into stereotypes, and many things get mixed up easily (color fidelity, SNR, ...). Wei-Hao summarized the essence very nicely. Lum makes visually more appealing images, but Lum brings little to no information into a properly taken RGB image. So Lum makes images nicer, but not necessarily or always better. Perhaps Lum would show its best when processed and published as a monochromatic image. When processing a very asymmetric LRGB image (L having a much longer integration time than R, G, and B together), what do we in fact do? We paint a perfect, high-SNR Lum image with relatively poor and noisy RGB data, so I wonder whether one can ever get the colors right. This is just my personal thinking, not meant to challenge anyone or argue what is wrong and what is right.


Scott Badger:

Ashraf AbuSara:

Interesting idea about combining the luminance of the L filter and the RGB filters. How does exposure time work in this context? If you have say 10 hours of L and 10 hours of RGB data, wouldn't the SNR from the L data be significantly higher than that of the equivalent exposure time of RGB data?

Yes, if it's just 10 hours combined of RGB data (or, 10 hrs OSC), but if you have 10 hours each of R, G, and B (30 hours combined), then there's no difference.

On another note, thought I'd tally up the 'luminance's' at play:

L filter

Clear (or no) filter

L* - the CIELAB lightness component or non-linear 'perceived' luminance extracted from the RGB (the lightness tool in PI)

CIE Y linear luminance extracted from the RGB

Integration of the R, G, and B channels

Integration of the R, G, B, and L channels (Superlum)

Any others?

Cheers,

Scott

But you would be weighting the 30 hours of RGB data 3 times more than the 10 hours of L data, when in reality they should be weighted equally?

Not to mention that most folks would typically get equal or more L data than RGB data.


Stjepan Prugovečki:

Perhaps Lum would show its best when processed and published as a monochromatic image.

Sometimes b/w versions of our targets can be pretty compelling, though more often nebulae imo, so the NB integration instead of Lum. The one galaxy I like in b/w is M51. But... when processing a Lum to be combined with RGB, it generally should not 'show its best'.

Stjepan Prugovečki:

We have enough to chew on without diving into that wormhole!... And something of a red herring, I think. Aren't our targets generally so dim that we wouldn't perceive any color, no matter how close our vantage?

Cheers,

Scott


Ashraf AbuSara:

But you would be weighting the 30 hours of RGB data 3 times more than the 10 hours of L data, when in reality they should be weighted equally?

Not to mention that most folks would typically get equal or more L data than RGB data.

Not sure I follow... I was saying that the luminance from a 1:1:1:1 acquisition ratio (10 hrs L, 10 hrs R, 10 hrs G, 10 hrs B) will have the same SNR as the RGB, so no gain. I generally follow a 2:1:1:1 ratio, which doesn't gain me much SNR, but I'm mostly using the L to counter poor seeing, as others have mentioned. For better SNR, 3:1:1:1 or 4:1:1:1 seem more typical.

Cheers,

Scott
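The acquisition ratios mentioned above can be put into rough numbers. This is only a sketch under an idealized assumption that is not stated in the thread: L collects three times the photon rate of a single color filter, and everything is shot-noise limited. The function name `l_snr_gain` is hypothetical.

```python
import math

def l_snr_gain(l_parts, color_parts=1.0):
    """SNR of the L stack relative to the luminance summed from R+G+B,
    for an l_parts : color_parts : color_parts : color_parts time split.
    Assumes L passes 3x the photons per hour of one color filter."""
    photons_l = 3.0 * l_parts
    photons_rgb = 3 * 1.0 * color_parts   # three color stacks, 1 unit/hour each
    return math.sqrt(photons_l / photons_rgb)

# Gain of shot L over RGB-derived luminance for the ratios discussed.
gains = {k: l_snr_gain(k) for k in (1, 2, 3, 4)}
for k, g in gains.items():
    print(f"{k}:1:1:1 -> L SNR is {g:.2f}x the RGB luminance SNR")
```

In this toy model the gain is simply the square root of the L share, so 1:1:1:1 gains nothing and 4:1:1:1 doubles the luminance SNR. It says nothing about resolution, which is the other reason given for shooting L.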


Scott Badger:

Ashraf AbuSara:

But you would be weighting the 30 hours of RGB data 3 times more than the 10 hours of L data, when in reality they should be weighted equally?

Not to mention that most folks would typically get equal or more L data than RGB data.

Not sure I follow.... I was saying that the Luminance from a 1:1:1:1 acquisition ratio (10hrs L, 10hrs R, 10hrs G, 10hrs B) will have the same snr as the RGB, so no gain.

I generally follow a 2:1:1:1 ratio which doesn't gain me much snr, but I'm mostly using the L to counter poor seeing as others have mentioned. For better snr, 3:1:1:1 or 4:1:1:1 seem more typical.

Cheers,

Scott

Sorry if it was not clear, but my original question was to @John Hayes, regarding using the "Image Integration" tool in PI to combine a Super Lum from L and RGB data, using exposure time instead of SNR to weight the frames.
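To make the exposure-time versus SNR weighting question concrete, here is a toy comparison. It is not how PixInsight computes its weights. It assumes the four masters have been normalized to the same signal level, that L collects three times the photon rate of one color filter, and that noise is pure shot noise. All names and rates are hypothetical.

```python
import math

def combined_snr(weights, snrs):
    """SNR of a weighted mean of masters normalized to signal = 1.
    Each master then has noise sigma_i = 1 / snr_i."""
    signal = sum(weights)
    noise = math.sqrt(sum((w / s) ** 2 for w, s in zip(weights, snrs)))
    return signal / noise

hours = {"L": 10.0, "R": 10.0, "G": 10.0, "B": 10.0}
rate = {"L": 3.0, "R": 1.0, "G": 1.0, "B": 1.0}   # assumed relative photon rates

# Shot-noise-limited SNR of each master: sqrt(photons collected).
snrs = [math.sqrt(rate[f] * hours[f]) for f in hours]

exposure_weights = [hours[f] for f in hours]   # weight by exposure time
inv_var_weights = [s ** 2 for s in snrs]       # weight by 1/sigma^2 (SNR-based)

snr_exposure = combined_snr(exposure_weights, snrs)
snr_inv_var = combined_snr(inv_var_weights, snrs)

print(f"exposure-time weights: SNR {snr_exposure:.2f}")
print(f"inverse-variance weights: SNR {snr_inv_var:.2f}")
```

With equal hours per filter, exposure-time weighting treats the noisier color masters the same as the L master, so in this model it ends up somewhat below the inverse-variance result. How large the gap is in practice depends on the real filters and noise sources.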
