This is my first real post and, well, it's kind of a doozy.

I'm working on a project with a couple of other AP friends. We collectively gathered a bunch of data on M33.

I gathered Ha and OIII data, and my friends put in a bunch of time using OSC cameras with UV/IR filters. We planned on using both of their sets for the RGB data. One telescope is a 6" Newtonian astrograph, the other an Askar FRA 500 refractor.

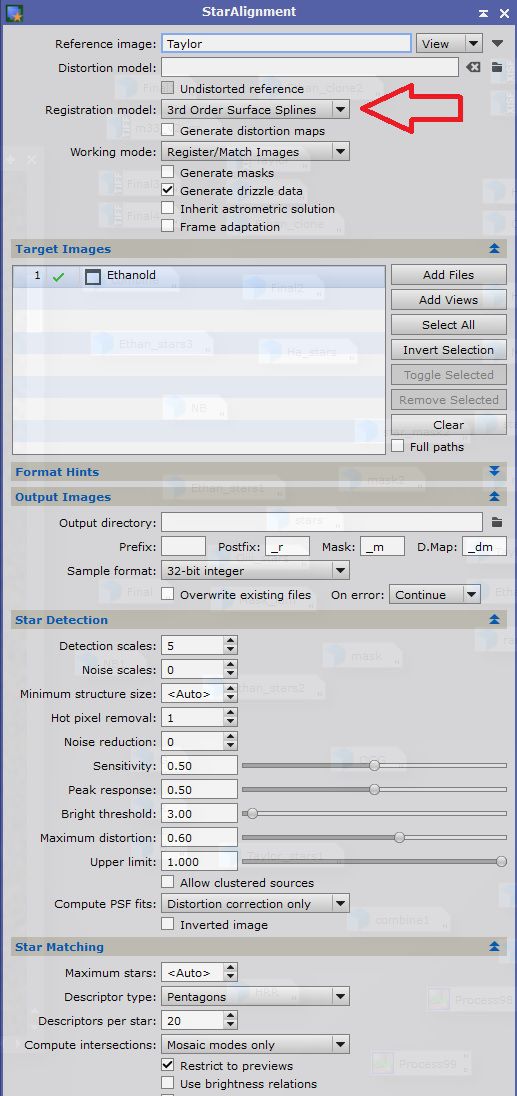

This is an alpha blend of both their data sets using PixelMath in PixInsight. The two images were star-aligned beforehand.
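For reference, an alpha blend is just a per-pixel weighted average of the two registered images. Here is a rough NumPy sketch of the idea, not the actual PixelMath expression I used; the 50/50 weight, the function name `alpha_blend`, and the tiny synthetic arrays are all assumptions for illustration:

```python
import numpy as np

def alpha_blend(img_a, img_b, alpha=0.5):
    """Per-pixel weighted average: alpha * img_a + (1 - alpha) * img_b.

    Both images must already be registered to the same pixel grid.
    alpha=0.5 is an assumed equal weighting, not a recommended value.
    """
    a = np.asarray(img_a, dtype=np.float64)
    b = np.asarray(img_b, dtype=np.float64)
    if a.shape != b.shape:
        raise ValueError("images must have the same shape after alignment")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return alpha * a + (1.0 - alpha) * b

# Hypothetical stand-ins for the two aligned RGB frames (height, width, channels).
newtonian = np.full((2, 2, 3), 0.2)
refractor = np.full((2, 2, 3), 0.6)

blended = alpha_blend(newtonian, refractor, alpha=0.5)
```

Because the blend only averages whatever sits at each pixel, any star that does not land on the same pixel in both frames shows up twice in the result.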

Looks awesome, right!?

Well..

In processing, I'm seeing some major issues that I've spent a few weeks trying to combat.

The curvature issue seems to be most apparent in the corners. The stars are even separating at the very edges, like in this top-left corner.

I can take care of this by using only one person's star data, however...

You can see this still affecting areas near the center of the galaxy, pulling them radially. It's not as extreme, but there is still detail loss compared to what I can get with just one of the two images. I cannot reliably remove these small star clusters in the galaxy, even with StarXTerminator, so I'm at a loss on how to use both data sets without taking a detail hit.

This is the same area with just one of the telescopes. The stars don't pull to one side nearly as much. Both scopes' images have stars like this on their own, so it's not a guiding error on a single scope either.

Does anyone out there who has worked with multiple data sets have any suggestions on this?

Any help would be greatly appreciated!