Kevin Morefield:

Please try it out and let me know what you see.

I will. Looks like I'll have a chance to try it tomorrow. I may have to do 0 and 90 since I've limited my rotators to 0-180. I'll try the luminance filter first.


Timothy Martin:

Kevin Morefield:

Please try it out and let me know what you see.

I will. Looks like I'll have a chance to try it tomorrow. I may have to do 0 and 90 since I've limited my rotators to 0-180. I'll try the luminance filter first.

That should catch it if it's there, but I think 0 and 179 would produce the biggest difference. Here's my 0 and 159 for example (didn't have 180 handy):

If you get a black result when you subtract one from the other, just reverse the equation.

Kevin
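The flat-subtraction test described here can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: `synthetic_flat` and `flat_difference` are hypothetical helpers, the flats are synthetic (a smooth falloff around an illumination peak that is deliberately off-center), and the 180-degree camera rotation is modeled as a plain 180-degree rotation of the frame. Working in signed floating point avoids the all-black result you get when a subtraction is clipped at zero, so there is no need to reverse the equation.

```python
import numpy as np

def synthetic_flat(shape=(200, 300), center=(110.0, 140.0), falloff=0.3):
    """Hypothetical flat: smooth radial falloff around an illumination peak."""
    rows, cols = np.indices(shape)
    r2 = (rows - center[0]) ** 2 + (cols - center[1]) ** 2
    r2_max = shape[0] ** 2 + shape[1] ** 2
    return 1.0 - falloff * r2 / r2_max

def flat_difference(flat_a, flat_b):
    """Normalize each flat to unit mean, then subtract as signed floats."""
    a = flat_a.astype(np.float64) / flat_a.mean()
    b = flat_b.astype(np.float64) / flat_b.mean()
    return a - b

# Flat at 0 degrees: the illumination peak is offset from the frame center.
flat_0 = synthetic_flat()
# Assumption: rotating the camera 180 degrees about the frame center moves
# the illumination peak to the mirrored position on the sensor.
flat_180 = np.rot90(flat_0, 2)

diff = flat_difference(flat_0, flat_180)
print("residual range:", diff.min(), diff.max())
```

With real data you would load the two master flats (same filter, same exposure settings) in place of the synthetic arrays. A residual that is flat to within noise suggests rotation-matched flats are not needed for that setup; a structured residual suggests they are.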


Kevin Morefield:

I didn’t use rotation matched flats for years. But after advent of the new more sensitive, low noise cameras and going deep in faint objects, I started seeing odd circular gradients. I ran flats at 0 and 180, subtracted one from the other and voila - the same circle appeared. After testing rotation matched flats the circular gradient was resolved. I believe this is coming mostly from the central obstruction on the CDK17 but I’m testing it on my refractor too.

The solution is flats for each object, not each night thankfully.

Kevin

I take flats for each rotation angle. The case for my CDK 20 is that the illumination center is offset from the camera rotation axis substantially. In my flat, it's obvious that the brightest spot is not at the center of the image, and it shifts after a camera rotation. Maybe your CDK17 also has this, but not as apparent as mine (so it only shows up after dividing an unmatched flat).


Wei-Hao Wang:

Kevin Morefield:

I didn’t use rotation matched flats for years. But after advent of the new more sensitive, low noise cameras and going deep in faint objects, I started seeing odd circular gradients. I ran flats at 0 and 180, subtracted one from the other and voila - the same circle appeared. After testing rotation matched flats the circular gradient was resolved. I believe this is coming mostly from the central obstruction on the CDK17 but I’m testing it on my refractor too.

The solution is flats for each object, not each night thankfully.

Kevin

I take flats for each rotation angle. The case for my CDK 20 is that the illumination center is offset from the camera rotation axis substantially. In my flat, it's obvious that the brightest spot is not at the center of the image, and it shifts after a camera rotation. Maybe your CDK17 also has this, but not as apparent as mine (so it only shows up after dividing an unmatched flat).

Yes, I'm sure that's it Wei-Hao. I'm also somewhat concerned that shooting sky flats could have a very slight gradient. I'd like to test sky vs. flat panel but I don't have one available in Chile.


Wei-Hao Wang:

I take flats for each rotation angle.

For me, there's a pretty low threshold of what I'm willing to put up with to keep pursuing this avocation. I love doing this, but there's only so much wrangling that I can tolerate before it becomes burdensome. I didn't work 100-hour weeks for 50 years only to wind up doing the same in my old age--for zero compensation. I might consider taking flats for a particular target in certain circumstances. But in most situations, like a 24-panel mosaic where the rotation angles differ by 1 to 3 degrees across the field, there's no way I'll do that. I just won't. I want to produce the best images that I can--but the key words there are "that I can." I don't have either the skill or the desire to produce the best images in the world. That's an admirable goal--but it's one for someone who is much younger and much more energetic than I am. This has to be fun or it's not worth doing.


Kevin Morefield:

Yes, I'm sure that's it Wei-Hao. I'm also somewhat concerned that shooting sky flats could have a very slight gradient. I'd like to test sky vs. flat panel but I don't have one available in Chile.

Twilight sky should always have some gradient built in. However, if that gradient is in the flat, its inverse will equally exist in every calibrated sub, so it should not impact any processes during registration and stacking. For the FoV of CDKs, the gradient in sky should be quite linear. So it should not add additional difficulty to the final gradient removal. So although I always worry about it, in practice I have found no cases where I can say for sure that the gradient in sky flat had any real impact in the final results.

We also have a flat panel in our telescope enclosure. Unfortunately the width of the panel is only about 10% wider than the frame of CDK20. So I kind of suspect that it's not large enough. So I only use it for situations where twilight flat is not possible.

Timothy Martin:

For me, there's a pretty low threshold of what I'm willing to put up with to keep pursuing this avocation. I love doing this, but there's only so much wrangling that I can tolerate before it becomes burdensome. I didn't work 100-hour weeks for 50 years only to wind up doing the same in my old age--for zero compensation. I might consider taking flats for a particular target in certain circumstances. But in most situations, like a 24-panel mosaic where the rotation angles differ by 1 to 3 degrees across the field, there's no way I'll do that. I just won't. I want to produce the best images that I can--but the key words there are "that I can." I don't have either the skill or the desire to produce the best images in the world. That's an admirable goal--but it's one for someone who is much younger and much more energetic than I am. This has to be fun or it's not worth doing.

What you said is quite reasonable. I said I take flats for each rotation angle. This is because I rarely use rotation angles other than 0 and 90 degrees. I try as much as possible to fix my framing exactly east-west or north-south. If you are doing wide-field mosaics with many different rotation angles, then it obviously will be very tedious if you take flats for every angle, and I doubt whether that's necessary. You may try taking flat every 1 or 2 degrees and see how it works.


Timothy Martin:

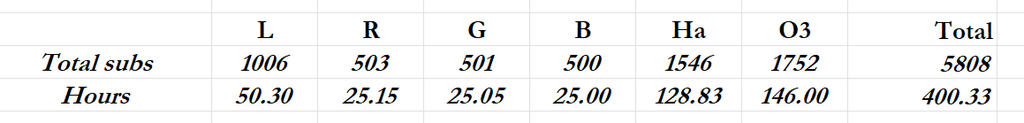

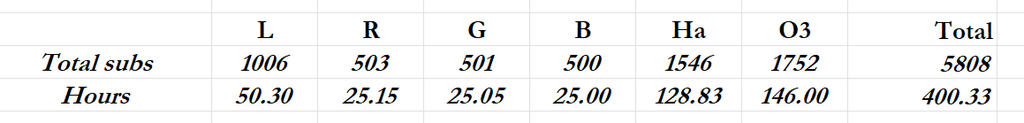

Recently, I've completed capture on a single frame with more than 400 hours of acquisition time--my longest ever, obtained only after suffering the pain equivalent to delivering a breech birth. It's a project I started in 2024, when I got 50 hours of data, and then completed this year by slogging through another 350 hours of data starting in January. 5808 subs for all filters. Here are the basic stats:

When I stacked the entire image, the results were less than pleasing. For example, using the Image Analysis-->SNR script in PI, the Ha master came up with an SNR of 53.38 dB. The Ha stack from last year, about 10 hours of Ha, came up with an SNR of 61.2. Results from all the other filter masters were very similar. The 10 hours of Ha from last year not only had a higher SNR, but also it just looked better and processed better than the 128 hours of data I bled for.

Okay, maybe I had some bad data in those 1546 Ha subs that I missed somehow with Blink and Subframe Selector. That's simply not the case, and here's how I know: I split the data up into chunks of 150 to 200 subs and stacked those chunks separately--eight of them. They each had an SNR of 75+ and all of them looked way better than the full stack of 1546 subs.

(Please note that I did both the full stack and the split stacks in both APP and WBPP. The results were very consistent)

After I stacked the 8 chunks of Ha data into 8 masters, I then stacked those 8 masters using PI's ImageIntegration process. The result was effing spectacular. An SNR of 89.66--and it looks like a million dollars--exactly what I was hoping for. I started processing it and was astounded at how much better it was than the full stack of 1546 subs.

(Again, these results were basically the same in both APP and WBPP)

So my question is: WTF?

Or better still: W. T. Holy. F?

Note: I'm sorry I can't share screen shots or raw files here. The image isn't finished and I don't want to disclose anything about what it is yet. So you'll have to trust me on these numbers and descriptions. But any insights could be helpful.

Without knowing anything at all about the stacking algorithms or the math involved, here are my theories--none of which I'm very confident in.

1. Somehow Mabula and the PI team both failed to account for extreme integration times and their algorithms simply aren't robust enough to handle such things

2. Somehow weighting is playing a role--perhaps weighting lesser quality data over higher quality data when enough lesser quality data is there

3. Rejection algorithms are playing an unexpected role over a very large sample size

All I know is that splitting this project up into ~200-sub stacks and then stacking the results of those chunks creates vastly--vastly superior results. I haven't been this discombobulated since November 5th. Please explain this to me if you can. If no one knows, then I'm just going to change my pre-processing workflow to stacking in chunks of no more than 250 subs, which will be a giant PITA.

I wonder if extremely long integration times would be a thing if we didn't state the length of integration. Just show the image.

I've seen 30-40+ hours of integration that did not look better than my 2 hours.


I'm looking forward to seeing the resulting image from so much data. The only other one I know of by an individual was Rolf Olsen's Centaurus A, with 421h or thereabouts. And it was phenomenal.


BryanHudson:


I wonder if extremely long integration times would be a thing if we didn't state the length of integration. Just show the image.

I've seen 30-40+ hours of integration that did not look better than my 2 hours.

I will agree with this sometimes. And certainly with some targets. Historically for me, my top pick nominations and top picks have all come from sub-20h integration images, with some coming from 2~5h.


BryanHudson:

I wonder if extremely long integration times would be a thing if we didn't state the length of integration.

They would--at least for me. There are a lot of extremely faint targets out there that you're not going to get with 2 hours no matter how good your processing skills are.


Fascinating thread! My procedure is to still use manual calibration. Maybe that is because I am just old-school or maybe it is just a bit too much of a "black box" for me. I take flats any time I rotate the camera system (or open up the system for maintenance), and integrate with NSG, typically making sure that gradient lines do not cross the object (when possible). This is after manual rejection of some frames using Blink, then auto rejection using Subframe Selector. But then I am too faint-hearted (or maybe lazy?) to try that many frames and that much total exposure time. The longest I have done so far is about 600 frames and 88 hours.


Hi Tim,

I've yet to go beyond about 30 hours or so of integration time so I don't have much to add to the conversation, except to tell you that you make me laugh, and laughter is a critical medicine these days!

Jerry

p.s.

What does NSG stand for?


My goodness this is right up my alley!!

I have twin TOA 150s in the desert, and I don’t process anything under 60 to 80 hours. I like doing 100 and 200 projects…

Bray Falls integrates Masters. I don’t think that’s the same results as integrating individual subs.

But hey, he’s got the awards


Jerry Gerber:

What does NSG stand for?

Normalize Scale Gradient. A great script by John Murphy. Here is an explanation by Adam Block.


Bill McLaughlin:

But then I am too faint hearted (or maybe lazy?) to try that many frames and that much total exposure time.

I usually am, too. My typical target is around 40-80 hours. But it depends on the scope and the target. In this case, the focal length is short, and there's not a whole lot of stuff to shoot at 382mm during galaxy season. So I thought I might as well go deep on an area where I know there's a payoff at the end of the rainbow.

Incidentally, three days in, with three computers working, I'm about halfway through stacking this monster in ~200-sub chunks. To paraphrase the late Justice Scalia, 'Extreme integration times are not for sissies.'


Rafa:

Bray Falls integrates Masters. I don’t think that’s the same results as integrating individual subs.

I'm not totally sure what you mean here. I would much prefer just to tee up WBPP and let it do its thing on the whole data set. But that's what this whole thread is about.

In any case, I've long stacked the L, R, G, and B channel masters to create a super-luminance master for broadband images. I usually see an SNR improvement of 10 to 20 percent, which is well worth it. And for narrowband, I create a synthetic luminance master from the channel-combined narrowband masters. I find that a great luminance master is better than all the noise-reduction processes on the planet. And it's far easier to deal with dynamic range and contrast using a good luminance master than it is using a color image.


Timothy Martin:

Rafa:

Bray Falls integrates Masters. I don’t think that’s the same results as integrating individual subs.

I'm not totally sure what you mean here. I would much prefer just to tee up WBPP and let it do its thing on the whole data set. But that's what this whole thread is about.

In any case, I've long stacked the L, R, G, and B channel masters to create a super-luminance master for broadband images. I usually see an SNR improvement of 10 to 20 percent, which is well worth it. And for narrowband, I create a synthetic luminance master from the channel-combined narrowband masters. I find that a great luminance master is better than all the noise-reduction processes on the planet. And it's far easier to deal with dynamic range and contrast using a good luminance master than it is using a color image.

Like you, I also subscribe to the WBPP one-stop shop.

When I heard about Bray Falls' technique (he’s also integrating over 20 masters), I wanted to challenge it as well. I felt I could improve upon it by integrating every sub in one swoop.

Now, with your data and Bray's results, I’m having second thoughts…


You could set the local normalization process to actually output the normalized images then you could blink through them to look for any "funny business" that might cause the integration process to not work the way you want.


Kevin Morefield:

Timothy Martin:

Arny:

Timothy Martin:

I typically only take flats about once every six months.

That is interesting - I take them every night ...

Do you not touch your setup or make any changes for 6 months, and do you not get dust inside in between?

Arny

My setups are remote so I only physically touch them when I'm on site--about once a year. The image trains have fared very well in that I rarely see any new dust motes that create the need for new flats. When it comes to rotation, I'm off the reservation. I shoot with all kinds of rotations all the time and don't take flats when I change rotation angle. I've never seen a problem with that. Any dust particles that manifest themselves in the data are going to rotate with the image train. But interestingly, that's not an issue here. All three sets of flats were taken at the same rotation angle as the target I'm working on here. If I had to take flats every night, I simply wouldn't do this at all. I'd sooner have a colonoscopy without anesthesia.

I didn’t use rotation matched flats for years. But after advent of the new more sensitive, low noise cameras and going deep in faint objects, I started seeing odd circular gradients. I ran flats at 0 and 180, subtracted one from the other and voila - the same circle appeared. After testing rotation matched flats the circular gradient was resolved. I believe this is coming mostly from the central obstruction on the CDK17 but I’m testing it on my refractor too.

The solution is flats for each object, not each night thankfully.

Kevin

Great point, Kevin. My CDK14 has a rather large central 'bright' spot on the flats when you look at them with a stretch, and it's not perfectly symmetrical, requiring me to also take flats for different camera angles.

Pete


One thing I would recommend looking at that I am running into with my own dataset… Look and see how many “rejected” images you have based on SNR weighting, and what sort of weighting your images are getting. I have some H-alpha images I am trying to stack that were taken across a range of lunar phases, and the images taken while the moon was out have muuuccchhh lower weightings than I would have expected. I suddenly had WBPP throwing out 80% of my images because I had a few images taken with minimal light pollution, and those had measurements suggesting everything else was so bad as to either be omitted or to receive weighting under 10%. The end result? My full stack is worse than it was a few days ago when I was only stacking images taken with the moon out.

I would definitely expect a generally higher weighting on nights when there was no moon, but for narrowband data in particular, I would have expected the difference in weighting to be much lower than it seems to be. Ten minute images with background ADU that are just 8-10 counts higher (with a moon) receive weightings in the 7% or 8% range compared to nights with no moon. I would have expected significantly closer weightings. I'm running some tests to see if that is where things are going wrong with my particular dataset, but I don't buy that the difference in quality with a 3nm narrowband filter is as dramatic between moonless nights and, say, first quarter moon.

By default, I was using PSF Signal Weight for my image. The subject covers a fairly small area of sky compared to the overall image.
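One way to put a number on what a lopsided weighting can cost is the effective number of frames, (Σw)²/Σw². For frames of equal noise, that is how many equally weighted frames would average down to the same noise as the weighted stack. A minimal sketch, assuming hypothetical weights (20 frames at 1.0 and 80 frames at 0.08, loosely modeled on the 7-8% weightings described above) and assuming the frames really are of similar quality. It does not reproduce how WBPP computes PSF Signal Weight.

```python
import numpy as np

def effective_frames(weights):
    """Effective frame count of a weighted mean, assuming equal per-frame noise."""
    w = np.asarray(weights, dtype=np.float64)
    return w.sum() ** 2 / (w ** 2).sum()

# Hypothetical weights: 20 dark-sky frames at full weight and 80 moonlit
# frames down-weighted to 8 percent.
weights = np.concatenate([np.full(20, 1.0), np.full(80, 0.08)])

n_eff = effective_frames(weights)
print(f"{len(weights)} frames stacked, about {n_eff:.0f} effective frames")
```

Under those assumptions the 100-frame stack behaves like roughly 34 equally weighted frames. If the down-weighted frames are genuinely much noisier, the low weights are doing their job; the loss only matters when the weighting metric overstates the quality gap.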


Kevin Morefield:

Please try it out and let me know what you see. I'm pretty sure that any consistent difference in the flats would have an equal (and opposite) consistent difference in the lights. I didn't notice it till I was looking at background structures with a single ADU or less in differentiation.

To update this for Kevin--I haven't gotten to it yet. We've been having good weather in NM for the last week, so I've been capturing data rather than flats. The observatory is fairly dark inside during the day, but not completely dark. I'd prefer running this test at night when it's very dark in there in order to get really reliable results from this test.

I'm sure I'll probably find that you're completely right about this--as you always are. But I'm not looking forward to taking a bite from the fruit of that tree of knowledge. Even only shooting flats on a per-rotation-angle basis, it means a whole new level of complexity. Managing 210 additional Moravian C5 FITS files for almost every image, creating flats from them, and storing them is a special kind of pain. But if it results in an improvement in quality, no matter how minuscule, I guess I'll be forced to stop whining about it and just do it.

The good news is that this is completely automatable in NINA given that all three of my remote scopes are equipped with Alto-Giotto combos. I actually already have the code for it in my main sequence template, so I'll just need to activate that whenever I shoot a new target. Sure beats the days when I thought I had to get up at 4:30am every morning and go outside to do this manually in the dark.


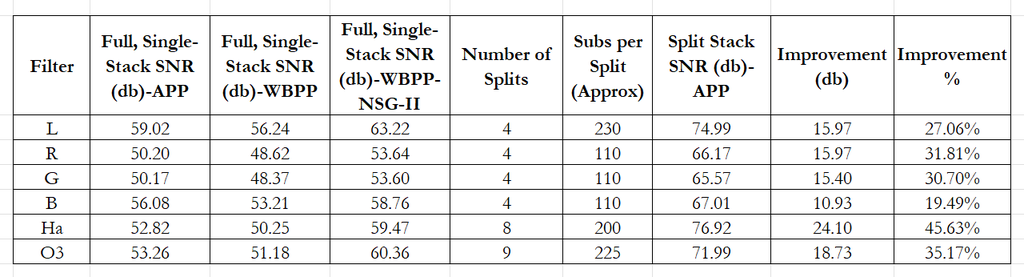

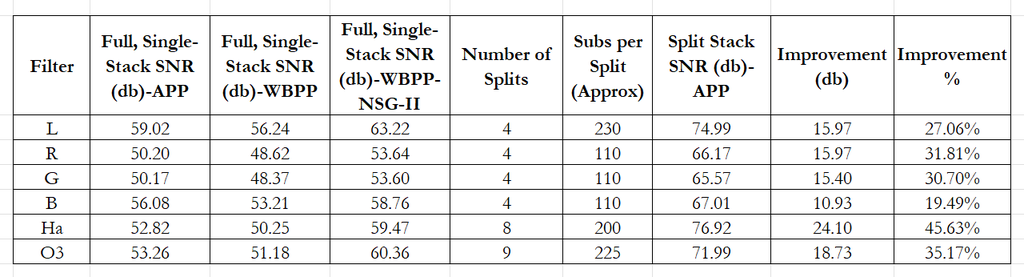

I've finished working this issue just about every which way I can. I did (1) a full stack of all subs for all channels using AstroPixelProcessor (APP), (2) a full stack of all subs for all channels using WBPP, (3) a full integration of all subs for all channels using PI's ImageIntegration after spending a mind-numbing number of hours with Normalize Scale Gradient (NSG), and (4) a split integration, in which I stacked the subs in chunks using APP and then combined the resulting masters into final channel masters with PI's ImageIntegration (with rejection turned off). I then ran the SNR script on all the final masters generated from this mess and put those results in a spreadsheet.

The results were a bit surprising to me. Mostly I was struck by the 5%-ish loss in quality going from APP to WBPP. I ran some comparisons a couple of years ago, before WBPP had Local Normalization Correction (LNC), and determined that I was getting better quality stacks out of APP. I hadn't really done much with WBPP since, having settled into habit. I'm a bit surprised to see that while the gap has narrowed, at least in this example, the addition of LNC hasn't completely closed it.

I'll note that while NSG did produce the best results of the full-stack sets, it was still nowhere near the split-stack results. Some of that, but probably not all of it, is the result of having a loose nut behind the wheel. This was my very first foray into NSG, so I'm sure I'm not doing it in the best possible way. I just followed John Murphy's five-part tutorial as best I could.

There are two obvious paths here I have yet to go down. First, I did not use WBPP to produce the split stacks. Second, I did not use WBPP in combination with NSG to produce the split stacks. I'll save that for a rainy week. I felt like the results I got from the APP split stacks were pretty darn good, so I stopped there for now. I do have a life outside astrophotography.

I generally loathe the APP user interface and find WBPP to be much easier to use, but when you put NSG into the mix, the scale tips hard back the other way. These extreme integration times are not something I can or will do very often, so it might well be worth the trouble. I'm still finalizing the image, so I'll post it later today or tomorrow.

The bottom line here is that I think I've found a new tool to put in my arsenal. But it's no panacea. I used the same approach on another image I just finished capturing. That image was only ~130 hours total, with ~90 hours of Ha captured over the last two months. I used the split method on the Ha, and that result actually turned out worse than the single, full stack. This leads me to believe that the split approach likely works best when data capture is spread out over many months or even years, probably because stacking in contemporaneous chunks allows LNC to function better. I have another image I'm capturing that just passed 350 hours, heading to 400, that I can try this on in a month or two. It'll be interesting to see what happens there.

I do still plan to execute the flat exercise @Kevin Morefield recommended. But note that it's not relevant here, because all the flats for this image were taken at 0-degrees sky rotation, which is the same rotation angle as the image in question.
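For readers unfamiliar with the split approach in item (4), here is a minimal sketch of its structure: integrate the subs in consecutive chunks, then average the chunk masters with no rejection. It assumes plain pixel-wise averaging on toy data. The function names `average_frames` and `split_stack` are hypothetical and do not correspond to anything in APP or PixInsight, and the sketch leaves out the normalization, frame weighting, and rejection that the real tools perform.

```python
def average_frames(frames, weights=None):
    """Pixel-wise weighted mean of equally sized frames (lists of floats)."""
    if weights is None:
        weights = [1.0] * len(frames)
    total = sum(weights)
    n_pixels = len(frames[0])
    return [
        sum(w * f[i] for w, f in zip(weights, frames)) / total
        for i in range(n_pixels)
    ]


def split_stack(frames, chunk_size):
    """Integrate frames in consecutive chunks, then average the chunk masters.

    Each master is weighted by its frame count; no pixel rejection is
    applied at either stage.
    """
    chunks = [frames[i:i + chunk_size] for i in range(0, len(frames), chunk_size)]
    masters = [average_frames(chunk) for chunk in chunks]
    counts = [len(chunk) for chunk in chunks]
    return average_frames(masters, weights=counts)


# Toy data: five 3-pixel "subs", split into chunks of two (last chunk has one).
subs = [
    [10.0, 20.0, 30.0],
    [12.0, 18.0, 33.0],
    [11.0, 22.0, 27.0],
    [9.0, 21.0, 30.0],
    [13.0, 19.0, 30.0],
]
full = average_frames(subs)
split = split_stack(subs, chunk_size=2)
```

In this toy case `full` and `split` agree, because nothing here depends on how the frames are grouped. Any difference seen in practice would have to come from the steps this sketch omits.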

I'd like to point out that PixInsight now has a Local Normalization process built into WBPP that takes the place of the older NSG script. You could save a lot of manual labor if you tried that instead: just load up the lights, darks, and flats into WBPP and press go. I don't understand how or why a stack of all the images is producing less SNR than one made up of sub-stacks, unless there's something funky going on with the weighting scheme, as reported by @Jared Willson just above.
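The puzzlement can be made concrete with some idealized noise bookkeeping. The sketch below assumes independent noise in every sub and inverse-variance weighting, which is a textbook model and not a description of what WBPP or APP actually do. The per-sub noise values are made up for illustration, and `stacked_sigma` is a hypothetical helper name.

```python
import math


def stacked_sigma(sigmas):
    """Noise of an inverse-variance weighted mean of inputs with noise `sigmas`."""
    return 1.0 / math.sqrt(sum(1.0 / s ** 2 for s in sigmas))


# Hypothetical per-sub noise levels (arbitrary units), e.g. varying sky conditions.
sub_sigmas = [1.0, 1.2, 0.9, 1.5, 1.1, 1.3]

# One integration of every sub.
full_stack_sigma = stacked_sigma(sub_sigmas)

# Two sub-stacks, then the two masters combined the same way.
master_a = stacked_sigma(sub_sigmas[:3])
master_b = stacked_sigma(sub_sigmas[3:])
split_stack_sigma = stacked_sigma([master_a, master_b])

print(f"full: {full_stack_sigma:.6f}  split: {split_stack_sigma:.6f}")
```

Under these assumptions the two noise figures are identical, so a measured SNR gap between the full stack and the split stack would point to something outside the model, such as normalization, the weighting scheme, or rejection.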

@Timothy Martin Well, the integration time does not really matter here. The number of single subs is more important. I have processed a lot more frames in my M31 Arc Project (945 luminance images, for example): https://app.astrobin.com/u/pmneo?i=x4gcpi#gallery

I used NSG a lot in that project. I haven't tried the approach of splitting the stack into multiple chunks, but I experimented a lot with NSG to get the best out of it. For my latest projects I used the manual mode of Local Normalization in WBPP, which lets you stack the best 20 or 30 images as the reference. I also figured out that you can apply a decent gradient correction to those reference files before normalization starts (just open a new instance, correct, and save before you select the reference frame). The only things you really need with that large a number of frames are a fast PC and time ;)

CS Philip
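The idea of building the normalization reference from the best 20 or 30 frames can be sketched as follows. This is only an illustration of the selection step, not WBPP's internal implementation. The function name `build_reference` and the per-frame `scores` (any quality metric where higher is better) are assumptions made for the example.

```python
def build_reference(frames, scores, n_best=20):
    """Average the n_best highest-scoring frames into one reference frame.

    frames: list of equally sized frames (lists of floats).
    scores: one quality score per frame; higher means better (hypothetical metric).
    """
    # Rank frame indices by score, best first.
    ranked = sorted(range(len(frames)), key=lambda idx: scores[idx], reverse=True)
    best = [frames[idx] for idx in ranked[:n_best]]
    n_pixels = len(best[0])
    return [sum(f[i] for f in best) / len(best) for i in range(n_pixels)]


# Toy data: four 2-pixel frames; keep the two with the highest scores.
frames = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
scores = [0.2, 0.9, 0.5, 0.8]
reference = build_reference(frames, scores, n_best=2)
```

Any gradient correction of the reference, as described above, would be applied to the result before it is used for normalization.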

John Stone:

I'd like to point out that PixInsight now has a Local Normalization process built into WBPP that takes the place of the older NSG script.

I used LNC in the full WBPP stacks. Maybe he's off base, or maybe he's been overtaken by events, but John Murphy discusses why he thinks NSG is ostensibly superior to LNC. I found that to be the case this time around, but it still wasn't close to the result I got by splitting things up.
