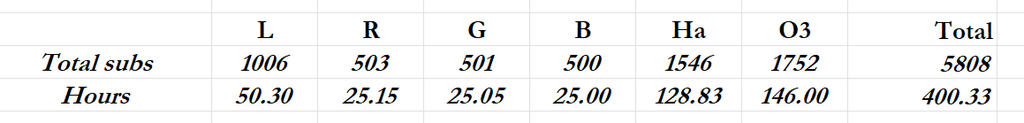

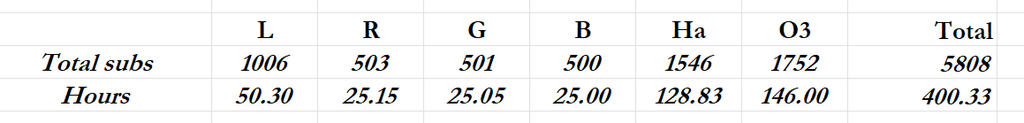

Recently, I completed capture on a single frame with more than 400 hours of acquisition time--my longest ever, obtained only after suffering the pain equivalent of delivering a breech birth. It's a project I started in 2024, when I got 50 hours of data, and then completed this year by slogging through another 350 hours of data starting in January: 5808 subs across all filters. Here are the basic stats:

When I stacked the entire image, the results were less than pleasing. For example, using the Image Analysis > SNR script in PI, the Ha master came up with an SNR of 53.38 dB. The Ha stack from last year, about 10 hours of Ha, came up with an SNR of 61.2 dB. Results from all the other filter masters were very similar. The 10 hours of Ha from last year not only had a higher SNR, it also just looked better and processed better than the 128 hours of data I bled for.

Okay, maybe I had some bad data in those 1546 Ha subs that I somehow missed with Blink and SubframeSelector. That's simply not the case, and here's how I know: I split the data into eight chunks of 150 to 200 subs and stacked those chunks separately. They each had an SNR of 75+ dB, and all of them looked way better than the full stack of 1546 subs. (Please note that I did both the full stack and the split stacks in both APP and WBPP. The results were very consistent.)

After I stacked the 8 chunks of Ha data into 8 masters, I then stacked those 8 masters using PI's ImageIntegration process. The result was effing spectacular: an SNR of 89.66 dB, and it looks like a million dollars, exactly what I was hoping for. I started processing it and was astounded at how much better it was than the full stack of 1546 subs. (Again, these results were basically the same in both APP and WBPP.)

So my question is: WTF? Or better still: W. T. Holy. F?

Note: I'm sorry I can't share screenshots or raw files here. The image isn't finished and I don't want to disclose anything about what it is yet. So you'll have to trust me on these numbers and descriptions. But any insights could be helpful.

Without knowing anything at all about the stacking algorithms or the math involved, here are my theories, none of which I'm very confident in:

1. Somehow Mabula and the PI team both failed to account for extreme integration times, and their algorithms simply aren't robust enough to handle them.
2. Somehow weighting is playing a role, perhaps favoring lower-quality data over higher-quality data when there is enough of the lower-quality data.
3. Rejection algorithms are playing an unexpected role over a very large sample size.

All I know is that splitting this project into ~200-sub stacks and then stacking the results of those chunks produces vastly, vastly superior results. I haven't been this discombobulated since November 5th. Please explain this to me if you can. If no one knows, I'm just going to change my pre-processing workflow to stacking in chunks of no more than 250 subs, which will be a giant PITA.
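For a rough sense of scale, here is a minimal sketch of the textbook expectation for a stack whose noise simply averages down. It assumes every sub is of similar quality with independent noise, and that the SNR script reports dB as `20*log10` of an amplitude signal-to-noise ratio; neither assumption is confirmed in the post, and `expected_gain_db` is a hypothetical helper, not part of PI or APP. Under those assumptions, N times more data should add about `10*log10(N)` dB.

```python
import math

def expected_gain_db(data_ratio: float) -> float:
    """Expected SNR gain in dB when the amount of (equal-quality) data grows by data_ratio.

    Noise falls as 1/sqrt(N), so the amplitude SNR grows by sqrt(data_ratio).
    Quoted as 20*log10(amplitude SNR), that is 20*log10(sqrt(r)) = 10*log10(r).
    """
    return 10.0 * math.log10(data_ratio)

# 10 h of Ha last year vs. 128 h in the full stack (figures from the post).
print(f"10 h -> 128 h: about +{expected_gain_db(128 / 10):.1f} dB expected")

# Eight chunk masters combined with equal weight, relative to a single chunk.
print(f"8 equal chunks: about +{expected_gain_db(8):.1f} dB over one chunk")
```

On these assumptions, the 61.2 dB from 10 hours would be expected to climb toward roughly 72 dB at 128 hours rather than fall to 53.38 dB, and eight ~75 dB chunk masters would land near 84 dB. The chunked result (89.66 dB) even beats that estimate, a hint that the script's figure may not follow this simple model exactly, so treat the comparison as qualitative.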

|


When I started reading your post, I thought the best strategy would be to split that large number of exposures into smaller groups (each group covering 1-2 consecutive hours of the sequence), stack each group normally, run local normalization (or John Murphy's NormalizeScaleGradient script) on the resulting stacks, and then stack them all. Then I read on and found that you did try this and got better results.
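The grouping step can be sketched in a few lines. This is a hypothetical helper, assuming each sub's capture time is already available in hours (for example from its FITS header; reading headers is not shown). A new group starts whenever the gap to the previous sub exceeds `max_gap_h`, the group would span more than `max_span_h` hours, or it already holds `max_subs` subs; the defaults are illustrative, with 250 matching the chunk cap mentioned in the first post.

```python
from typing import List

def group_subs(times_h: List[float], max_gap_h: float = 0.5,
               max_span_h: float = 2.0, max_subs: int = 250) -> List[List[int]]:
    """Split sub indices, ordered by capture time, into consecutive groups.

    A new group starts when the gap to the previous sub exceeds max_gap_h,
    when adding the sub would make the group span more than max_span_h hours,
    or when the current group already holds max_subs subs.
    """
    order = sorted(range(len(times_h)), key=lambda i: times_h[i])
    groups: List[List[int]] = []
    for i in order:
        if groups:
            current = groups[-1]
            start, prev = times_h[current[0]], times_h[current[-1]]
            if (times_h[i] - prev <= max_gap_h
                    and times_h[i] - start <= max_span_h
                    and len(current) < max_subs):
                current.append(i)
                continue
        groups.append([i])
    return groups

# Two hypothetical nights of 5-minute subs: 3 hours, then (a day later) 20 subs.
night1 = [k * 5 / 60 for k in range(37)]
night2 = [24 + k * 5 / 60 for k in range(20)]
print([len(g) for g in group_subs(night1 + night2)])  # → [25, 12, 20]
```

The returned index lists can then drive whatever tool does the actual stacking; the thresholds are the design knobs, trading how uniform conditions are within a group against how many intermediate stacks you end up with.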

When you have many, many exposures, they must come from different nights with possibly very different conditions, with the target in different directions of your local sky. This leads to very different background gradients in the images. Regular stacking and rejection don't work well on this kind of dataset; PI's LocalNormalization and John Murphy's NormalizeScaleGradient script are meant to solve this. However, running either on a huge number of exposures is terribly time-consuming. NormalizeScaleGradient is substantially faster than LocalNormalization, but in your case it can still take an unnecessarily long time. One way around this is to split the images into groups and stack them separately. If conditions within each group were not that different, then stacking without local normalization should be just fine.
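Why should chunked stacking differ from one big stack at all? For a plain average it shouldn't: the size-weighted mean of the group means is exactly the overall mean, so any difference has to come from the steps applied per group or across the whole set (normalization, weighting, rejection). The sketch below checks that identity on one pixel's values from three hypothetical sessions, and adds a crude per-group offset removal as a stand-in for normalization. That stand-in only subtracts a constant per group; LocalNormalization and NormalizeScaleGradient model spatially varying gradients and scale, which this does not attempt.

```python
from statistics import mean, median, pstdev
from typing import List

def flat_mean(groups: List[List[float]]) -> float:
    """Average every sub's value in a single pass."""
    return mean(v for g in groups for v in g)

def hierarchical_mean(groups: List[List[float]], size_weighted: bool = True) -> float:
    """Average each group first, then combine the group means."""
    means = [mean(g) for g in groups]
    weights = [len(g) if size_weighted else 1 for g in groups]
    return sum(w * m for w, m in zip(weights, means)) / sum(weights)

def remove_group_offsets(groups: List[List[float]], reference: float = 0.0) -> List[List[float]]:
    """Shift each group so its median equals `reference` (constant offset only)."""
    return [[v - median(g) + reference for v in g] for g in groups]

# One pixel's background level in three sessions with different sky brightness.
sessions = [[10.0, 10.2, 9.8], [14.0, 14.1], [12.0, 11.9, 12.1, 12.0]]
print(flat_mean(sessions), hierarchical_mean(sessions),
      hierarchical_mean(sessions, size_weighted=False))

normalized = remove_group_offsets(sessions)
print(pstdev([v for g in sessions for v in g]), pstdev([v for g in normalized for v in g]))
```

The unweighted combination of session means differs from the flat mean only because the sessions hold different numbers of subs. Once each session's offset is removed, the scatter a rejection step would see shrinks from the night-to-night spread to the within-night noise.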

|


Wei-Hao Wang:

When you have many, many exposures, they must come from different nights with possibly very different conditions, with the target in different directions of your local sky.

This makes a lot of sense. Thank you, Wei-Hao!

|


Tim,

First, I spit out my coffee laughing when I read your post. Thanks for that…

How did you calibrate, align, and integrate the data? I only recently started using WBPP, and I love it for its ability to cut down on the drudgery of getting the data channels integrated. What I'm still exploring is how to regain more of the control I had when I did everything manually. Some of the operations within WBPP are hidden from view, and depending on how you have the various options set, it may not be properly rejecting data that could be screwing up your final result. In the end, you did what I would have suggested by integrating subsets of the data. Ultimately, you should be able to integrate the full set without any problems, but it would take some experimenting to determine why you aren't getting the right result with the entire data set. I doubt that it's a problem with the ImageIntegration tool. Either way, the quality of the input data can make a huge difference in the output. Doing things manually and inspecting each step is the best way to figure out where the problem lies.

John

|


John Hayes:

Doing things manually and inspecting each step is the best way to figure out where the problem lies.

Thanks so much for taking the time to read and respond, John. I really appreciate it, and I'm glad I could add a chuckle to your day. It looks like I'm getting good results from splitting things up, but that could be just a happy accident. I think your approach is a good long-term way to go about this. I'll work through the process manually and see what I can uncover. That could, however, take quite a while. So hopefully I can complete this particular project soon using elbow grease and good fortune, and then pursue the problem in more detail to develop a better long-term strategy. I've got two more images in the hopper with pretty extreme integration times. While they have served me well in the past, I don't think I can count on my good looks and charm for those other two images.

|


John Hayes:

How did you calibrate, align, and integrate the data?

I guess I should answer your questions. I had one set of flats and darks for last year's data and two other sets for this year's data. All the calibration, alignment, and integration was done first in APP, and then again from scratch in WBPP. I think the calibration frames are good; they seem to produce very good results on the splits. And I've culled the hell out of the raw subs. I've probably tossed at least 200 hours of data (hopefully that's the bathwater and not the breech baby).

|


I wonder if you are somehow running into fp32 floating-point precision issues when stacking the large dataset, depending on the underlying algorithmic implementation in either APP or PI: essentially, a full "multiply-add" across the gigantic number of subs before normalization (the divide) overflowing something in a way that doesn't happen with a smaller set of subs.
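One way to get a feel for fp32 accumulation error is to emulate float32 rounding in Python. This is a sketch, not a model of how APP or PI actually accumulate (neither implementation is described here). Note that true overflow is not a realistic concern at these magnitudes: float32 tops out around 3.4e38, while a sum of a few thousand normalized pixel values stays in the thousands at most. The sketch therefore looks at rounding instead: it rounds every intermediate result to float32 via the standard `struct` module, then compares a naive running sum of 1546 identical pixel values against Kahan (compensated) summation and an exact reference from `math.fsum`. The pixel value and helper names are hypothetical.

```python
import math
import struct

def f32(x: float) -> float:
    """Round a Python float (a float64) to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def naive_sum_f32(values):
    """Running sum, rounding every intermediate result to float32."""
    total = 0.0
    for v in values:
        total = f32(total + v)
    return total

def kahan_sum_f32(values):
    """Kahan (compensated) summation, also rounding every step to float32."""
    total = 0.0
    comp = 0.0  # low-order bits lost in the previous addition
    for v in values:
        y = f32(v - comp)
        t = f32(total + y)
        comp = f32(f32(t - total) - y)
        total = t
    return total

# 1546 subs all contributing the same normalized pixel value (illustrative only).
pixels = [f32(0.1)] * 1546
exact = math.fsum(pixels)  # accurate reference sum of the float32 inputs

for name, fn in (("naive", naive_sum_f32), ("kahan", kahan_sum_f32)):
    total = fn(pixels)
    print(f"{name}: relative error {abs(total - exact) / exact:.1e}")
```

In this toy case the naive float32 sum stays well under one part in ten thousand of the exact value (the standard worst-case bound for 1546 terms), and the compensated sum is tighter still. That suggests plain accumulation rounding alone is an unlikely source of a multi-dB loss, though it does not rule out other numerical issues in the real implementations.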

It could also potentially be related to SIMD optimizations in the underlying implementation, although from what I understand APP uses straight Java, while PI uses a mix of JavaScript and C++. Have you tried another program like Siril to see if it is any different?

Ani

|


Ani Shastry:

Have you tried another program like Siril to see if it is any different?

Hi Tim, if you do try to stack it in Siril, I strongly suggest doing it via Sirilic, as it makes multiple channels and multiple sessions easier to handle.

|


Ani Shastry:

Have you tried another program like Siril to see if it is any different?

I don't have Siril and don't know anything about it. It might be worth a shot down the road, but with travel coming up for most of May, I don't think I could get to it very soon.

|


This is a very interesting and meaningful topic. I think what Wei-Hao Wang mentioned is probably the main reason: gradient differences in data spanning multiple days, or even years, may lead to inaccuracies in the weighting and pixel rejection calculations.

Imagine someone's flat frames are very inaccurate, resulting in calibrated images that are darker on the left and brighter on the right. After alignment, it might look like this: before the meridian flip, the images (Group A) appear darker on the left and brighter on the right, while after the meridian flip (Group B), the opposite is true. If Group A has more images, the left side of the Group B images might be rejected as outliers for being too bright, or it could cause errors in the weight calculations.

I think you could try performing the image integration manually. ImageIntegration writes its status to the console, so you can see whether there are significant differences in the weights of individual frames and whether a large share of pixels (e.g., more than 1%) is being rejected during pixel rejection.

I've encountered similar issues before. At the time, I noticed that weighting algorithms with "PSF" in their names didn't seem to handle data from different equipment very well. However, I didn't run into this problem when processing data from a single set of equipment, possibly because my exposure times and frame counts weren't large enough.
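A toy, one-pixel version of that scenario, assuming a simple iterative sigma clip around the median (ImageIntegration and APP offer several rejection algorithms; this is not the implementation of either). The flat error puts this pixel at about 100 in the 140 Group A subs and about 106 in the 60 Group B subs, with a small repeating noise pattern instead of random noise so the result is reproducible. The counts, offset, and `kappa` values are all hypothetical.

```python
from statistics import median, pstdev
from typing import List

def sigma_clip_mask(values: List[float], kappa: float = 2.0, max_iter: int = 10) -> List[bool]:
    """Iterative sigma clipping: True where a value survives rejection.

    Each pass measures the median and population standard deviation of the
    currently kept values, then re-tests every value against median +/- kappa*sigma.
    """
    keep = [True] * len(values)
    for _ in range(max_iter):
        kept = [v for v, k in zip(values, keep) if k]
        center, sigma = median(kept), pstdev(kept)
        new_keep = [abs(v - center) <= kappa * sigma for v in values]
        if new_keep == keep or not any(new_keep):
            break
        keep = new_keep
    return keep

noise = [-1.0, 0.0, 1.0]  # deterministic stand-in for per-sub noise
group_a = [100.0 + noise[i % 3] for i in range(140)]  # majority orientation
group_b = [106.0 + noise[i % 3] for i in range(60)]   # minority, brighter on this side
values = group_a + group_b

for kappa in (2.0, 3.0):
    keep = sigma_clip_mask(values, kappa)
    rejected_a = keep[:140].count(False) / 140
    rejected_b = keep[140:].count(False) / 60
    kept = [v for v, k in zip(values, keep) if k]
    print(f"kappa={kappa}: rejected A {rejected_a:.0%}, B {rejected_b:.0%}, "
          f"kept mean {sum(kept) / len(kept):.2f}")
```

With kappa = 2 this pixel loses all 60 Group B subs; with kappa = 3 nothing is rejected and the 6-unit offset simply averages into the result. Either way the stack is compromised by the mismatch, which is why normalizing the groups against each other before rejection matters.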

Additionally, I’d like to ask: when you perform batch stacking, are the alignment references the same for the images within each batch? In other words, when you stack the 8 masters for the second time, do they need to be aligned again?

(Translated by AI.)

|


Divide and conquer is the best strategy for handling large data sets. I'm pretty sure the stacking algorithm in PI is robust enough to handle your gazillion-hour data set too.

|


Crazy amount of integration! How long did the integration run for these 5800 frames?

And did I understand you correctly that you used only one set of flats? That would not work well, I'd think.

Arny

|


One possibility: some sessions as a whole are sub-par, even after per-session culling, which could be for a variety of reasons.

When I use the Transmission graph in NSG, the low-quality subs typically all come from the same session(s). The problem with doing this for a large number of subs (I've gone up to 500, nothing like yours) is the time taken by PI/NSG.

A faster first pass is using Sirilic (with Siril as the backend). It's extremely simple to stack multiple nights. More importantly here, it is much, much faster, and you can set it to stack the subs in each session separately before doing the master stack. In one run, you get the session-wise sub-stacks as well as the master stack.

This has helped me figure out which sessions had lousy SNR or star counts (confirmed experimentally with NSG), uncorrectable dust motes, reflections, and whatnot. I generally chuck out that entire session and redo it.

Or I just restack the other sub-stacks, which seem fine.

Either way, the final stack comes out better, and it's a much faster diagnosis than relying on PI alone.
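The per-session triage described above boils down to comparing each session's sub-stack against the others. A minimal sketch, assuming you already have one quality number per session sub-stack (for example an SNR estimate or star count from whichever tool you use); the function name, the scores, and the 0.8 threshold are hypothetical. Flagged sessions are candidates for a closer look, not automatic discards.

```python
from statistics import median
from typing import Dict, List

def flag_weak_sessions(session_scores: Dict[str, float], threshold: float = 0.8) -> List[str]:
    """Return sessions scoring below `threshold` times the median session score."""
    reference = median(session_scores.values())
    return sorted(name for name, score in session_scores.items()
                  if score < threshold * reference)

# Made-up per-session quality scores (e.g. SNR of each session's sub-stack).
scores = {"session_1": 41.0, "session_2": 44.5, "session_3": 29.0, "session_4": 43.0}
print(flag_weak_sessions(scores))  # → ['session_3']
```

Comparing against the median session rather than the best one keeps a single exceptional night from making every other session look bad.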

|


我可是汞:

Additionally, I’d like to ask: when you perform batch stacking, are the alignment references the same for the images within each batch? In other words, when you stack the 8 masters for the second time, do they need to be aligned again?

Yes, the 8 masters had to be aligned again. I looked closely at the stars after integrating the 8 masters, and they looked better than they did in the full stack, so I don't think that's an issue.

|


This is a really helpful thread, Timothy! Curious if anyone thinks NSG would have helped here?

Kevin

|


Arny:

Crazy amount of integration! How long did the integration run for these 5800 frames?

About five days in both cases.

Arny:

And did I understand you correctly that you used only one set of flats? That would not work well, I'd think.

No, I had three sets of flats: one for the 2024 data, one for January 2025, and another for the rest. I don't think calibration is the issue; the 8 masters generated from the Ha data subsets look very clean. I typically only take flats about once every six months.

|


Kevin Morefield:

This is a really helpful thread Timothy! Curious if anyone thinks NSG would have helped here?

Kevin

That's a possibility. I'll add it to the list of things to try when I get time. It's not really a simple matter to tweak a setting or run some other pre-pre-process and then try a full integration again; it just takes so darn long to run. I can isolate various experimental iterations to, say, the B channel, which only has 500 subs and takes less time. But then I can't really be confident about what will happen when I try it on the Ha or O3 channels, which each take a day or two to process.

In the long run, though, it will be worth the effort to wrestle this to the ground. As I mentioned, I have two projects of similar scope in the hopper right now, and I hope to have many more large projects like this in the coming years. There are only so many objects to shoot in the northern night sky, and one of the few options open to me to distinguish shots of commonly targeted objects is to go for extreme integration time. I could process the disappointing masters I already have and create a nice image, but after spending the better part of a year on this, I'd like to wring out the maximum quality the data can deliver.

|


Timothy Martin:

I typically only take flats about once every six months.

That is interesting. I take them every night... Do you not touch your setup or make any changes for 6 months, and do you not get dust inside in between?

Arny

|


Ani Shastry:

I wonder if you are somehow running into fp32 floating point precision issues when stacking the large dataset

Another distinct possibility. Many floating-point operations resolve to Taylor (or other) series, which can involve dozens or even hundreds of sub-calculations just to do one main calculation. That can get very dicey. In grad school in the early 80s, I had a protracted argument with my numerical analysis prof about calculating error bounds for these types of computations. My contention was that the error-bound calculation itself contained error and, depending on its complexity, those errors could accumulate in the error-bound calculation and render it meaningless. I was finally able to prove this contention using the school's mainframe, and Dr. Summers was convinced, so much so that he wanted me to do my thesis on the subject (I didn't; I had already been working on my thesis for a year).

|


Arny:

Timothy Martin:

I typically only take flats about once every six months.

That is interesting. I take them every night...

Do you not touch your setup or make any changes for 6 months, and do you not get dust inside in between?

Arny

My setups are remote, so I only physically touch them when I'm on site, about once a year. The image trains have fared very well, in that I rarely see new dust motes that create the need for new flats. When it comes to rotation, I'm off the reservation. I shoot with all kinds of rotations all the time and don't take new flats when I change the rotation angle. I've never seen a problem with that; any dust particles that show up in the data rotate with the image train. But interestingly, that's not an issue here: all three sets of flats were taken at the same rotation angle as the target I'm working on. If I had to take flats every night, I simply wouldn't do this at all. I'd sooner have a colonoscopy without anesthesia.

|


For any given sample set, you depend on the sum of the samples taken at particular times to produce the optimum for that set... Bad seeing accumulates as more and more subs are added to your selection set. There will be a 'sweet spot' beyond which more data doesn't improve the set but just contributes to its averaging.
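The "sweet spot" point can be made concrete for the noise side of things. Under the simplifying assumptions of independent Gaussian noise and equal signal in every sub, a plain average gets noisier once enough poor subs are mixed in, whereas inverse-variance weighting never does worse than the good subs alone. This sketch ignores sharpness, which is the other half of the bad-seeing argument, and the sub counts and noise levels are illustrative.

```python
import math

def equal_weight_noise(groups):
    """Noise of a plain average. groups: list of (sub count, per-sub noise sigma)."""
    n_total = sum(n for n, _ in groups)
    return math.sqrt(sum(n * s * s for n, s in groups)) / n_total

def inverse_variance_noise(groups):
    """Noise of an average weighted by 1/sigma^2 per sub."""
    return 1.0 / math.sqrt(sum(n / (s * s) for n, s in groups))

good = (10, 1.0)  # 10 subs with unit noise
poor = (10, 3.0)  # 10 subs with three times the noise
print(f"good subs only:   {equal_weight_noise([good]):.3f}")
print(f"equal weights:    {equal_weight_noise([good, poor]):.3f}")
print(f"inverse variance: {inverse_variance_noise([good, poor]):.3f}")
```

Here the plain average of all 20 subs is noisier than the 10 good subs alone, while the weighted combination is slightly better than either, which is the argument for letting weighting (or culling) handle the poor data rather than averaging it in.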

|


Timothy Martin:

Arny:

Timothy Martin:

I typically only take flats about once every six months.

That is interesting. I take them every night...

Do you not touch your setup or make any changes for 6 months, and do you not get dust inside in between?

Arny

My setups are remote, so I only physically touch them when I'm on site, about once a year. The image trains have fared very well, in that I rarely see new dust motes that create the need for new flats. When it comes to rotation, I'm off the reservation. I shoot with all kinds of rotations all the time and don't take new flats when I change the rotation angle. I've never seen a problem with that; any dust particles that show up in the data rotate with the image train. But interestingly, that's not an issue here: all three sets of flats were taken at the same rotation angle as the target I'm working on. If I had to take flats every night, I simply wouldn't do this at all. I'd sooner have a colonoscopy without anesthesia.

I didn't use rotation-matched flats for years. But after the advent of newer, more sensitive, low-noise cameras, and going deep on faint objects, I started seeing odd circular gradients. I ran flats at 0 and 180 degrees, subtracted one from the other, and voila: the same circle appeared. After testing rotation-matched flats, the circular gradient was resolved. I believe this is coming mostly from the central obstruction on the CDK17, but I'm testing it on my refractor too.

The solution is flats for each object, not each night, thankfully.

Kevin
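The 0/180-degree comparison is easy to script once the two master flats are loaded. A minimal sketch, assuming both flats are equal-sized 2D lists of floats in the same sensor orientation (loading FITS files is not shown, and the function names are hypothetical). Each flat is divided by its median first so that a difference in overall illumination doesn't swamp the comparison; the synthetic example plants a faint 0.5% ring in one flat to show it surfacing in the difference.

```python
import math
from statistics import median
from typing import List

Image = List[List[float]]

def normalize(flat: Image) -> Image:
    """Divide a flat by its median so flats at different light levels compare fairly."""
    m = median(v for row in flat for v in row)
    return [[v / m for v in row] for row in flat]

def flat_difference(flat_a: Image, flat_b: Image) -> Image:
    """Pixel-wise difference of two median-normalized flats with the same geometry."""
    a, b = normalize(flat_a), normalize(flat_b)
    return [[va - vb for va, vb in zip(ra, rb)] for ra, rb in zip(a, b)]

# Synthetic 7x7 example: the "180-degree" flat carries a faint 0.5% ring.
size, c = 7, 3

def on_ring(y: int, x: int) -> bool:
    """Hypothetical ring between 2.5 and 3.5 pixels from the center."""
    return 2.5 <= math.hypot(y - c, x - c) < 3.5

flat_0 = [[1000.0] * size for _ in range(size)]
flat_180 = [[2000.0 * (1.005 if on_ring(y, x) else 1.0) for x in range(size)]
            for y in range(size)]
diff = flat_difference(flat_0, flat_180)
for row in diff:
    print(" ".join(f"{v:+.3f}" for v in row))
```

In real flats the ring would sit under pixel noise, so in practice you would probably bin or smooth the difference image before inspecting it; that step is left out here.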

|


Kevin Morefield:

I ran flats at 0 and 180 degrees, subtracted one from the other, and voila: the same circle appeared. After testing rotation-matched flats, the circular gradient was resolved.

I haven't seen this occur yet, but maybe it's happening and I'm just missing it. This is definitely an exercise worth doing on a cloudy night.

|


Kevin Morefield:

I didn't use rotation-matched flats for years. But after the advent of newer, more sensitive, low-noise cameras, and going deep on faint objects, I started seeing odd circular gradients. I ran flats at 0 and 180 degrees, subtracted one from the other, and voila: the same circle appeared. After testing rotation-matched flats, the circular gradient was resolved. I believe this is coming mostly from the central obstruction on the CDK17, but I'm testing it on my refractor too.

The solution is flats for each object, not each night, thankfully.

Kevin

That's interesting, Kevin. I only retake flats maybe once a year, but I also don't have a rotator, so maybe that's why I don't see the problem. My system appears to be perfectly dust-tight, so the few motes I have are stable over time.

John

|


Timothy Martin:

Kevin Morefield:

I ran flats at 0 and 180 degrees, subtracted one from the other, and voila: the same circle appeared. After testing rotation-matched flats, the circular gradient was resolved.

I haven't seen this occur yet, but maybe it's happening and I'm just missing it. This is definitely an exercise worth doing on a cloudy night.

Please try it out and let me know what you see. I'm pretty sure that any consistent difference in the flats would produce an equal (and opposite) consistent difference in the lights. I didn't notice it until I was looking at background structures that differed by a single ADU or less.
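To put "a single ADU or less" in perspective: because lights are divided by the flat, a flat that is off by a small fraction leaves a residual of roughly minus that fraction times the background, which is the "equal (and opposite)" effect described above. A worked sketch with hypothetical numbers (a 1000 ADU sky background and flat mismatches of 0.1% and 0.05%); `flat_residual_adu` is an illustrative helper, not part of any processing tool.

```python
def flat_residual_adu(background_adu: float, flat_error: float) -> float:
    """Error left in a flat-calibrated background when the flat is off by a fraction.

    Calibration divides by the flat, so a flat that is (1 + flat_error) times too
    bright turns a true background B into B / (1 + flat_error). The residual is
    B * (1 / (1 + flat_error) - 1), which is roughly -B * flat_error.
    """
    return background_adu * (1.0 / (1.0 + flat_error) - 1.0)

# Hypothetical numbers: 1000 ADU sky background, flats mismatched by 0.1% and 0.05%.
for err in (0.001, 0.0005):
    print(f"flat error {err:.2%}: residual {flat_residual_adu(1000.0, err):+.3f} ADU")
```

So against a bright sky, a flat mismatch of only about a tenth of a percent already lands in the one-ADU range.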
