Chris:

Thanks for this post...been having similar issues so I'll give some of the recommended changes in here a try

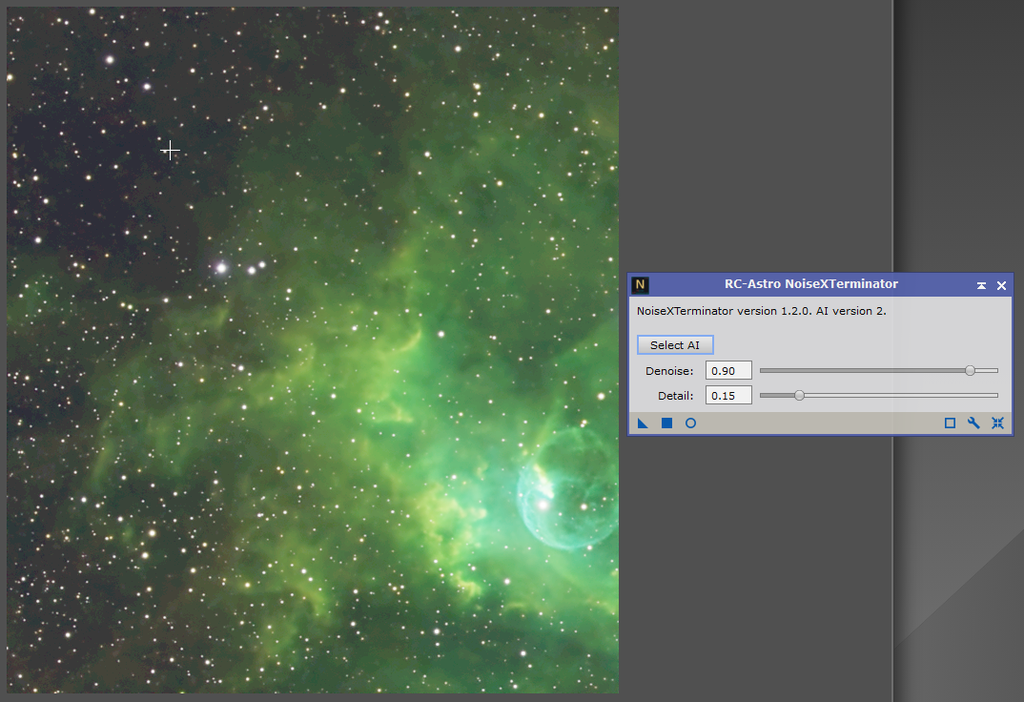

My strongly held opinion here is that this is WAY, WAY too much noise reduction.

I mentioned the concept of "obliteration" earlier in the thread. This right here is noise obliteration. In fact, it's OBLITERATION!

I know that there are shifting aesthetic preferences in any endeavor like astrophotography. That said, I think there IS a proper way to handle any signal. I think there is a common misconception that noise and signal are...well, for lack of a better term, "orthogonal" to each other (orthogonality between distinct signals is a different thing; I'm specifically talking about a given signal and its own noise here). By this I mean that people assume noise can be eliminated from a signal without unduly affecting the signal.

The reality is that the signal IS NOISY. The noise is not orthogonal to the signal...it is an intrinsic aspect of uncertainty IN the signal. An ASPECT OF the signal. It cannot be "eliminated"...or as I've been putting it, OBLITERATED.

Not, at least, without having a detrimental effect on the signal itself.

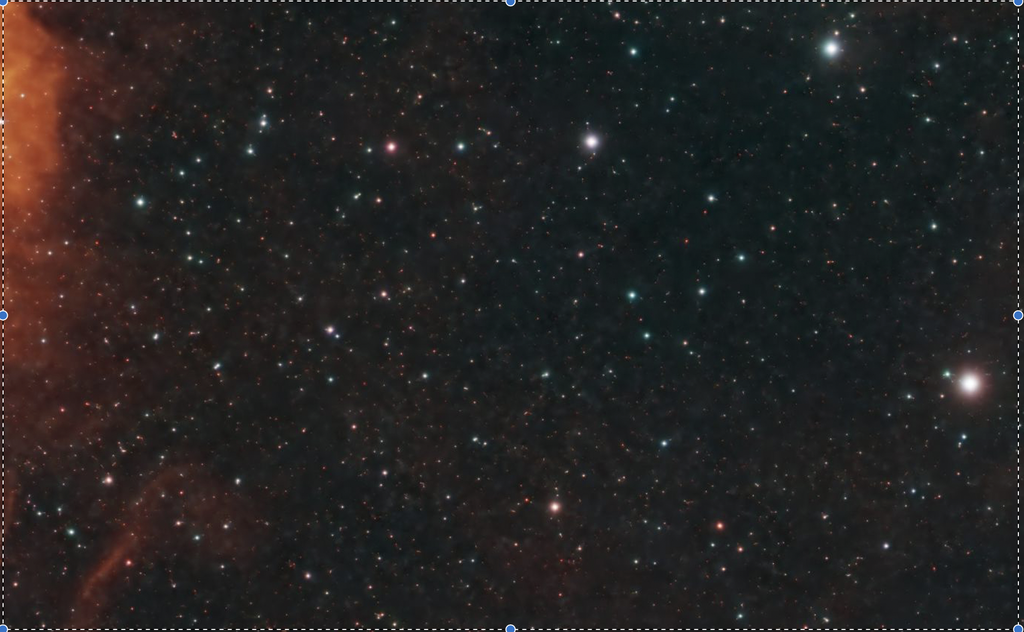

The mottling, the orange peel, the otherwise undesired effect in this image is the result of attempting to obliterate, or completely remove, noise from the signal.

Approach the problem differently. REDUCE the noise. Don't obliterate it. The noise is an intrinsic part of, an inherent aspect of, the signal. Obliteration decimates the quality of the signal, which is not really a desirable outcome. Instead of obliteration, aim to mitigate the impact of noise while affecting the quality of the signal as little as possible. THE SIGNAL IS NOISY! That's a fact. You can manage the noise, adjust its nature, so that it becomes less apparent, while having a minimal effect on the information you can SEE and OBSERVE within the signal.

I never try to eliminate the noise, or the "graininess", of my images. The noise is intrinsic, inherent, and a fundamental part of the image. Instead, I try to minimize its visual impact. This comes via reduction. You can think of noise as an amplitude...one pixel might be brighter than it "should" be, the next darker than it "should" be, the next after that pretty close to what it "should" be. We don't, and simply cannot, actually KNOW what each pixel really "should" be! This unknowability, or what we call "uncertainty" in the signal, IS noise! By changing the noise, we are affecting the accuracy of our signals...which are measurements of some amount of light (the signal) at some point in the sky. Even if we just reduce the amplitude of the noise, we are still in fact affecting the signal...you cannot affect noise without affecting signal. You always affect both at the same time. But reducing the amplitude can mitigate the impact noise has on our ability to see the pretty picture within.

Eliminating (i.e. obliterating) the noise effectively flattens any meaningful differences between adjacent pixels, and that WILL ALWAYS have an effect on the signal...usually a detrimental one. To preserve the signal, you have to reduce the noise to a degree, but not obliterate it.
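To put some rough numbers on that flattening, here is a minimal Python sketch. It is not what any particular noise reduction tool actually does: the `box_blur` helper, the made-up `row` of pixel values, and the choice of a plain box average are all my own assumptions for illustration. The strip has a noisy background near 10 and one real feature at index 5, and the wider the averaging window, the more that real feature gets dragged down toward its neighbors along with the noise.

```python
def box_blur(row, radius):
    """Replace each pixel with the mean of its neighbours within `radius`.

    The window is clipped at the ends of the row. radius=0 leaves the
    values unchanged (apart from converting them to floats).
    """
    out = []
    for i in range(len(row)):
        lo = max(0, i - radius)
        hi = min(len(row), i + radius + 1)
        window = row[lo:hi]
        out.append(sum(window) / len(window))
    return out


# Hypothetical 1-D strip of pixels: noisy background near 10,
# with one real feature (say, a faint star) at index 5.
row = [10, 12, 9, 11, 10, 30, 10, 9, 12, 11, 10]

for radius in (0, 1, 3):
    smoothed = box_blur(row, radius)
    spread = max(smoothed) - min(smoothed)
    print(f"radius={radius}: feature pixel={smoothed[5]:.2f}, "
          f"max-min spread={spread:.2f}")
```

The pixel-to-pixel spread shrinks as the radius grows, which is the "less noise" part, but the feature pixel shrinks right along with it. The filter has no way to know which differences were noise and which were signal.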

Say we have three adjacent pixels, drawn as bars, something like this:

    ---
    |
    |
    |         ---
    |         |
    |         |
    |    ---  |
    |    |    |
    |    |    |

Obliteration would likely produce something like this:

    ---
    |    ---  ---
    |    |    |
    |    |    |

When in fact, what we really wanted was something more like this:

    ---
    |         ---
    |    ---  |
    |    |    |
    |    |    |
    |    |    |
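The same idea as the bars above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the three values in `bars` are hypothetical pixel heights in the spirit of the diagrams, and `pull_toward_mean` with its `strength` parameter is a made-up helper, not a real noise reduction algorithm. A strength of 1.0 is obliteration (everything flattened to the same value), while something like 0.5 is reduction (the differences shrink, but they are still there).

```python
def pull_toward_mean(pixels, strength):
    """Blend each pixel toward the mean of the group.

    strength=0.0 leaves the pixels alone; strength=1.0 flattens them
    completely. Values in between shrink the differences without
    erasing them.
    """
    mean = sum(pixels) / len(pixels)
    return [p + strength * (mean - p) for p in pixels]


bars = [9, 3, 6]  # hypothetical pixel values: too bright, too dark, about right

obliterated = pull_toward_mean(bars, 1.0)  # every difference is gone
reduced = pull_toward_mean(bars, 0.5)      # differences halved, ordering kept

print("original:   ", bars)
print("obliterated:", obliterated)
print("reduced:    ", reduced)
```

With the reduced version, the brightest pixel is still the brightest and the darkest is still the darkest; the swings between them are just smaller.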

Reduction. Manage the noise, finesse it, to bring out the pretty picture without destroying it. Don't obliterate. ;)