I'm really new to astrophotography. I had no idea structures changed or disappeared with longer integrations.

@Oscar (messierman3000) I've had really good luck with the original Topaz denoise on my images, but it should only be used on starless images. I'll run NXT at 0.4-0.6 with the detail slider at 0.15 in a linear state, after I've run MMT to target chrominance noise (NXT seems to have an easier time with salt-and-pepper noise). Then stretch however you like. For Topaz, I recommend leaving the "recover original detail" slider maxed and the sharpen setting at 0. Typically, I'll use the severe noise setting and set the noise reduction to 1 (regardless of whether or not the noise is actually "severe"). This seems to have little to no impact on structure and targets only background (BG) noise. The other presets, like standard and low light, seem to modify, enhance, or add structure. Then I'll continue my process in PI: stretch a little further, do color work, and determine whether another pass of NXT is necessary. Hope this helps!

@Kay Ogetay I appreciate the response. It seems like you continue to miss the mark. I'll outline this below.

Kay Ogetay:

First of all, I think there is still confusion here. Switching between Astrobin images and seeing some minor parts that are slightly different on a very faint object has absolutely no impact on any of our lives. Let's admit that. Nobody will say 'oh shoot, that 30px long filament seems to be curved in by 10 degrees more, I'm not shooting it!'. The majority of the structure remains the same.

What do you think is an acceptable level of "closeness" between two images, such that you can dismiss the evidence shown here by myself and others? I must reiterate and ask you to consider this again. In the case of the O3 arc, the data presented in the scientific papers has been shown to be patently false, with integration times, as mentioned above, of ~8x greater. Multiple deep integrations have been done since the release, and they agree among themselves that the O3 arc presented in the scientific paper is a false structure. You said yourself that scientific papers are for the data... well, the data has been shown beyond reasonable doubt to be false. You are now sidestepping the point. I can't state it any more clearly. In the case of the Goblet of Fire, the data has major discrepancies as well. No two images agree with each other on the major structural outline of the object. We aren't talking about small differences here.

Kay Ogetay:

The quite opposite actually. Not pointing anyone here, but there was an IOTD I took as a reference, because of a simple aspect in the image -a blue brightness between the dark nebulosity-- and I took image of that region for 20+ hours. Turns out there is no such blue there!

You do not disprove the point here, you just add hot air. I don't follow what you're getting at in response to what I said. Again, it seems you are missing the point here.

Regards,

Joe
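
For a sense of scale on the "~8x greater" integration figure: a minimal sketch, assuming the noise is uncorrelated between subframes (shot-noise-like, sky-limited), in which case SNR grows with the square root of total integration time. The function name `snr_gain` is hypothetical and used only for illustration; real data with gradients, read noise, or differing sky conditions will not follow this exactly.

```python
import math

def snr_gain(integration_ratio: float) -> float:
    """Relative SNR improvement for a given ratio of total integration times.

    Assumption: noise is uncorrelated between subframes, so SNR ~ sqrt(time).
    """
    if integration_ratio <= 0:
        raise ValueError("integration_ratio must be positive")
    return math.sqrt(integration_ratio)

# ~8x the integration time, as quoted in the post above
gain_8x = snr_gain(8.0)
print(f"8x integration -> about {gain_8x:.2f}x SNR")
```

Under that assumption, 8x the integration time gives a bit under 3x the SNR, which is the sort of margin the deeper integrations mentioned above would have over the original data.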

Kay Ogetay:

Joseph Biscoe IV:

This topic may have started with good intent to learn, but some haters quickly saw the opportunity to take the discussion to the level of defamation. When some people here seem to make logical statements but insult 'all of us' behind closed doors, we can't have a meaningful discussion with them here. They keep diverting the topic to suit their intent. We know their intention is not to learn, but public bashing. But as you make kind comments and raise some questions, I'm happy to answer them. Hope this further clarifies my points.

First of all, I think there is still confusion here. Switching between Astrobin images and seeing some minor parts that are slightly different on a very faint object has absolutely no impact on any of our lives. Let's admit that. Nobody will say 'oh shoot, that 30px long filament seems to be curved in by 10 degrees more, I'm not shooting it!'. The majority of the structure remains the same. It is not deceptive to me, because what I'll remember from this object does not change from one image to another. A very faint detail seems diffused, so what? That data difference is maybe on linear data only 1-2 value difference. That is a very natural and expected outcome.

Now this might matter to you of course! But in such case, you should be aware that this is a very faint object with heavy noise reduction. So you should expect such difference anyway.

One can always make a better version and we'll appreciate their work. It is as simple as that. I personally know how Bray processes those images and there is absolutely no 'human interference' (like brushing etc.) that can cause an intentional change. Every difference is solely due to noise reduction on a very low signal.

Note that if two different people with two different eyesight would look at the raw image, they'd see such differences too! So it's not even about the noise reduction. It is the lack of data, the contrast. We are just not realizing this since we can't compare what our eyes see. And discussing over these tiny details, has no merit to me --especially when it doesn't even scientifically matter, let alone for aesthetic purposes.

If you are taking these as a reference for your future work, one can always figure out a solution for this: If the target is too faint and has a heavy noise reduction for aesthetic reasons, then I know what I'll face if I shoot this image. There might be slight differences. If you perceive this as 'not slight', then you act accordingly.

There is a good deal of "us" that like to reveal structure detail in our images and look to astrobin not as a scientific release, but as a planning software. You miss the mark here.

The quite opposite actually. Not pointing anyone here, but there was an IOTD I took as a reference, because of a simple aspect in the image -a blue brightness between the dark nebulosity-- and I took image of that region for 20+ hours. Turns out there is no such blue there! And no other image shows such blue whatsoever. I ended up with something that I did not want. But I did not get mad. I learned something, still got a nice picture. And I also know we take a risk when we are on the edge of discovery. Sometimes we work on a theory over years and at the end we realize it doesn't work. If you start the journey with the assumption of a good result, then you are not the right adventurer for this kind of journey. Many become experimentalists because of this and avoid theoretical works for example.

Maybe I'll post these findings when I post the image. Even in science --intentional or unintentional-- such cases occur. We figure it out in the community by replicating and posting our results. Pages-long discussions are not the solution.

If the concern is scientific, then you don't compare the scientific paper to the Astrobin post (as the Astrobin post is intended for aesthetic purposes, not scientific purposes). If there is actually a 'significant difference' in the raw data of the scientific paper, then you leave a comment on the paper. But to my expertise in this field, even these differences do not matter. I know that no one will take you seriously if you try to do that, simply because it doesn't matter. Pixel-by-pixel structure doesn't have a significance --unless you derive information from that structure-- say, the magnetic field around the object is constructed in such a way because the filaments show the field lines --which is not the case here whatsoever. And you always know that there is a 'margin of error' in every measurement. If you don't acknowledge that and conclude with 'significant difference', then the issue isn't the OP's data.

So we can discuss this forever, where to draw the red line between fake and real. To me, these discussions usually restrict this community from being more creative which is very sad to me because we all like experimenting with our data. I'm pretty sure many of you who read this thread had the hesitation to share something really interesting because of the worry of such criticisms. I did and I still do. Such harsh comments only harm the diversity we could possibly have. We don't have to like it at all. Different styles can just have a small audience. Why neglect them? Why suppress them? So in terms of styles, yes we can simply move on. They will naturally fail anyway. If it doesn't fail but gets more attention, maybe it is just because we are not the audience anymore.

Jazz music is great, but it doesn't have the same audience anymore. But that doesn't make Rock or Jazz more valuable. It is great to have both.

You can look at my image gallery. I have no pretensions to be close to generating the type of images we are discussing here. But basic data integrity matters to me. Changes in direction of massive structures go well beyond noise reduction tools or processing decisions. I have been an AB member since 2017. Data integrity matters to me. That is the only dog I have in this fight. Pointing out these types of inconsistencies is not defamation. These are real issues. We just need to decide if this is OK or not. If you think this is ethically OK without putting in a disclaimer that it is an artist's impression, simply put it in writing in the manifesto of the IOTD. Then we all know what we are dealing with and can go on and choose to be members or not.

First of all, I think there is still confusion here. Switching between Astrobin images and seeing some minor parts that are slightly different on a very faint object has absolutely no impact on any of our lives. Let's admit that. Nobody will say 'oh shoot, that 30px long filament seems to be curved in by 10 degrees more, I'm not shooting it!'. The majority of the structure remains the same. It is not deceptive to me, because what I'll remember from this object does not change from one image to another. A very faint detail seems diffused, so what? That data difference is maybe on linear data only 1-2 value difference. That is a very natural and expected outcome.

Now this might matter to you of course! But in such case, you should be aware that this is a very faint object with heavy noise reduction. So you should expect such difference anyway.

No idea what noise reduction methods you're thinking of, but the ones I use don't make structures magically change shape, placement or texture... all by quite a significant amount, not just by a couple of pixels like you suggest.

Joseph Biscoe IV:

@Kay Ogetay I appreciate the response. It seems like you continue to miss the mark. I'll outline this below.

First of all, I think there is still confusion here. Switching between Astrobin images and seeing some minor parts that are slightly different on a very faint object has absolutely no impact on any of our lives. Let's admit that. Nobody will say 'oh shoot, that 30px long filament seems to be curved in by 10 degrees more, I'm not shooting it!'. The majority of the structure remains the same.

What do you think is an acceptable level of "closeness" between two images, such that you can dismiss the evidence shown here by myself and others? I must reiterate and ask you to consider this again. In the case of the O3 arc, the data presented in the scientific papers has been shown to be patently false, with integration times, as mentioned above, of ~8x greater. Multiple deep integrations have been done since the release, and they agree among themselves that the O3 arc presented in the scientific paper is a false structure. You said yourself that scientific papers are for the data... well, the data has been shown beyond reasonable doubt to be false. You are now sidestepping the point. I can't state it any more clearly. In the case of the Goblet of Fire, the data has major discrepancies as well. No two images agree with each other on the major structural outline of the object. We aren't talking about small differences here.

The quite opposite actually. Not pointing anyone here, but there was an IOTD I took as a reference, because of a simple aspect in the image -a blue brightness between the dark nebulosity-- and I took image of that region for 20+ hours. Turns out there is no such blue there!

You do not disprove the point here, you just add hot air. I don't follow with what you're getting at in response to what I said, Again it seems you are missing the point here.

Regards,

Joe

I'm sorry if I looked like I was sidestepping; it was not my intention. I came here to make only two points:

1) There can always be differences in low-signal faint structures, as every measurement has a margin of error, and one should acknowledge this natural result before starting such works --as all scientists are naturally trained to do.

2) We should leave the community free for different styles.

I'm quite busy and split between different works, and I have already spent maybe a month's worth of forum reading here. I do not intend to keep it going, as I have made my comments. I'm sorry if I'm missing any details in the discussion because of this.

In case there is a scientific inaccuracy, as claimed, that is 'significant', what needs to be done is pretty clear. You post your scientific findings in a journal. Every day, in every field, some research is 'corrected' in this way. In fact, that is literally the definition of science by Popper. There is nothing wrong with this. This is progress. One starts it, others improve it. And we appreciate it all. Anyone who is doing scientific research will find this pretty natural. I'm surprised that in this community it is a topic of discussion, as I find this community more scientific than others. Apparently we fail to explain what science is; I take the blame.

But this discussion keeps turning around 'what is significant to me and what is not significant to others'. For science it is not subjective. If you find there is something wrong in a published paper, you get your ideas published. And that's it. This is how scientific progress is made, not through forum discussions (my first point). And if it is about aesthetics, then it is my point two.

But I have to admit that this made me so sad. Because I always wanted to see this community more involved in science. This is practically my job --science and getting people more involved in science-- and my favorite group (the astro community) is having hate discussions over these works instead of being more productive together. It is very sad in an already polarizing world.

With this, I hope some people see what I try to point out. Thanks for the kind discussion, but this is all from me. I may miss new comments as all my notifications are off. But you can reach out to me through email if you'd like to discuss the philosophical or scientific aspect of this. I'm always happy to discuss and maybe even meet to discuss.

Kind regards,

Kay

Arun H:

Kay Ogetay:

Joseph Biscoe IV:

Joseph Biscoe IV (Jbis29)

You can look at my image gallery. I have no pretensions to be close to generating the type of images we are discussing here. But basic data integrity matters to me. Changes in direction of massive structures go well beyond noise reduction tools or processing decisions. I have been an AB member since 2017. Data integrity matters to me. That is the only dog I have in this fight. Pointing out these types of inconsistencies is not defamation. These are real issues. We just need to decide if this is OK or not. If you think this is ethically OK without putting in a disclaimer that it is an artist's impression, simply put it in writing in the manifesto of the IOTD. Then we all know what we are dealing with and can go on and choose to be members or not.

Just saw this after posting. I already know you Arun, I like your images and even follow you. And I know how Bray processes those images and it is not a secret. Many who watched him doing it know what he does. That's how many people here know that it isn't about a brush or intentional fabrication, as many claim. It is a different tool for heavy noise reduction, that's all. It is not up to me to explain it. I understand that for some, this might be too much. And again, this is my point two in the above post.

Maybe, as suggested, we can add a disclaimer to the post, something like 'heavy noise reduction is used in the processing of this faint target, some details might show differences' --as we all accept by default when we buy things from the internet. If this is helpful.

@Kay Ogetay Well, it's interesting to see you go this way.

Kay Ogetay:

But I have to admit that this made me so sad. Because I always wanted to see this community more involved in science. This is practically my job --science and getting people more involved in science-- and my favorite group (the astro community) is having hate discussions over these works instead of being more productive together.

I would argue that this is a scientific discussion. The results of findings in the scientific community and their interpretation are always debated and discussed. The fact that you call this a "hate discussion" is interesting. It is not a hate discussion. No one has expressed any hate towards anyone here. What has been expressed is that data purveyed as scientific and accurate has been found to be false. As a scientist yourself, this sort of discussion should engage you to get to the bottom of this and find the facts. I wonder why you haven't?

Kay Ogetay:

There can always be differences in low signal faint structures as every measurement has a margin of error and one should acknowledge this natural result before starting such works

Again, we aren't talking about "margin of error" level discrepancies. We are talking whole structures. And at that point it is easy to say "whole objects".

Best Regards,

Joe

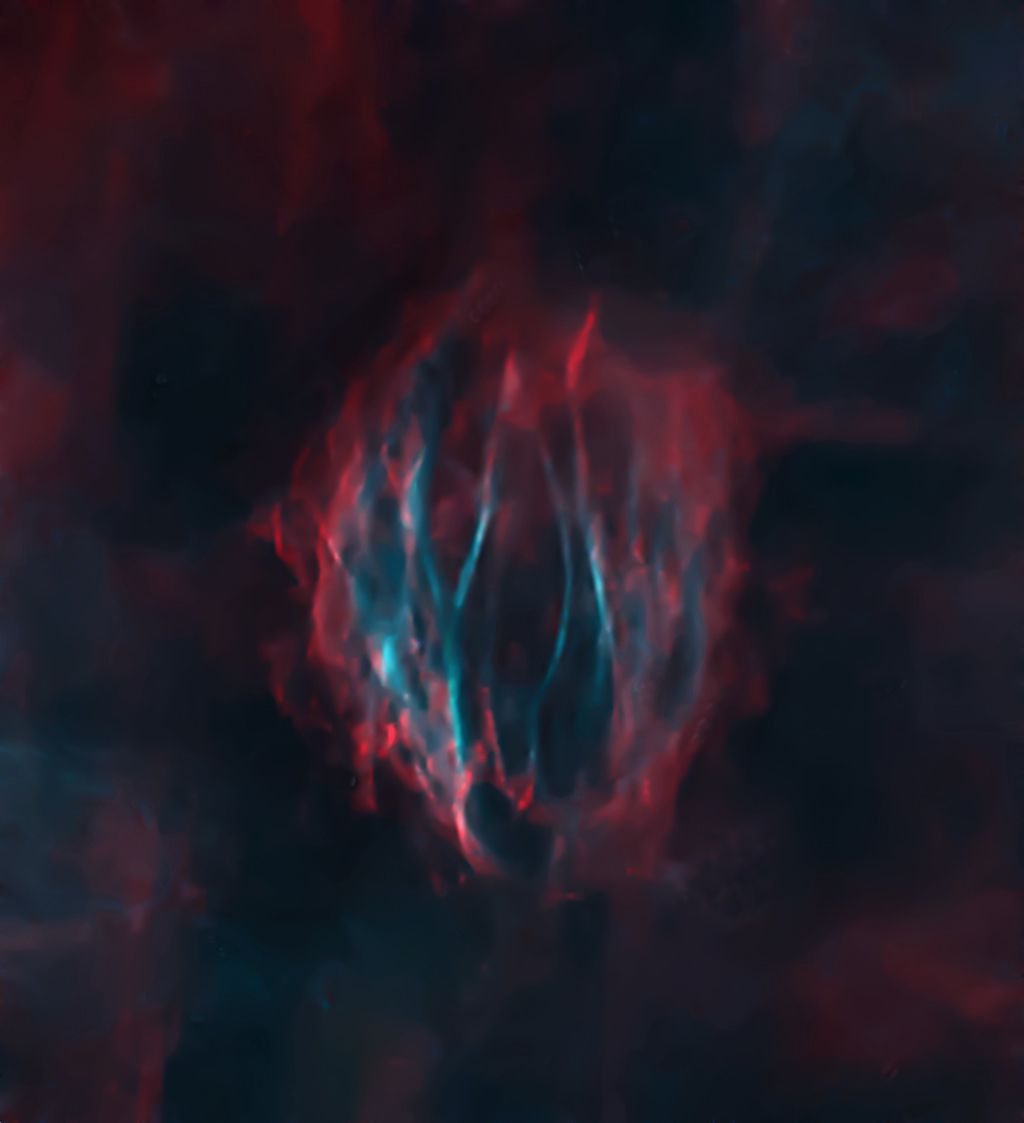

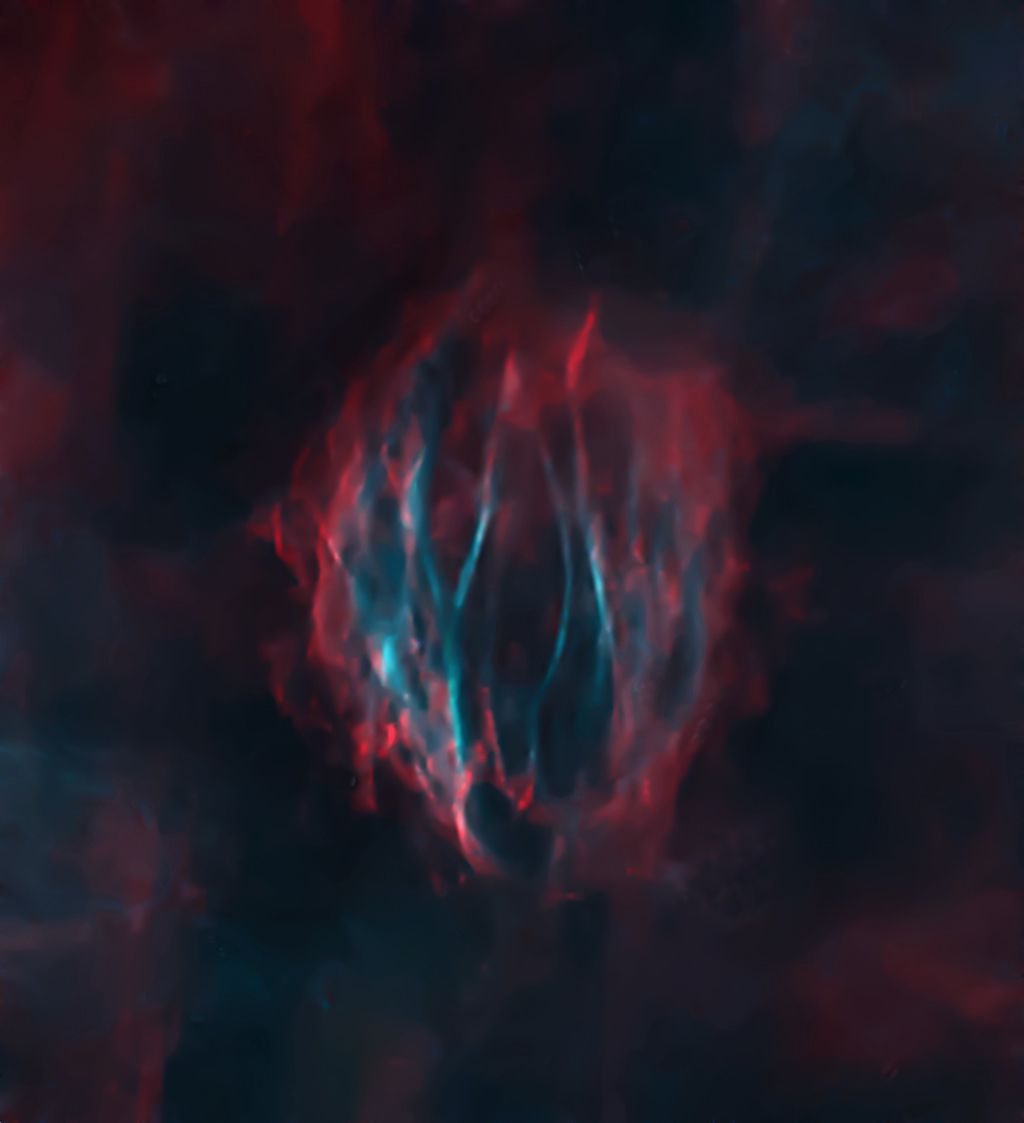

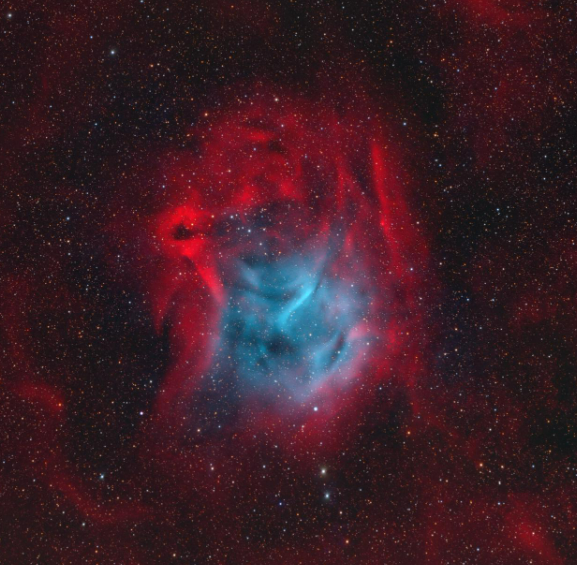

Rather than continue beating a dead horse, I decided to take a different approach to see whether I could take the noisy image that Bray posted and replicate a result that resembled what he accomplished. The original post was centered on how the noise reduction was accomplished, so my intent was to see if I could figure out how to tame the extreme noise in that specific image. The low resolution image of the noisy version wasn't much to work with and I literally only invested 20 minutes into my non-scientific experiment. My workflow was as follows:

1) Did a channel extraction in PixInsight to separate the image into separate RGB channels
2) Applied NoiseXterminator to the 3 separate RGB channels using the default settings
3) Saved each of the 3 channels as a 16-bit TIFF
4) Opened the 3 channels in Photoshop and applied a very heavy application of Photoshop's noise reduction within the Camera Raw filter
5) Combined the RGB channels in PixInsight
6) Used a range mask to isolate the brightest features within the goblet so that a curves adjustment could be used to dial down the blown-out features
7) Did a quick red and cyan color mask to do some color tweaks using a curves adjustment
8) Clone stamped out the bright blown-out objects
9) Brought the finished image back into Photoshop for some final tweaks (contrast, levels, saturation)

This is what I managed spending 20 minutes with an extremely low resolution version of the "noisy" version of the image. So in answer to the original post, it certainly seems plausible to replicate the noise reduction seen with the original data.

As far as any debate as to whether this level of data manipulation is appropriate, I choose to abstain from that discussion. I was more curious as to how the noise reduction might be accomplished and I was surprised at how easy it was. I would love to have a chance to take a crack at the high resolution data set. My imaging skill set is pretty basic, but it would be fun to see what I could do with quality data and investing the processing time worthy of a 200+ hour data set.
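
The channel-by-channel part of the workflow above (steps 1, 2, and 5) can be sketched roughly in plain Python for anyone who wants to experiment. This is only an illustration under loud assumptions: a simple 3x3 median filter stands in for NoiseXterminator and Camera Raw, whose internals are not described in this thread, and the names `split_channels`, `median_filter_3x3`, `combine_channels`, and `denoise_rgb` are hypothetical.

```python
from statistics import median

def split_channels(image):
    """Split rows of (r, g, b) tuples into three 2-D channel grids (step 1)."""
    return [[[px[c] for px in row] for row in image] for c in range(3)]

def median_filter_3x3(channel):
    """Stand-in noise reduction (NOT NoiseXterminator): 3x3 median filter.

    Edge pixels use whatever neighbours exist inside the frame.
    """
    h, w = len(channel), len(channel[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [channel[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(median(window))
        out.append(row)
    return out

def combine_channels(r, g, b):
    """Recombine three channel grids into rows of (r, g, b) tuples (step 5)."""
    return [list(zip(r_row, g_row, b_row)) for r_row, g_row, b_row in zip(r, g, b)]

def denoise_rgb(image):
    """Denoise each channel independently, then recombine."""
    r, g, b = split_channels(image)
    return combine_channels(*(median_filter_3x3(ch) for ch in (r, g, b)))

# A flat 3x3 patch with one "hot" outlier pixel in the middle.
patch = [[(10, 20, 30)] * 3 for _ in range(3)]
patch[1][1] = (255, 0, 30)
cleaned = denoise_rgb(patch)
print(cleaned[1][1])  # (10, 20, 30)
```

The outlier is replaced by the surrounding background value in each channel. The same property cuts both ways on real data: any filter strong enough to flatten noise at this level can also flatten or reshape faint structure of similar amplitude.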

Kay Ogetay:

Arun H:

Kay Ogetay:

Joseph Biscoe IV:

Joseph Biscoe IV (Jbis29)

You can look at my image gallery. I have no pretensions to be close to generating the type of images we are discussing here. But basic data integrity matters to me. Changes in direction of massive structures go well beyond noise reduction tools or processing decisions. I have been an AB member since 2017. Data integrity matters to me. That is the only dog I have in this fight. Pointing out these types of inconsistencies is not defamation. These are real issues. We just need to decide if this is OK or not. If you think this is ethically OK without putting in a disclaimer that it is an artist's impression, simply put it in writing in the manifesto of the IOTD. Then we all know what we are dealing with and can go on and choose to be members or not.

Just saw this after posting. I already know you Arun, I like your images and even follow you. And I know how Bray process those images and it is not a secret.

Maybe as suggested, we can add a disclaimer to the post something like 'heavy noise reduction is used in the processing of this faint target, some details might show differences' --as we all accept by default when we buy things from internet. If this is helpful.

Thanks for the comment, Kay. And I recognize now I was more direct in that comment than I should have been. For that, I apologize. Perhaps a lot of the friction comes from those of us with scientific backgrounds versus those with artistic ones. Maybe, especially as some of us push the bounds of what is possible, we just need to make it explicit and accepted that many of the details are up to interpretation.

I'm really new to astrophotography. I had no idea structures changed or disappeared with longer integrations.

@Oscar (messierman3000) I've had really good luck with the original Topaz denoise on my images, but it should only be used on starless images. I'll run NXT at 0.4-0.6 with the detail slider at 0.15 in a linear state, after I've run MMT to target chrominance noise (NXT seems to have an easier time with salt-and-pepper noise). Then stretch however you like. For Topaz, I recommend leaving the "recover original detail" slider maxed and the sharpen setting at 0. Typically, I'll use the severe noise setting and set the noise reduction to 1 (regardless of whether or not the noise is actually "severe"). This seems to have little to no impact on structure and targets only background (BG) noise. The other presets, like standard and low light, seem to modify, enhance, or add structure. Then I'll continue my process in PI: stretch a little further, do color work, and determine whether another pass of NXT is necessary. Hope this helps!

+1 on this, especially the advice about the recover original detail slider. If you keep that at 100% it will minimize the problem with over-sharpened detail.

I'd say that Bray Falls, Marcel Drechsler et al. are entitled to keep their original processing methods private, if they so wish. Or sell them as processing courses. It's their business.

That said, striving to preserve data integrity is the proper way to do this, as far as I'm concerned, but we do compromise on this to create aesthetically pleasing images. It's not science, most of us want to make pretty pictures. Noise reduction on very faint targets will affect data, there's just no way around this, when you're on the bleeding edge of SNR. Some details will fade but some non-existent features can also pop up. Hard to distinguish them, when there's no reference image.

I use a bit of everything (NX, TopazDenoise sans AI "sharpening", CameraRaw, Dust and Scratches, etc).

Fully agree with this. Everyone here is allowed to not fully disclose their processes, regardless of fame or experience, even if there are objectors (albeit, I think an exception can be made for plagiarism and VERY blatant false positives [literally making up objects that don't exist/AI generated]). Do I think that there is the possibility of heavier than usual photomanipulation? Well, yes, but that's fine, as long as it isn't becoming an unshakable scientific paradigm, which I don't think it is, as it's just one paper of many to come, which only shows a rough view. I do believe it should be raw-er than it is, but that's just my personal taste. As long as it gets the point across that the arc is there, there shouldn't be much fuss. Otherwise, just enjoy yourself and do what you have to do. This isn't astrophysics, it's astrophotography. The lack of science is in the name.

Though if you are doing things for scientific gain, the creation of multiple highly accurate and extremely deep references should be a paramount goal when a new object is discovered. Consider the first, say, 5 or so images as semi-accurate 'prototypes' of sorts, until the shape is well-known and well-defined. This way we can have a good idea of what to study and where on the science side of things. That being said, I believe that every single person who has raw data to contribute to a reference project should. Minute differences in data can add up.

I think we should wrap this off-topic discussion up, as it seems we have deviated immensely from the original goal of the thread, and it has been blown way out of proportion.

Mikolaj Wadowski:

First of all, I think there is still confusion here. Switching between Astrobin images and seeing some minor parts that are slightly different on a very faint object has absolutely no impact on any of our lives. Let's admit that. Nobody will say 'oh shoot, that 30px long filament seems to be curved in by 10 degrees more, I'm not shooting it!'. The majority of the structure remains the same. It is not deceptive to me, because what I'll remember from this object does not change from one image to another. A very faint detail seems diffused, so what? That data difference is maybe on linear data only 1-2 value difference. That is a very natural and expected outcome.

Now this might matter to you of course! But in such case, you should be aware that this is a very faint object with heavy noise reduction. So you should expect such difference anyway.

No idea what noise reduction methods you're thinking of, but the ones I use don't make structures magically change shape, placement or texture... all by quite a significant amount, not just by a couple of pixels like you suggest.

I have had DeepSNR create a sort of dust halo around NGC 3953 in my NIR data, which I can say is probably not there. Albeit, the data being NIR, there is always that .0000000001% chance it is something unseen.

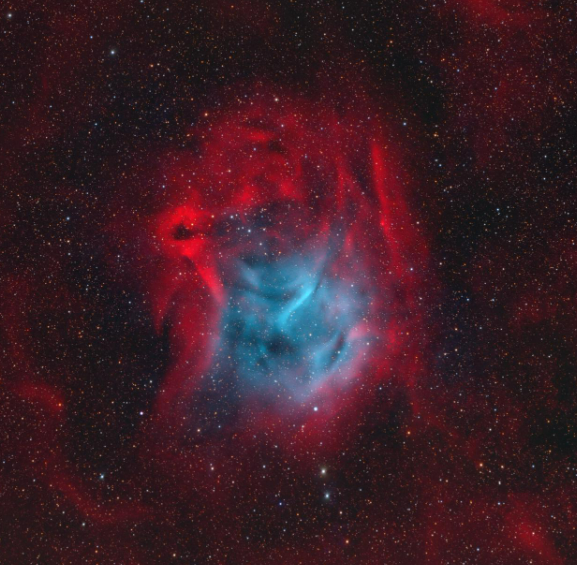

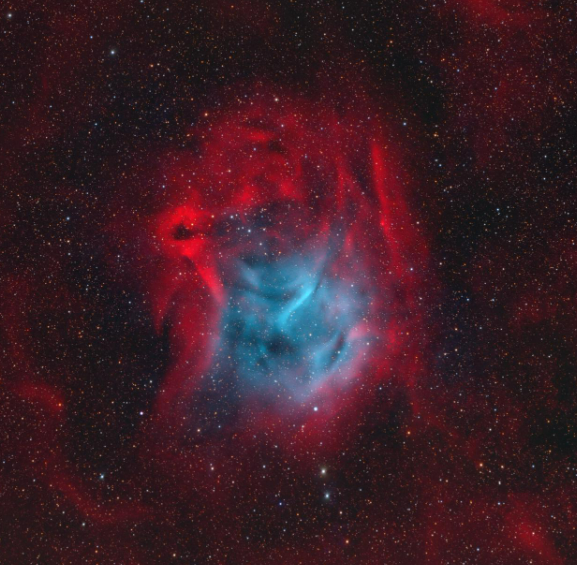

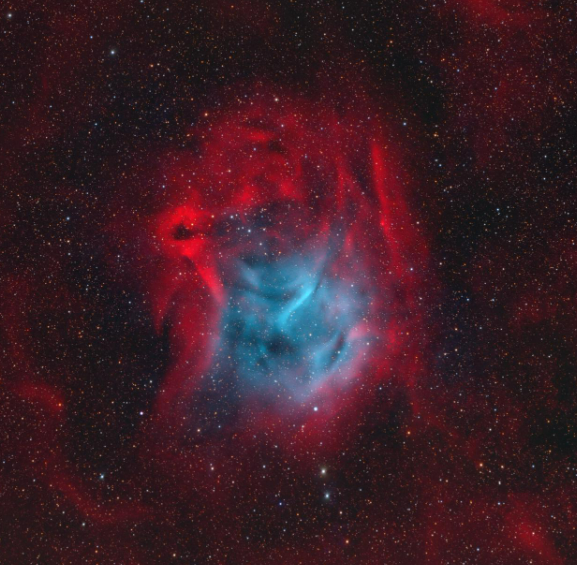

@AstroSidivis, thank you, it does help.

------------

Mathew Ludgate, a collaborator on one of Bray's projects, has responded to me. He told me his own NR method, the one he used for this:

(credit: Mathew Ludgate, PN Candidate Lu4)

I asked him if he could come here and write what he told me, plus any thoughts he may have about all of this.

IMO, it looks similar to Bray's nebulae in regards to NR. I mean it looks smooth, but not completely diffused like what would happen with just plain convolution.

I think Bray might be using a very similar NR workflow.


@Bob Rucker

I duplicated your image and inverted the duplicate, then added that as a mask onto the original, then added some convolution to the masked image, looks a little closer to Bray's edit

but I think Mathew has the closest recipe to Bray NR workflow - I'm hoping he's willing to talk here
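
For anyone who wants to try the inverted-mask convolution described above outside of an image editor, here is a minimal sketch in Python with NumPy. It is only an illustration under stated assumptions, not the exact steps used in the post: the image is assumed to be a 2-D float array scaled to [0, 1], the convolution is assumed to be a Gaussian blur, and the function names (`gaussian_kernel`, `gaussian_blur`, `inverted_mask_blur`) and the default `sigma` are hypothetical.

```python
import numpy as np


def gaussian_kernel(sigma: float) -> np.ndarray:
    """1-D Gaussian kernel, normalised so its weights sum to 1."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    return kernel / kernel.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian convolution with reflected borders."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    padded = np.pad(img, radius, mode="reflect")
    # Convolve every row, then every column; "valid" trims the padding again.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def inverted_mask_blur(img: np.ndarray, sigma: float = 2.0) -> np.ndarray:
    """Blur the image through its own inverted copy used as a mask.

    Assumes img is 2-D with values in [0, 1] (an assumption of this sketch).
    The mask is bright where the image is dark, so the faint background gets
    most of the smoothing while bright structure is left largely alone.
    """
    mask = 1.0 - img                      # the inverted duplicate
    blurred = gaussian_blur(img, sigma)   # the convolution step
    return mask * blurred + (1.0 - mask) * img
```

Because the mask is simply the inverted image, the amount of smoothing falls off continuously with brightness. That would be consistent with a result that looks smooth in the background without being uniformly diffused, though it says nothing about which tools anyone in this thread actually uses.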


Arun H:

Perhaps a lot of the friction comes from those of us with scientific backgrounds versus those with artistic ones. Maybe, especially as some of us push the bounds of what is possible, we just need to make it explicit and accepted that many of the details are up to interpretation.

Agreed! That discussion is as old as astroimaging, and I can recall similar discussions way back in the 1990s at several imaging conferences. In those days some of the "science purists" were even more aggressively "pure" than they are now. They even objected to some of the then-new forms of stretching!


everyone here is allowed to not fully disclose their processes

LOL, sometimes by the time I finish processing an image I cannot even remember all the steps myself, much less tell someone else what they were!


Oscar:

@Bob Rucker

I duplicated the image and inverted the duplicate, then added that as a mask onto the original, then added some convolution, looks a little closer to Bray's edit

but I think Mathew has the closest recipe to Bray NR workflow - I'm hoping he's willing to talk here

It's a given that Mathew has a lot more expertise than me, so any processes he might share will be far superior to anything I did. I think that my simple experiment shows that it's possible to achieve a result similar to Bray's even using basic NR tools. I suspect that the results of my experiment would have been much better using the original FITS rather than the 365 KB JPG image posted with Bray's image. That highly compressed, low-resolution image really did not give me much to work with.


Oscar:

@AstroSidivis , thank you, it does help

------------

Mathew Ludgate, a collaborator of one of Bray's projects has responded to me

he told me his own NR method, the one he used for this:

(credit: Mathew Ludgate, PN Candidate Lu4)

I asked him if he could come here and write and what he told me, plus any thoughts he may have about all of this

IMO, it looks similar to Bray's nebulae in regards to NR, I mean it looks smooth, but not completely diffused like what would happen with just plain convolution

I think Bray might be using a very similar NR workflow

Well, if he decides to open up about his workflow, you and whoever else is interested in this, including me, will have the answers we have been looking for. This image is very, very close to what Bray and a few others do. Excited to hear what he has to say!


Oscar:

@AstroSidivis , thank you, it does help

------------

Mathew Ludgate, a collaborator of one of Bray's projects has responded to me

he told me his own NR method, the one he used for this:

(credit: Mathew Ludgate, PN Candidate Lu4)

I asked him if he could come here and write and what he told me, plus any thoughts he may have about all of this

IMO, it looks similar to Bray's nebulae in regards to NR, I mean it looks smooth, but not completely diffused like what would happen with just plain convolution

I think Bray might be using a very similar NR workflow

@Oscar (messierman3000) I had one additional thought I'll add to the NR discussion. Your chosen method of background extraction will help keep the noise ceiling low. The more aggressive the DBE, the higher the noise seems to go. If the data will support it and you're a dab hand at using Gradient Correction, I think you'll find you have a much smoother result to work with. I realize that some data requires a good ole fashioned DBE to get a nice flat result, but this has really helped me out in the past. GOOD LUCK and CS!

**EDIT** Charles Hagen has pointed out to me privately (thanks Charles!):

- Neither actually has any effect on small-scale noise in the images; it's just that with fewer gradients the image can be stretched further, accentuating the noise. Gradient Correction can be slightly less aggressive due to the structure protection, but they are mathematically equivalent operations that both work exclusively on very large-scale structures in the image.

- That is, if you got an appropriate gradient subtraction with either tool, the noise would be identical.
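
The point in the EDIT above can be checked on synthetic data. The sketch below is only an illustration under stated assumptions: a least-squares plane fit stands in for the background model (it is not the algorithm used by DBE or Gradient Correction), the frame is a made-up linear gradient plus Gaussian noise, and the function names and numbers are hypothetical.

```python
import numpy as np


def synthetic_frame(size: int = 128, seed: int = 42) -> np.ndarray:
    """Made-up test frame: a smooth linear gradient plus Gaussian noise."""
    rng = np.random.default_rng(seed)
    yy, xx = np.mgrid[0:size, 0:size]
    gradient = 0.10 + 0.002 * xx + 0.001 * yy
    noise = 0.01 * rng.standard_normal((size, size))
    return gradient + noise


def fit_plane(img: np.ndarray) -> np.ndarray:
    """Least-squares plane fit, a simple stand-in for a background model."""
    h, w = img.shape
    yy, xx = np.mgrid[0:h, 0:w]
    design = np.column_stack([np.ones(h * w), xx.ravel(), yy.ravel()])
    coeffs, *_ = np.linalg.lstsq(design, img.ravel(), rcond=None)
    return (design @ coeffs).reshape(h, w)


def small_scale_noise(img: np.ndarray) -> float:
    """Per-pixel noise estimated from neighbouring-pixel differences."""
    return float(np.diff(img, axis=1).std() / np.sqrt(2))


image = synthetic_frame()
flattened = image - fit_plane(image)

print("small-scale noise before:", small_scale_noise(image))
print("small-scale noise after: ", small_scale_noise(flattened))
print("value range before:", image.max() - image.min())
print("value range after: ", flattened.max() - flattened.min())
```

In this toy case the pixel-to-pixel noise estimate is unchanged by the subtraction, while the overall value range shrinks a lot. That smaller range is what leaves room for a harder stretch, which is where the noise becomes more visible.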


Charles Hagen:

This is verifiably false. In fact in an earlier post in this thread I pointed out an example of Bray and Marcel's O-III arc paper that contains heavily manipulated images presented as real and honest data. I will be clear, it is my claim that these mid-scale structures have been almost entirely fabricated. They make no attempt to be transparent with their processing.

After thinking about it over the past day or so, just my thoughts:

- If an error of some kind was made in a scientific paper, I feel like that should be up to the journal's peer review process to catch. I am not sure what journal the paper was published in, but shouldn't the reviewers have realized that the data presented was stretched? Seems like a rather obvious thing. They could then have asked that the raw data be shared prior to accepting the article for publication. Or insisted that an established processing method be used. Maybe it was done? If it wasn't, I would say it is more a hole in the peer review process than anything else. Certainly, although it has been many years since I have published a journal article, the reviewers did ask me to add or clarify things.

- I think we should be careful when claiming that something was fabricated. If this was an established and well-known DSO, then if someone did fabricate a structure, it would be easily detected. But, having access to neither the raw data nor the processing method, it is better to assume that a non-repeatable structure was a result of the processing method, or perhaps even an error in the acquisition, than a deliberate fabrication. In the excitement of a discovery, the default could have been to publish. Certainly, when I was impressed with an image of my own, I have been excited enough to publish it without the more careful processing that could have resulted in a better or more accurate image.

- We also have to remember that the author in this case is not a professor or grad student. The approach in academia would have been to publish the base discovery while doing the more careful work in parallel and then publishing subsequent papers with additional details.

- Regardless of the accuracy of the structures presented, it nonetheless motivated others to capture them more carefully. It isn't as if a completely absent structure was presented. There IS without question an OIII arc in the M31 area, and there is such a thing as a Goblet of Fire Nebula, and we now know more about it than we did.

- Finally, as @Kay Ogetay, I, and others have said, we should calibrate our expectations when we go out and try to image something that has rarely been imaged before. Even in academia, attempts to repeat new discoveries will show differences. It is part of the learning process.

I still believe that Bray should be more transparent in the process he uses as it would help the community. It would, quite likely, even help him, since whatever process he comes up with can be refined and made better than he could alone. And I honestly very much doubt it would take away from his source of income. As he has mentioned - he does not even cover these methods in his courses. But, hopefully, other experienced members of the community will step in and share the same knowledge.


I'm just too excited. I lost patience with Mathew (sorry Mathew) and am going to tell you what he told me. But I also just found out something that no one told me about and Mathew didn't mention; I found it accidentally

just try it on an image:

Gigapixel AI, AI model: HQ - I suggest experimenting with the slider values, but I put all 3 at 100% for the JPEG Goblet of Fire that the last guy denoised with NXT - Gigapixel gave the nebula the "Bray look"

Mathew's NR is nothing too special or too specific anyway, I don't think Mathew will mind at all

for NR, Mathew uses "NXT, dust and scratches, sometimes a little gaussian blur, neat image and an old version of topaz. Topaz is usually the final NR step with the sharpening dialed down and careful checking to ensure no new structures are added"

those are his own words

by Topaz I assume he means Topaz Denoise, because he talks about an "old version"

what I can conclude is that the general workflow to get those Bray-quality images is NR first, like Topaz Denoise with NO sharpening (NXT, DeepSNR, or just any other NR software could work also), then Gigapixel afterwards

the Gigapixel test was done with a color JPEG image, it might or might not work better with grayscale - I haven't tried that yet

I contacted another guy, Aygan (another Bray collaborator), to see if he'll talk here too about his NR method, just to confirm things


Oscar:

neat image

Just tried the demo version and it does wonders. Of course it must not be used at 100% because it creates fake structures, but it looks like what @Kay Ogetay used in his Lagoon image and it gives a nice noise reduction without any mottling.


Oscar:

just try it on an image:

Gigapixel AI, AI model: HQ

Interesting. It does make a certain amount of sense in terms of what we are seeing. Of course as it is AI and not specifically designed for or trained on Astro images, it could perhaps sometimes result in, shall we say, "deviations" from reality. OTOH, I do have some of the older Topaz (non-AI) stuff that I use once in a while, more often for non-Astro, so might as well get Gigapixel (it's on sale for BF/CM). If nothing else I can use it for terrestrial images.


From what I saw on the Gigapixel web page, I wouldn't let it anywhere near my data. It truly is using AI to create detail that it guesses from its learning about human faces. Just look at the right eye of the little boy, last demo image.
