I'm sure this is a loaded question, since to some extent most astrophotographers use moderate AI tools like StarXTerminator, etc.

But I've spent 3 nights photographing the Cygnus Loop and more nights processing it. I came across some images that struck me as odd. For example, one person had a stunning image of the nebula. I was beside myself wondering how they got that image with the same equipment I have. I'd like to produce images with that kind of detail, so I was hoping to learn how.

I'm in a Bortle 5 area, and their image was taken at a Bortle 9 location. They had 34 frames; I had 121. Both their images and mine were taken with 300 s subs at the same gain. They didn't mention a filter, but I used my Optolong L-Quad.
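
For a rough sense of scale, the frame counts above can be turned into total integration time and a relative signal-to-noise estimate. This is a back-of-envelope sketch only: it assumes identical per-frame signal and purely random noise, so stacked SNR grows with the square root of the number of frames. It deliberately ignores the different skies (Bortle 5 vs. Bortle 9) and filters, which matter a great deal, and the function names are hypothetical.

```python
import math

SUB_EXPOSURE_S = 300  # both imagers used 300 s subs, per the post


def total_hours(frames: int, sub_s: float = SUB_EXPOSURE_S) -> float:
    """Total integration time in hours for `frames` subs of `sub_s` seconds."""
    return frames * sub_s / 3600.0


def relative_snr(frames_a: int, frames_b: int) -> float:
    """SNR of stack A relative to stack B.

    Assumes identical per-frame signal and purely random noise, so SNR
    scales with the square root of the number of stacked frames.
    """
    return math.sqrt(frames_a / frames_b)


mine, theirs = 121, 34
print(f"mine:   {total_hours(mine):.2f} h")
print(f"theirs: {total_hours(theirs):.2f} h")
print(f"SNR ratio (mine/theirs): {relative_snr(mine, theirs):.2f}")
```

Under those assumptions, 121 frames is about 10.1 hours against about 2.8 hours, and roughly 1.9 times the SNR, so raw integration time alone would not explain the other image looking better.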

So either I'm terrible at processing (I don't think I am) or they are using AI. However, since I'm relatively new to astrophotography (though not to regular photography), I was wondering…

How commonly is AI used to produce such stunning images in this hobby? Am I wrong to compare my work, hoping to glean knowledge? And to what extent do you think using AI to improve an image is acceptable?

|

Comparing your work to others is a natural part of improving, so don't hesitate to do it; it's how we all get better. AI tools are indeed used in processing, but they aren’t magic. In fact, if overused, AI can often degrade an image rather than enhance it.

It's usually quite easy to spot when AI has been overdone. The real difference comes down to the person behind the processing. Even with comparable equipment, someone with great skill and experience can produce far superior results compared to someone less experienced. It’s all about honing your skills, learning the nuances of processing, and understanding how to bring out the best in your data.

Keep practicing, and you’ll see your results improve!

|

So far, AI tools mostly assist with noise reduction and sharpening, making those steps easier and/or giving modestly better results than traditional methods. Like someone else said, when they are pushed too far, you can tell: too much noise reduction and everything looks plastic; too much sharpening and the details look wormy or suspect.
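
The "wormy" look of over-sharpening is not unique to AI tools; a classical unsharp mask shows the same failure mode. The following minimal 1-D sketch, in plain Python with made-up pixel values, sharpens a soft edge at increasing strengths and measures how far the result overshoots the true bright level and undershoots the dark one. It illustrates the general effect only and says nothing about how NoiseXTerminator or BlurXTerminator work internally.

```python
def box_blur(signal, radius=1):
    """Moving-average blur; samples past either end are clamped to the edge."""
    n = len(signal)
    out = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)]
                  for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def unsharp_mask(signal, amount, radius=1):
    """Classic unsharp mask: original + amount * (original - blurred)."""
    blurred = box_blur(signal, radius)
    return [s + amount * (s - b) for s, b in zip(signal, blurred)]


def halo(signal, dark=0.0, bright=1.0):
    """(overshoot above the bright level, undershoot below the dark level)."""
    return max(signal) - bright, dark - min(signal)


# A soft 0 -> 1 edge, like the slightly blurred boundary of a nebula filament.
edge = [0.0, 0.0, 0.0, 0.25, 0.5, 0.75, 1.0, 1.0, 1.0]

for amount in (0.5, 2.0, 5.0):
    over, under = halo(unsharp_mask(edge, amount))
    print(f"amount={amount}: overshoot={over:.3f}, undershoot={under:.3f}")
```

Both the bright overshoot and the dark undershoot grow in proportion to the amount; on a 2-D image, those halos along every edge are what start to read as artificial structure.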

Taken in isolation, those two tools could make an image less noisy or sharper than yours, but the surrounding processing they facilitate is likely to make a bigger difference, such as being able to stretch out finer detail or push saturation further with less detriment.

Since you are relatively new, it could be that "they" are simply more skilled at processing than you are right now. The good news is that you can learn and improve! And you will! I am by no means an expert, but my processing flow took a long time to develop into something I am only somewhat comfortable and somewhat satisfied with.

|

AI is present in 99% of the APODs

Some will say, "yeah, BlurX is used, but it's not a big deal," while it fixes or enhances sharpness, star shape, star size, chromatic aberration and spherical aberration.

I've seen what it does to crappy images. In some cases it turns an image acquired with terrible guiding, tilt, bad focus and chromatic aberrations into something you see and think "oh that's beautiful".

Indeed, it fixes a lot of problems. Add SPCC to that and you no longer need to worry about star colors. To some extent, using AI for noise reduction is reasonable, but for me at least, that is where I draw the line, and I won't go beyond it.

I enjoy looking at beautiful images even though I know AI is very present, and if the imager is happy, then I'm happy. I just don't apply BlurX to my images, as I prefer looking at my own data, even if by current standards (or any standards, really) my images are not impressive at all; at least I feel like they're still my data. Even when I over-sharpened, I did it myself. It is certainly not a popular opinion, as you'll see below, but that is what I think.

|

"AI" is just another tool, like color saturation. Experienced processors are wise enough to apply such potent tools (any of them) with moderation. I personally believe that processing accounts for more than 50% of a good astrophotograph. Good data can easily be ruined by bad processing; on the other hand, bad data can be made presentable with a wise choice of workflow. The key to improving one's processing skills is to keep this observation in mind (I won't say "fact"), to view as many photographs as possible, and to try processing one of your own images to match a similar astrophoto you have liked. And finally, to ask for critique!

|

Two reasons why the other guy could've gotten a better image than you: 1. better processing, and 2. a narrower, dedicated dual-narrowband filter. Not necessarily AI tools, though AI tools do help when you use them right. I always try not to cross the line and make an astrophoto over-processed and visibly disgusting, which can happen very quickly with AI tools if they're not used right.

I know that in astrophotography the colors, at least, are subjective, and no matter how hard you try, you will have some inaccuracy somewhere, and that's just life. (That forces me to believe my hobby is only a form of art, with maybe some science in regard to nebular details and the target wavelengths, but I doubt a scientist, a student or any science addict is going to learn something from one of my images... at least, not for a while longer.) My gut tells me if I'm really distorting my data into something that can't exist and is plainly (or subtly) ugly; my gut rules most of my processing decisions.

|

Speaking from my point of view, AI is a nice tool to help me process my images. Processing is my least favourite part, and I put some effort into getting better, but in the end I hardly improve, for several reasons. Time is the main factor. Processing is part of astrophotography, so I do it, but most of the time half-heartedly. With tools like BlurX and similar, I don't need to put as much effort into it, which is nice.

I can think this way because my goal is not to create scientifically correct images; for those, AI must be used with caution. But I assume that most people here are trying to create images that please the viewer's eye, and AI has become part of that. It is another technique, like many others developed in the past. At some point many of them were revolutionary, and today we don't even think about them.

In regular photography, many processing techniques became the basis for a new style of image. Think about HDR. There was a time when so many images you found on the web were "unreal" HDRs. They had their charm and looked great even to me, but soon people were oversaturated with the look and the hype was over. The same happens with colors and saturation. Many images look really saturated and, up to a point, beautiful. But most of the time I prefer the more natural-looking ones. In this respect, I seem to belong to a minority.

Astrophotography is slower, because we need time to collect data for one single final image. The world is not flooded with good images, because the signals are too faint to be captured by the masses. That being said, we can recognize some development in AP as well. There are different styles of images out there, but they are not as obvious as in other areas of photography. We are a niche, and developing tools for us is not a priority for most programmers. But there is obviously some improvement.

During processing, I compare my work with final images made by other people. But I realize that I often take a different road than the one I had in mind in the first place, just because I did not like the results on my own image. I present my images to others here on AstroBin like we all do, but I am less interested in what other people think. This may sound like an excuse not to put time into improving my skills, but it isn't. I just want to share what I created and get in touch with people.

To summarize, I am more than happy to choose what I prefer to do with my images. AI is helpful in this respect, but it isn't mandatory. If you decide to keep it simple and take your time to get the results you want, I am more than willing to honour your work. It's completely up to you how your images look and what you are trying to achieve. Everything is right, as long as you take the time to think about it and make your decision. That's art, and no style is beautiful to everyone.

CS

Christian

|

Thanks, everyone, for your helpful comments.

I've always been something of a naturalist when processing my ordinary photography: travel, culture, landscape, etc. Astrophotography raises the question, for me, of how much AI is simply unrealistic. Perhaps I'm comparing my idea of a more natural image to something exceptionally artistic.

I do need to learn better processing, for sure. But, also, it could be that a narrowband filter would yield some better results too.

Overall, I think I've done well for my skill level. Maybe I need to decide what I want to achieve in an image, which is something I'm still discovering.

|

Certain tools make some processes a lot easier than before. I think part of what makes them so hyped is that people who weren't actually able to use things like deconvolution now are. More people are also getting into AP because of the increased simplicity, perhaps without enough understanding of what happens, and so it becomes a sort of "magic".

The fact is that deconvolution, star reduction and noise reduction are all possible to achieve without any of the Xterminator plugins as well. But most people wouldn't use a horse and cart to get from A to B if they had the opportunity to use a car or a train.

With BXT in its correct-only mode, I see practically no visible change on my data. With bad data it does fairly well, but if you look carefully it can quite quickly produce false double stars, etc. So use it with care; it's not a straight-up replacement for good optics and good data, as some make it out to be. Still, people are different: some think replacing patches of sky and introducing stars that aren't really there is completely fine and don't care. I personally do care.

I'd go as far as saying processing is at least 80% of the hobby. Anyone can put a telescope on a mount and start a session with everything controlled remotely, but being able to process the data requires a fair bit of practice. Depending on the project I can spend days, sometimes weeks or months before I'm happy with an image. Then I'd still probably find something I wasn't quite happy with. I use all of the xterminator tools and haven't touched any manual methods in years myself.

|

IMHO, what these "AI" tools can do seems to be a bit overstated. BlurX is not a magic bullet. It is pretty powerful, but it is not magic. It can't substitute for integration time and learning to process. It can make a good image better, but it cannot take a poor image and make it great. Honestly, integration time, star removal and separate processing are far more important to me than BlurX.
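
One common way to put stars back after processing a starless layer separately is a screen blend, 1 - (1 - a)(1 - b). The sketch below assumes both layers have already been stretched into the [0, 1] range; the tiny 2x3 "images" and the function names are hypothetical, and other recombination methods (for example, plain addition with clipping) are also used.

```python
def screen(a: float, b: float) -> float:
    """Screen blend of two pixel values in [0, 1]: 1 - (1 - a) * (1 - b)."""
    return 1.0 - (1.0 - a) * (1.0 - b)


def recombine(starless, stars):
    """Screen a stars-only layer back onto a separately processed starless layer.

    Both layers are lists of rows, already stretched into the [0, 1] range.
    """
    return [[screen(a, b) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(starless, stars)]


# Hypothetical 2x3 crops: faint nebulosity, and a stars-only layer with one star.
starless = [[0.20, 0.30, 0.25],
            [0.22, 0.28, 0.24]]
stars = [[0.00, 0.90, 0.00],
         [0.00, 0.10, 0.00]]

combined = recombine(starless, stars)
for row in combined:
    print([round(v, 3) for v in row])
```

A screen blend never pushes a pixel above 1 and leaves the starless layer untouched wherever the star layer is black, which is why it is a popular choice for this step.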

And just a comment, but using PI and finishing in Photoshop is going to bring a much greater improvement than using DSS and Ps. At least it did for me. Certainly a much bigger improvement than adding BlurX.

|

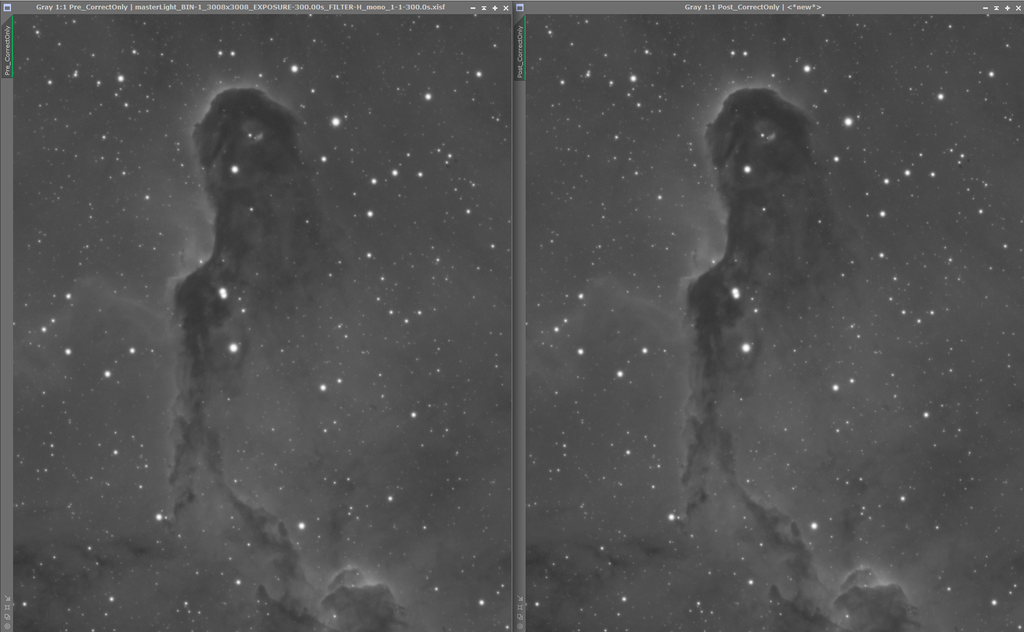

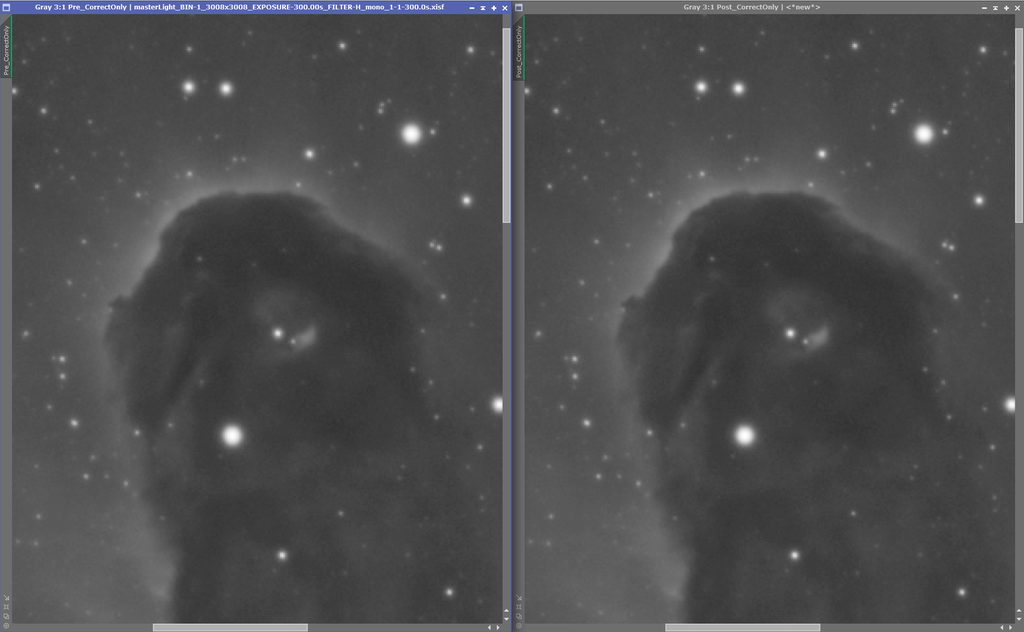

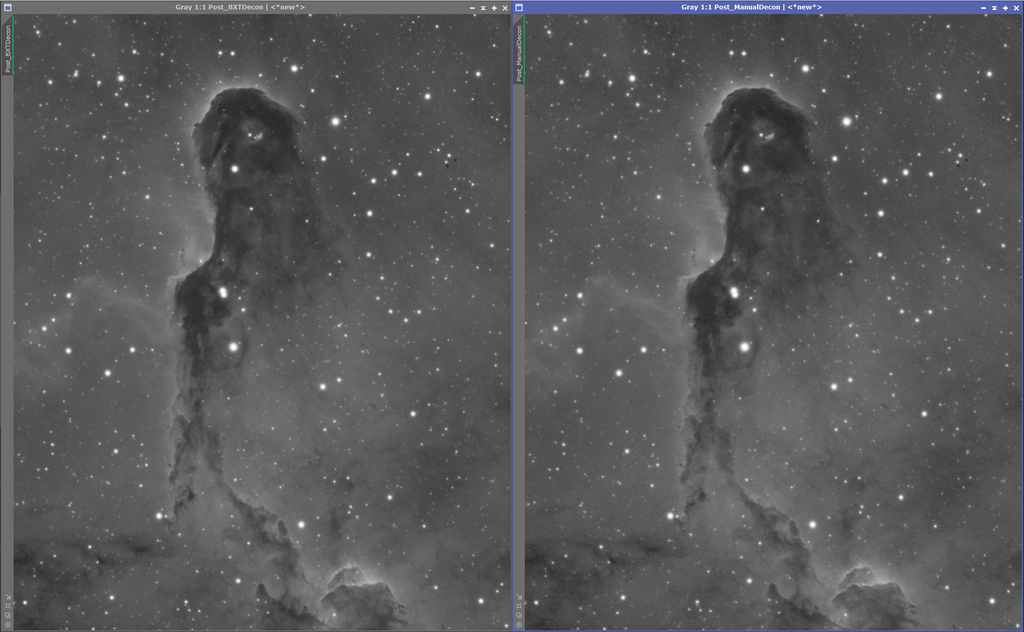

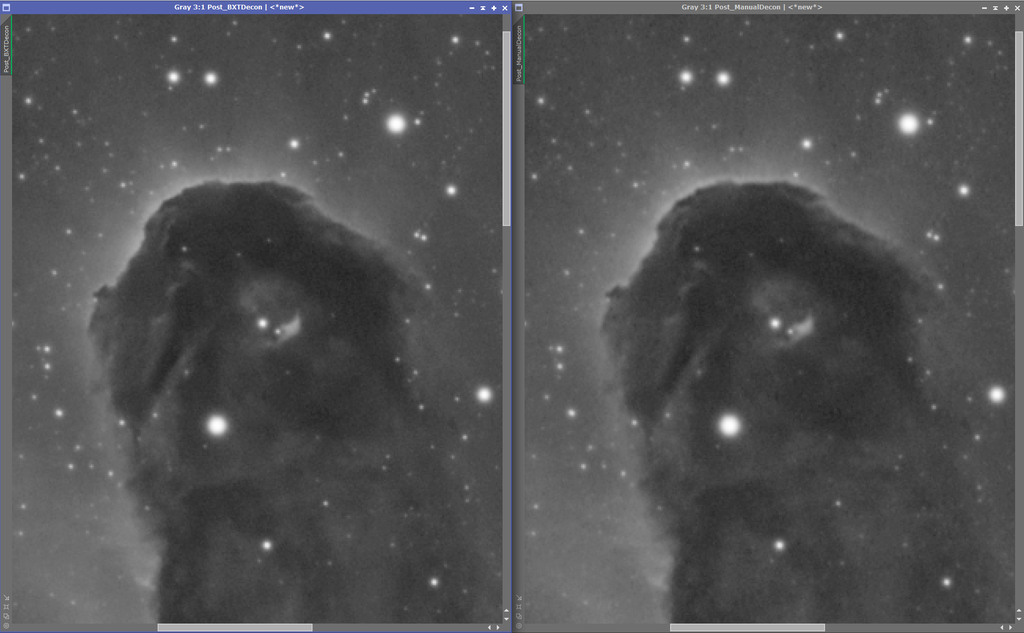

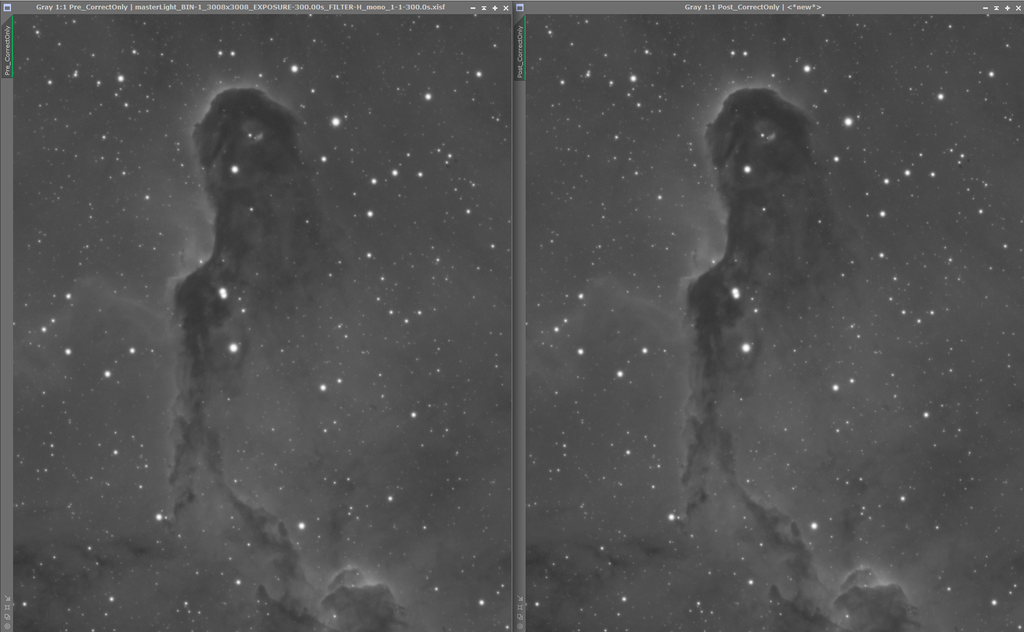

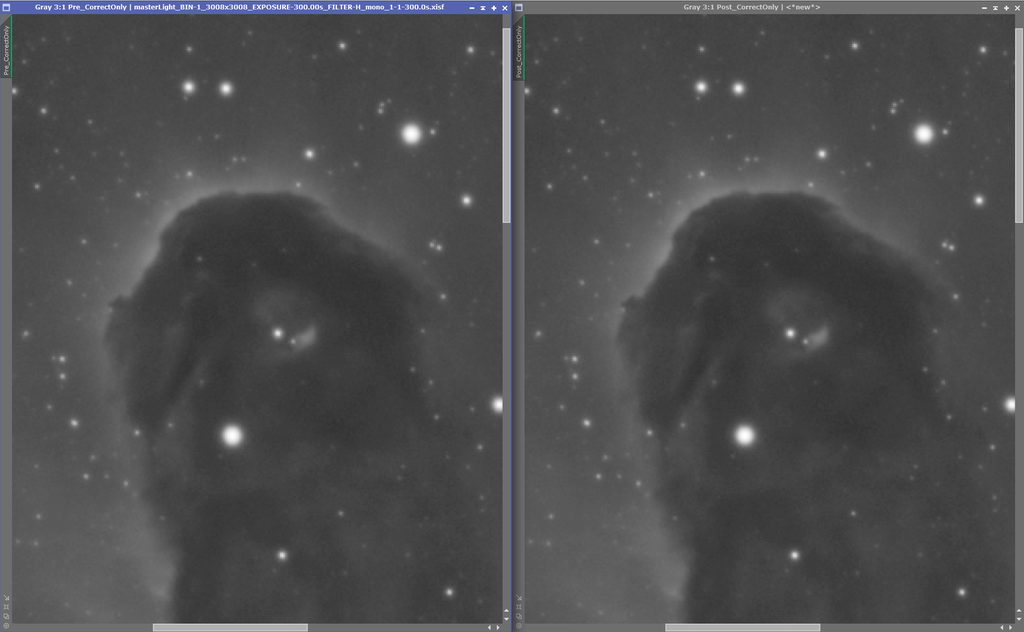

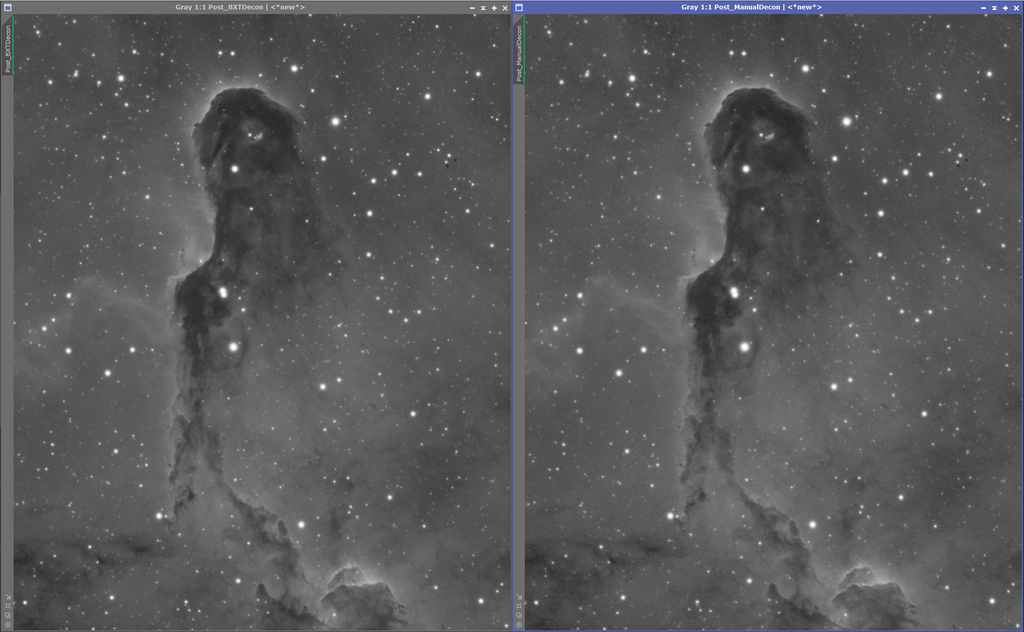

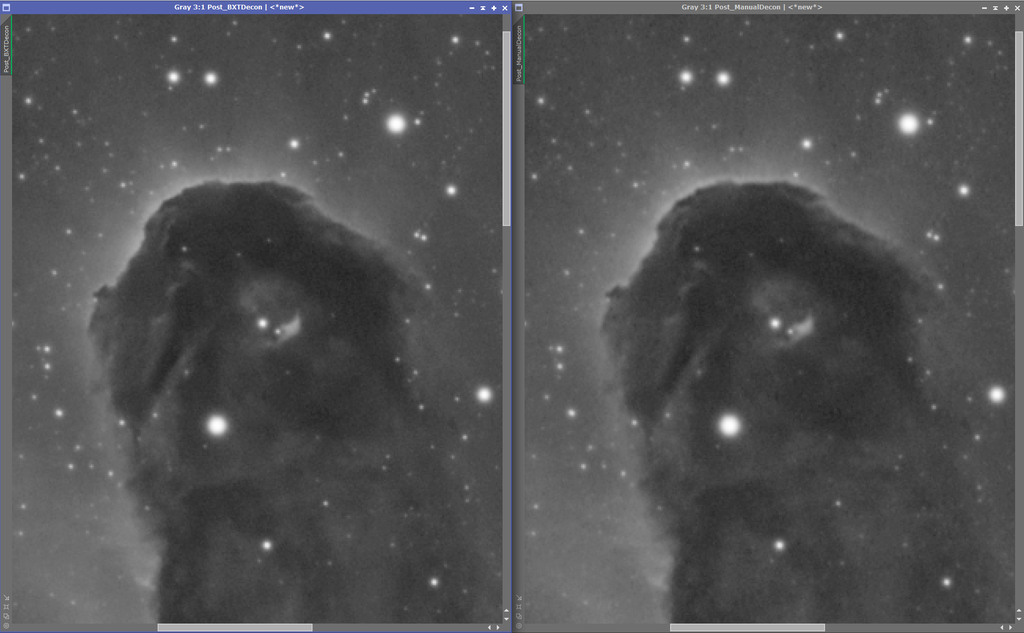

I agree. I briefly used StarNet before moving to SXT, and it was night and day.

Either way, I thought I'd add some screenshots to show some examples as well, using a cropped FOV from some old data I have. The image is linear and only has a preview stretch enabled.

Before and after running BXT in correct-only mode, I personally don't see any particular difference.

Even more zoomed in:

And here's a quick comparison of deconvolution between BXT and PI's own Deconvolution process. It's an unfair match, since I'm no expert on that process and haven't opened it in years, so only basic settings were applied. It still shows, to some extent, that this improvement can also be achieved without BXT if one cares to learn how to use the settings properly.

Even more zoomed in:

The deconvolution process is, of course, only one of many different processes you can apply to make an image appear sharper, have more contrast, etc., so this is just a very basic comparison. For me it all comes down to whether one is willing to pay for the option of not having to use so many manual tools, and to have smarter tools that can make those manual tweaks for you based on what's in the image.

EDIT: Forgot to mention that I ran BXT with non-stellar sharpening at 0.6; it probably had 0.1 of star sharpening as well, which wasn't intended. So bear that in mind.

|

Christian Großmann:

Speaking from my point of view, AI is a nice tool to help me process my images. Processing is my least favourite part, and I put some effort into getting better, but in the end I hardly improve, for several reasons. Time is the main factor. Processing is part of astrophotography, so I do it, but most of the time half-heartedly. With tools like BlurX and similar, I don't need to put as much effort into it, which is nice.

Christian

I agree with Christian. Maybe I am still a newbie, but I can't get an image I would want to share without stacking, background extraction, BlurXTerminator, some StarXTerminator and color calibration (depending on the image type). All of those are different elements of AI. I like to think of image processing as art and image capture as science. If I'm honest, I'm not an artsy guy, so the quicker the path to something I view as pretty and can be proud of, the better. If my nebula has more red or blue than the "real thing", I can live with that.

|

James, you didn't mention anything about your processing tools/workflow.

Different tools do produce different results, but the choice of tool doesn't make a really huge difference once you know the basics of them. However, really knowing your tools in detail and (!!) knowing more than the basics of colour imaging makes a huge difference. I studied a great many processing workflows that people shared in various internet resources until I arrived at results that finally pleased me. In terms of tools, I ended up with PixInsight and Adobe Lightroom / Photoshop to get the results I like. What I learned is that processing astrophotography is really different. If you want to benchmark your processing, you can get raw Hubble images, process them, and compare your results with what was published. In the following, please find my result of ARP 273, based on data from Hubble.

|

There is one thing that bothers me a bit about this discussion and it is centered on the original premise of using AI in image processing. Artificial intelligence is a term that applies to the overall implementation of a system that could in principle take all of your raw data and make all of the appropriate processing choices to turn it into a final, completed image. We don’t yet have any such thing; although, it could almost certainly be done. Systems with artificial intelligence are designed to mimic the operation of the human brain. Strictly speaking, we do not use AI in image processing—at all. The tools that are available for image processing are much more limited than that.

Processing tools like Russ Croman’s BlurXterminator use something called a “neural network” (NN). An NN is a subset of what might be used in a true “AI” system; it is designed to mimic a biological neural system, and it relies on a set of training data to arrive at a “best guess” solution. In the case of BXT, it’s another way to approach the age-old, ill-posed problem of deconvolution, and there have been a million papers written on that subject. For a long time, a big goal in image science was to figure out how to deconvolve an image to arrive back at a “better” view of what the object looked like. The challenge was how to avoid the influence of noise and how to minimize artifacts, most often ringing. I’m always amused by folks who seem alarmed that Russ used Hubble data to train BXT, out of concern that the algorithm might introduce something that’s not actually there. That is the EXACT same concern that has always existed with any deconvolution algorithm, whether it uses an NN or not! The beauty of the NN solution is that it is vastly less sensitive to the influence of noise, and convergence is never a problem. It is simply up to the user to determine whether the algorithm is doing something good or something bad to the data, and again, that’s exactly what we have to do with any deconvolution algorithm. In my view, as a tool for astronomical image deconvolution, BXT is an enormous leap forward and an extremely clever use of space-based image data.
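
For comparison with the neural-network approach described above, here is a minimal sketch of a classical, non-neural deconvolution: the Richardson-Lucy iteration, in 1-D and plain Python. It assumes a known point-spread function (PSF) and noise-free data, neither of which holds for real images, and it is not how BXT works. It only shows the basic idea: repeatedly re-blur the current estimate, compare it to the observation, and multiply in a correction.

```python
def convolve(signal, kernel):
    """'Same'-size 1-D convolution with zero padding (odd-length kernel)."""
    half = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i - (k - half)  # true convolution: the kernel is flipped
            if 0 <= j < n:
                acc += w * signal[j]
        out.append(acc)
    return out


def richardson_lucy(observed, psf, iterations=30, eps=1e-12):
    """Classical Richardson-Lucy deconvolution (no neural network involved).

    Each iteration re-blurs the current estimate with the PSF, divides the
    observation by that re-blurred estimate, and multiplies the estimate by
    the ratio blurred with the mirrored PSF.
    """
    estimate = [sum(observed) / len(observed)] * len(observed)  # flat start
    psf_mirrored = psf[::-1]
    for _ in range(iterations):
        reblurred = convolve(estimate, psf)
        ratio = [o / max(r, eps) for o, r in zip(observed, reblurred)]
        correction = convolve(ratio, psf_mirrored)
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate


# A single "star" blurred by a small symmetric PSF (hypothetical values).
psf = [0.25, 0.5, 0.25]
truth = [0.0] * 15
truth[7] = 1.0
observed = convolve(truth, psf)
restored = richardson_lucy(observed, psf)

print("observed peak:", round(max(observed), 3))
print("restored peak:", round(max(restored), 3))
```

With real, noisy data, running too many iterations amplifies noise and produces ringing, which is the classic weakness mentioned above; practical implementations add regularization or stop early.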

Obviously BXT is not the only NN driven tool out there. SkyWave from Innovation Foresight is another such tool that uses a NN to derive wavefront information from raw star test data. It has quietly revolutionized optical alignment in the amateur world and it’s being used in many other professional applications as well. All of this stuff helps us as amateurs to produce images with our backyard equipment that rival some of the best images taken with the world’s largest telescopes only ~40 years ago.

John

|

James,

you didn't mention anything about your processing tools/workflow.

My workflow consists of DSS (mainly) or ZWO Studio for stacking, then Photoshop for processing. I do use StarXTerminator, GradientXTerminator and HLVG, but I've also taught myself to do the jobs the AI tools do, so as not to become dependent on them and to have a bit more control.

I think I get good results, but not exceptional ones.

I think my real concern is that I don't want to beat myself up trying to achieve a result I've seen in someone else's image if it can only be done with AI: manipulating the image with details that look amazing but aren't there or can't be achieved through photography. Maybe that's where the line is between astrophotography and artistic rendering?

|

Clayton Ostler:

I agree with Christian. Maybe I am still a newbie, but I can't get an image I would want to share without stacking, background extraction, BlurXTerminator, some StarXTerminator and color calibration (depending on the image type). All of those are different elements of AI. I like to think of image processing as art and image capture as science. If I'm honest, I'm not an artsy guy, so the quicker the path to something I view as pretty and can be proud of, the better. If my nebula has more red or blue than the "real thing", I can live with that.

I don't disagree. The point I'm trying to make about this kind of AI is that it isn't comparable to the generative AI it's typically associated with. These tools aren't generative. I think of them like this: they are similar to existing processes native to PI, but the AI works out the optimal settings based on the information in the image (which is what it is trained to do), apart from the obvious ones we choose, like the amount to apply and whether we want non-stellar or stellar sharpening. They do not, however, insert false information into your image, except when you overdo it by choosing far too high an amount, which leads to strange artifacts. The same would happen if you over-sharpened with manual processes like deconvolution, unsharp mask, local histogram equalization or HDRMT, to mention a few. You could get similar or equal results without using them, which goes to show this; at least, that is what I was trying to show with my screenshots. Stacking, background extraction and color calibration are absolute, basic necessities for everyone, I'd say, but I have never considered those processes/scripts to be AI (unless you are using something like GraXpert).
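
As an example of a non-AI background-extraction step, the sketch below fits a tilted plane, z = a + b*x + c*y, to a few user-chosen background samples by least squares and subtracts it from the image. Dedicated tools such as PixInsight's ABE and DBE fit more flexible surfaces; the plane model, the sample positions and the synthetic 5x5 image here are simplifying assumptions for illustration, and the function names are hypothetical.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(samples):
    """Least-squares fit of z = a + b*x + c*y to (x, y, z) background samples.

    Builds the 3x3 normal equations and solves them with Cramer's rule.
    """
    n = len(samples)
    sx = sum(x for x, _, _ in samples)
    sy = sum(y for _, y, _ in samples)
    sxx = sum(x * x for x, _, _ in samples)
    syy = sum(y * y for _, y, _ in samples)
    sxy = sum(x * y for x, y, _ in samples)
    sz = sum(z for _, _, z in samples)
    sxz = sum(x * z for x, _, z in samples)
    syz = sum(y * z for _, y, z in samples)
    normal = [[n, sx, sy], [sx, sxx, sxy], [sy, sxy, syy]]
    rhs = [sz, sxz, syz]
    d = det3(normal)
    coeffs = []
    for col in range(3):
        m = [row[:] for row in normal]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(det3(m) / d)
    return coeffs  # [a, b, c]


def subtract_background(image, sample_points):
    """Fit a plane to the image values at (x, y) sample points and subtract it."""
    samples = [(x, y, image[y][x]) for x, y in sample_points]
    a, b, c = fit_plane(samples)
    return [[v - (a + b * x + c * y) for x, v in enumerate(row)]
            for y, row in enumerate(image)]


# Synthetic 5x5 frame: a linear gradient plus a faint "object" at the centre.
image = [[0.10 + 0.02 * x + 0.01 * y for x in range(5)] for y in range(5)]
image[2][2] += 0.05
# Background samples chosen away from the object (the user's job in practice).
points = [(0, 0), (4, 0), (0, 4), (4, 4), (2, 0), (0, 2)]
flat = subtract_background(image, points)
for row in flat:
    print([round(v, 4) for v in row])
```

Nothing here is learned from training data: the model is whatever surface you choose, fitted only to samples you pick.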

|

James:

My workflow consists of DSS (mainly) or ZWO Studio for stacking, then Photoshop for processing. I do use StarXTerminator, GradientXTerminator and HLVG, but I've also taught myself to do the jobs the AI tools do, so as not to become dependent on them and to have a bit more control.

I think I get good results, but not exceptional ones.

I think my real concern is that I don't want to beat myself up trying to achieve a result I've seen in someone else's image if it can only be done with AI: manipulating the image with details that look amazing but aren't there or can't be achieved through photography. Maybe that's where the line is between astrophotography and artistic rendering?

My personal experience is that there are two really critical steps in processing if you want to get the maximum out of the raw data: the move from linear to non-linear and, once the image is non-linear, pulling out maximum detail with a curves transformation (or similar). If these are done well, you don't need anything like AI. Another lesson from my own experience: I keep my workflow as simple as possible, but when processing a nebula I work only on a starless image and add the stars back at the end.
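
One common way to make the linear-to-non-linear move is a shadows clip followed by a midtones transfer function, MTF(m, x) = (m - 1)x / ((2m - 1)x - m), which maps 0 to 0, m to 0.5 and 1 to 1. As I understand it, this is the curve behind PixInsight's HistogramTransformation and screen-transfer previews. The sketch below picks m so that the image median lands on a target background level; the clip point, the 0.25 target, the sample pixel values and the function names are assumptions for illustration, not the poster's workflow.

```python
import statistics


def mtf(m: float, x: float) -> float:
    """Midtones transfer function: 0 -> 0, m -> 0.5, 1 -> 1 (for 0 < m < 1)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    return (m - 1.0) * x / ((2.0 * m - 1.0) * x - m)


def midtones_for(x0: float, y0: float) -> float:
    """Midtones balance m for which mtf(m, x0) == y0 (0 < x0, y0 < 1)."""
    return x0 * (1.0 - y0) / (x0 + y0 - 2.0 * x0 * y0)


def stretch(pixels, shadows_clip: float, target_background: float = 0.25):
    """Linear -> non-linear: clip the shadows, rescale, then apply one MTF
    chosen so that the image median lands on `target_background`."""
    rescaled = [min(max((p - shadows_clip) / (1.0 - shadows_clip), 0.0), 1.0)
                for p in pixels]
    m = midtones_for(statistics.median(rescaled), target_background)
    return [mtf(m, p) for p in rescaled]


# Hypothetical linear pixels: sky background ~0.012, some nebulosity, one star.
linear = [0.010, 0.011, 0.012, 0.013, 0.015, 0.030, 0.200]
stretched = stretch(linear, shadows_clip=0.008)
print([round(v, 3) for v in stretched])
```

Solving MTF(m, x0) = y0 for m gives m = x0(1 - y0) / (x0 + y0 - 2*x0*y0), which is what `midtones_for` computes. Because the MTF is monotonic, the stretch never reorders pixel brightness; it only redistributes tones.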

|

I tend to agree with @John Hayes: the term "AI" is used pretty loosely.

Although there's machine learning behind the Xterminator series, BlurXT and NoiseXT are really just improvements on processes we've already used. For example, before BlurXT we had roll-your-own deconvolution: challenging, time-intensive, and imo it produced modest results. The techniques BlurXT uses are improvements on the basic deconvolution process, but it doesn't make a photo better, only clearer. I contrast this with AI in the terrestrial photo realm, where AI is used more liberally in generative ways: creating things that aren't really there.

However, none of these are going to make images better. They may technically improve them, but a poorly rendered image that BlurXT is used on is still a poorly rendered image, just a clearer one.

Brian
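To make the "roll-your-own deconvolution" point concrete, here is a minimal sketch of Richardson-Lucy, one classic hand-made deconvolution. It assumes a 1D signal, a known and normalised PSF (the 3-tap kernel is hypothetical) and zero padding at the edges; real astro deconvolution is 2D, has to estimate the PSF from stars, and needs regularisation to keep noise from being amplified. It is not a description of how BlurXTerminator works internally.

```python
def convolve(signal, kernel):
    """1D convolution with an odd-length kernel; samples outside the signal count as 0."""
    half = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + half - k  # convolution flips the kernel relative to correlation
            if 0 <= j < n:
                acc += w * signal[j]
        out.append(acc)
    return out


def richardson_lucy(observed, psf, iterations=50, eps=1e-12):
    """Richardson-Lucy deconvolution of a 1D signal with a known, normalised PSF."""
    estimate = [sum(observed) / len(observed)] * len(observed)  # flat, positive start
    psf_mirror = psf[::-1]  # mirrored PSF: the adjoint of the blur, used for the correction
    for _ in range(iterations):
        reblurred = convolve(estimate, psf)
        ratio = [o / (r + eps) for o, r in zip(observed, reblurred)]
        correction = convolve(ratio, psf_mirror)
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate


# A single hypothetical star, blurred by an assumed 3-tap PSF.
psf = [0.25, 0.5, 0.25]
star = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
observed = convolve(star, psf)
restored = richardson_lucy(observed, psf)
print([round(v, 3) for v in observed])
print([round(v, 3) for v in restored])
```

Each iteration re-blurs the current estimate, compares it with the data, and multiplies in a correction, so the blurred peak gradually sharpens back toward the original point source.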

|

Brian Valente:

I tend to agree with @John Hayes: the term "AI" is used pretty loosely.

Although there's machine learning behind the Xterminator series, BlurXT and NoiseXT are really just improvements on processes we've already used. For example, before BlurXT we had roll-your-own deconvolution: challenging, time-intensive, and imo it produced modest results. The techniques BlurXT uses are improvements on the basic deconvolution process, but it doesn't make a photo better, only clearer. I contrast this with AI in the terrestrial photo realm, where AI is used more liberally in generative ways: creating things that aren't really there.

However, none of these are going to make images better. They may technically improve them, but a poorly rendered image that BlurXT is used on is still a poorly rendered image, just a clearer one.

Brian

I guess I am really out of the loop, because the closest thing to generative AI that I am aware of in AP is BlurXT. I agree that if there are other tools that "generate an image", that's an entirely different scenario. I guess I could type "create an image of M42" into ChatGPT, but that's definitely not AP.

|

Clayton Ostler:

I guess I am really out of the loop, because the closest thing to generative AI that I am aware of in AP is BlurXT. I agree that if there are other tools that "generate an image", that's an entirely different scenario. I guess I could type "create an image of M42" into ChatGPT, but that's definitely not AP.

I think maybe there's a misunderstanding. BlurXT is not generative. There is no generative AI in astro that I'm aware of (or that's used in common imaging applications like PixInsight). My point was exactly that there are *not* generative AI tools in astro processing, compared with traditional image editing apps like Photoshop that include generative AI and seem to be accelerating in this direction. In the latest Photoshop beta you can start without any image at all, just a prompt? smh

Brian

|

Clayton Ostler:

I guess I am really out of the loop, because the closest thing to generative AI that I am aware of in AP is BlurXT. I agree that if there are other tools that "generate an image", that's an entirely different scenario. I guess I could type "create an image of M42" into ChatGPT, but that's definitely not AP.

BXT is not generative! As I previously explained, it uses a neural net to make a "best guess" about the signal distribution that best matches the blurred data within an image patch. The training data contains a large library of blurred images (typically 0.5M to 1M samples) generated from known un-blurred "ideal" image patches. The neural net simply finds the best-fit match in the library for a patch in your image to determine what the un-blurred data originally looked like. The accuracy of the system depends almost completely on the quality of the training data.

John
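John's description can be illustrated, very loosely, with a toy that skips the neural net entirely: build (blurred, sharp) pairs from known ideal patches, then answer a query with the sharp partner of the closest blurred example. A minimal sketch, assuming 1D patches, a hypothetical 3-tap blur and a library of single point sources. A trained network generalises from such pairs rather than searching a table, so this is not how BXT is implemented; it only illustrates why the result depends on the training data.

```python
import random

KERNEL = (0.25, 0.5, 0.25)  # assumed blur for this toy example


def blur(patch):
    """Blur a 1D patch with the symmetric 3-tap KERNEL (zero padding at edges)."""
    n = len(patch)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(KERNEL):
            j = i + 1 - k
            if 0 <= j < n:
                acc += w * patch[j]
        out.append(acc)
    return out


def build_library(n_samples, patch_len, seed=0):
    """Training pairs (blurred, sharp) made from known 'ideal' single-star patches."""
    rng = random.Random(seed)
    library = []
    for _ in range(n_samples):
        sharp = [0.0] * patch_len
        sharp[rng.randrange(patch_len)] = rng.uniform(0.2, 1.0)  # one star, random brightness
        library.append((blur(sharp), sharp))
    return library


def best_guess(observed, library):
    """Return the sharp patch whose blurred version is closest to `observed`."""
    def squared_error(pair):
        blurred, _ = pair
        return sum((a - b) ** 2 for a, b in zip(observed, blurred))
    return min(library, key=squared_error)[1]


library = build_library(n_samples=2000, patch_len=5)
observed = blur([0.0, 0.0, 0.6, 0.0, 0.0])
guess = best_guess(observed, library)
print([round(v, 2) for v in guess])
```

If you fed this toy a patch containing two close stars, it could only answer with the single star it knows best, which is the toy version of "accuracy depends on the training data".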

|

Clayton Ostler:

Christian Großmann:

Speaking from my point of view, AI is a nice tool to help me process my images. Processing is my least favourite part, and I put some effort into getting better at it, but in the end I hardly improve, for several reasons. Time is the main factor. Processing is part of astrophotography and so I do it, but most of the time half-heartedly. With tools like BlurX and similar, I don't need to put as much effort into it, which is nice.

I can think this way because my goal is not to create scientifically correct images. In that case, AI must be used with caution. But I assume that most of the people here are trying to create images that please the viewer's eye, and AI has become part of that. It is another technique, like many other techniques that have been developed in the past. At some point many of them were revolutionary, and today we don't even think about them.

In real-life photography, many processing techniques were the basis for a new style of images. Think about HDR. There was a time when so many images you found on the web were "unreal" HDRs. They had their charm and looked great even to me, but soon people grew tired of the look and the hype was over. The same happens with colors and saturation. Many images look really saturated and, in a way, beautiful. But I prefer the more natural-looking ones most of the time. In this respect, I seem to belong to a minority.

Astrophotography is slower, because we need time to collect data for one single final image. The world is not flooded with good images, because the signals are too faint to be captured by the masses. That being said, we can recognize some development in AP as well. There are different styles of images out there, but they are not as obvious as in other areas of photography. We are a niche, and developing tools for us is not attractive to most programmers. But there is obviously some improvement.

During processing, I compare my work with final images made by other people. But I realize that I often take a different road than the one I had in mind in the first place, just because I did not like the results on my own image. I present my images to others here on AstroBin like we all do, but I am less interested in what other people think. This may sound like an excuse not to put time into improving my skills, but it isn't. I just want to share what I created and get in touch with people.

To summarize all this, I am more than happy to choose what I prefer to do with my images. AI is helpful in this case, but it isn't mandatory. If you decide to keep it simple and you take your time to get the results you want, I am more than willing to honour your work. It's completely up to you how your images look and what you are trying to achieve. Everything is right, as long as you take the time to think about it and make your decision. That's art, and no style is beautiful to everyone.

CS

Christian

I agree with Christian. Maybe I am still a newbie, but I can't get an image I would want to share without stacking, background extraction, BlurXTerminator, some StarXTerminator and color calibration (depending on the image type). All of those are different elements of AI. I like to think of image processing as art and image capture as science. If I am honest, I am not an artsy guy, so the quicker the path to something I find pretty and can be proud of, the better. If my nebula has more red or blue than the "real thing", I can live with that.

Everything you listed except BX and SX is pure math and absolutely necessary, not AI. As for BlurXTerminator, the AI part is most likely guessing the optimal PSF to use. Deconvolution itself is not that complicated and has been done forever in many different fields. None of the AI tools will make a difference as large as the one described in the original post, and they will not introduce data that wasn't there to begin with. In fact, NoiseXTerminator will make you lose data. What will really make a difference is extreme attention to detail and good knowledge, from the choice of equipment to data processing and everything in between.
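As an example of the "pure math" side, stacking averages many registered subframes while rejecting outliers such as satellite trails or hot pixels. A minimal sketch of kappa-sigma clipping, assuming the frames are already calibrated and aligned and flattened to plain lists; kappa = 2.5 and three iterations are illustrative values, not the defaults of DSS, PixInsight or any other stacker.

```python
import statistics


def sigma_clip_stack(frames, kappa=2.5, max_iterations=3):
    """Combine calibrated, aligned frames pixel by pixel with a kappa-sigma clipped mean.

    frames: list of frames, each a flattened list of pixel values of equal length.
    Values further than kappa * stdev from the median are rejected before averaging.
    """
    stacked = []
    for i in range(len(frames[0])):
        values = [frame[i] for frame in frames]
        for _ in range(max_iterations):
            if len(values) < 3:
                break  # too few samples left for a meaningful spread
            centre = statistics.median(values)
            spread = statistics.pstdev(values)
            kept = [v for v in values if abs(v - centre) <= kappa * spread]
            if not kept or len(kept) == len(values):
                break  # nothing (or everything) rejected: stop clipping
            values = kept
        stacked.append(statistics.mean(values))
    return stacked


# Ten hypothetical 2-pixel frames; frame 7 has a satellite trail hitting pixel 1.
frames = [[0.2, 0.2] for _ in range(10)]
frames[7][1] = 1.0
print(sigma_clip_stack(frames))
```

A plain mean of pixel 1 would be 0.28 because of the trail; the clipped mean rejects the outlier and returns 0.2.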

|

What would really help would be to see these two images: a case where the one with less integration time and worse sky conditions is not beaten by the other.
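The expectation behind that remark can be put in numbers. A rough sketch, assuming both stacks record the same signal per frame from the same sky and are shot-noise limited, in which case SNR grows with the square root of total integration time; the frame counts (121 vs 34 subs of 300 s) are the ones from the original post. It deliberately ignores the sky brightness difference and the filter; a brighter sky adds background noise, so accounting for it would, if anything, widen the gap in favour of the longer stack from darker skies.

```python
import math


def relative_snr(frames_a, frames_b, exposure_a_s=300.0, exposure_b_s=300.0):
    """SNR of stack A relative to stack B, assuming shot-noise-limited data.

    With identical equipment, gain, target and sky, SNR scales as the square
    root of total integration time, so only the time ratio matters here.
    """
    return math.sqrt((frames_a * exposure_a_s) / (frames_b * exposure_b_s))


hours_a = 121 * 300 / 3600
hours_b = 34 * 300 / 3600
ratio = relative_snr(121, 34)
print(f"{hours_a:.1f} h vs {hours_b:.1f} h -> about {ratio:.2f}x the SNR")
```

Under these assumptions the 121-frame stack should have roughly 1.9 times the SNR of the 34-frame stack.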

|

James:

So, either I'm terrible at processing (I don't think I am) or they are using AI.

Back to your original premise: I suggest you ask them for their processing steps and compare them with yours. Recognize that the steps are one part; how you use those steps is where the skill comes in. Someone gave an example of curves: you can both use curves as the same step, but the results can be wildly different depending on how well you understand and use curves. You may not be terrible at processing, but you may not be great either. Astro image processing is imo much more akin to signal processing than WYSIWYG-type image processing.

Brian
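The point that the same step can give wildly different results is easy to see with curves. A minimal sketch, assuming pixel values in [0, 1] and control points with strictly increasing inputs; real curves tools interpolate with smooth splines, and linear segments are used here only to keep it short. The two curves are hypothetical: both are "a curves step", but one is a gentle S-curve and the other crushes the shadows.

```python
from bisect import bisect_right


def apply_curve(pixels, points):
    """Map pixel values through a tone curve given as (input, output) control points.

    points must start at input 0.0, end at input 1.0 and have strictly increasing
    inputs. Values between control points are linearly interpolated.
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    out = []
    for v in pixels:
        v = min(max(v, 0.0), 1.0)
        i = min(bisect_right(xs, v), len(xs) - 1)  # first control point to the right
        x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
        out.append(y0 + (v - x0) / (x1 - x0) * (y1 - y0))
    return out


pixels = [0.10, 0.25, 0.50, 0.75]
gentle_s = [(0.0, 0.0), (0.25, 0.20), (0.75, 0.82), (1.0, 1.0)]
crushed = [(0.0, 0.0), (0.40, 0.05), (1.0, 1.0)]
print([round(v, 3) for v in apply_curve(pixels, gentle_s)])
print([round(v, 3) for v in apply_curve(pixels, crushed)])
```

Same input, same kind of step, very different output: the gentle S-curve adds a little contrast, while the second curve pushes faint signal into the background (0.25 becomes about 0.03).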

|

Gilmour Dickson:

What would really help would be to see these two images: a case where the one with less integration time and worse sky conditions is not beaten by the other.

I thought of this but didn't want to cast aspersions on the other photographer, especially since I'm new to the art and it's very likely they are just better than me at processing. It just stood out to me that I have the same equipment (except that I used a multi-bandpass filter), much better skies and the same settings. Of course, anyone can look at my photos. Likely, you'll come to the conclusion that I have more to learn.

|
